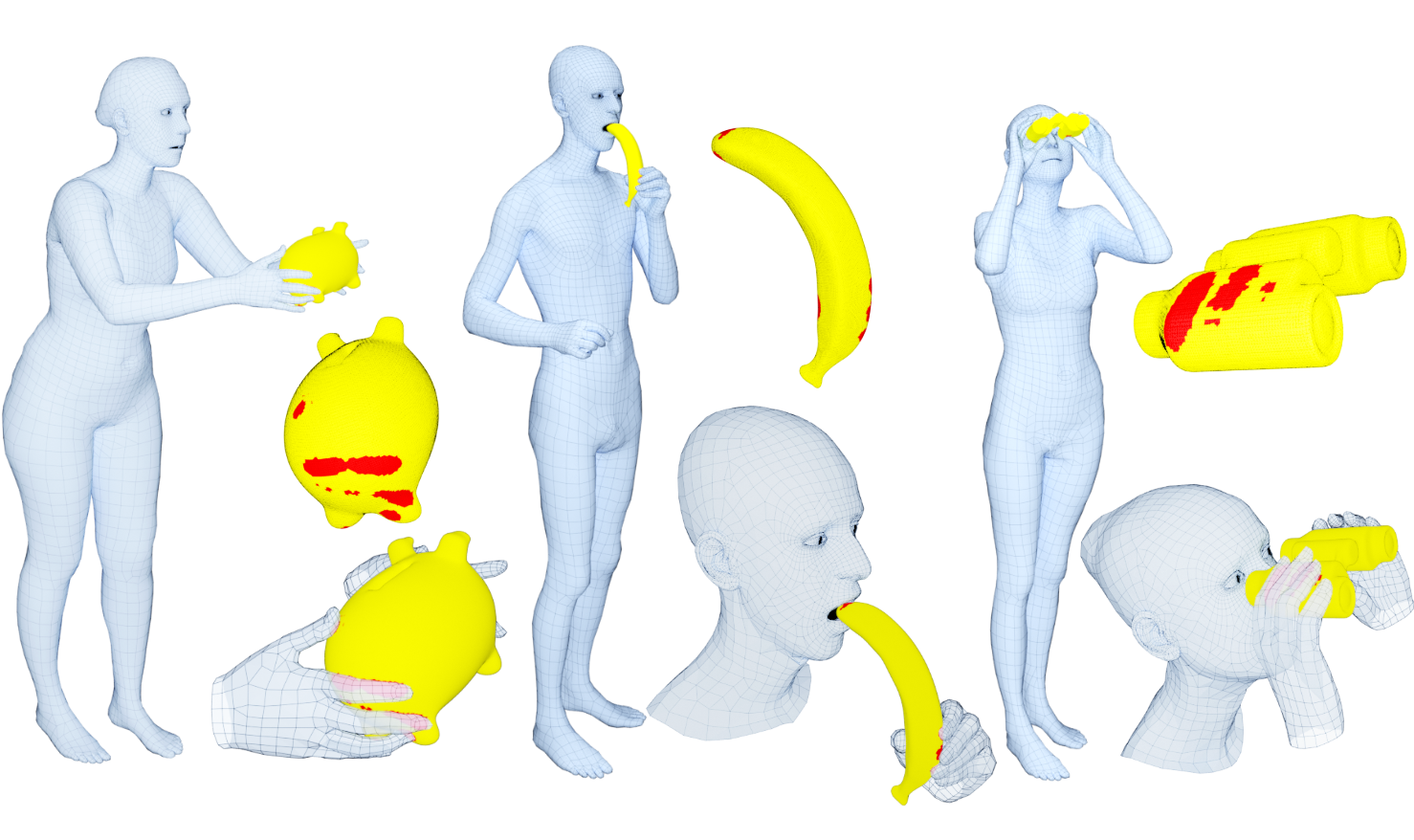

Training computers to understand, model, and synthesize human grasping requires a rich dataset containing complex 3D object shapes, detailed contact information, hand pose and shape, and the 3D body motion over time. While "grasping" is commonly thought of as a single hand stably lifting an object, we capture the motion of the entire body and adopt the generalized notion of "whole-body grasps". Thus, we collect a new dataset, called GRAB (GRasping Actions with Bodies), of whole-body grasps, containing full 3D shape and pose sequences of 10 subjects interacting with 51 everyday objects of varying shape and size. Given MoCap markers, we fit the full 3D body shape and pose, including the articulated face and hands, as well as the 3D object pose. This gives detailed 3D meshes over time, from which we compute contact between the body and object. This is a unique dataset that goes well beyond existing ones for modeling and understanding how humans grasp and manipulate objects, how their full body is involved, and how interaction varies with the task. We illustrate the practical value of GRAB with an example application; we train GrabNet, a conditional generative network, to predict 3D hand grasps for unseen 3D object shapes. The dataset and code are available for research purposes at https://grab.is.tue.mpg.de.

| Field | Value |
| --- | --- |
| Author(s): | Omid Taheri and Nima Ghorbani and Michael J. Black and Dimitrios Tzionas |
| Book Title: | Computer Vision – ECCV 2020 |
| Volume: | 4 |
| Pages: | 581--600 |
| Year: | 2020 |
| Month: | August |
| Series: | Lecture Notes in Computer Science, 12349 |
| Editors: | Vedaldi, Andrea and Bischof, Horst and Brox, Thomas and Frahm, Jan-Michael |
| Publisher: | Springer |
| Bibtex Type: | Conference Paper (inproceedings) |
| Address: | Cham |
| DOI: | 10.1007/978-3-030-58548-8_34 |
| Event Name: | 16th European Conference on Computer Vision (ECCV 2020) |
| Event Place: | Glasgow, UK |
| State: | Published |
| URL: | https://grab.is.tue.mpg.de |
| Electronic Archiving: | grant_archive |
| ISBN: | 978-3-030-58547-1 |

BibTeX

@inproceedings{GRAB:2020,

title = {GRAB: A Dataset of Whole-Body Human Grasping of Objects},

booktitle = {Computer Vision -- ECCV 2020},

abstract = {Training computers to understand, model, and synthesize human grasping requires a rich dataset containing complex 3D object shapes, detailed contact information, hand pose and shape, and the 3D body motion over time. While "grasping" is commonly thought of as a single hand stably lifting an object, we capture the motion of the entire body and adopt the generalized notion of "whole-body grasps". Thus, we collect a new dataset, called GRAB (GRasping Actions with Bodies), of whole-body grasps, containing full 3D shape and pose sequences of 10 subjects interacting with 51 everyday objects of varying shape and size. Given MoCap markers, we fit the full 3D body shape and pose, including the articulated face and hands, as well as the 3D object pose. This gives detailed 3D meshes over time, from which we compute contact between the body and object. This is a unique dataset that goes well beyond existing ones for modeling and understanding how humans grasp and manipulate objects, how their full body is involved, and how interaction varies with the task. We illustrate the practical value of GRAB with an example application; we train GrabNet, a conditional generative network, to predict 3D hand grasps for unseen 3D object shapes. The dataset and code are available for research purposes at https://grab.is.tue.mpg.de.},

volume = {4},

pages = {581--600},

series = {Lecture Notes in Computer Science, 12349},

editor = {Vedaldi, Andrea and Bischof, Horst and Brox, Thomas and Frahm, Jan-Michael},

publisher = {Springer},

address = {Cham},

month = aug,

year = {2020},

slug = {grab-2020},

author = {Taheri, Omid and Ghorbani, Nima and Black, Michael J. and Tzionas, Dimitrios},

url = {https://grab.is.tue.mpg.de},

month_numeric = {8}

}