Vincent Stimper

Guest Researcher

Overview

I was a PhD student in the ELLIS program, supervised by Dr. Jose Miguel Hernandez Lobato (University of Cambridge) and Prof. Bernhard Schölkopf (Max Planck Institute for Intelligent Systems). During my PhD, I worked on probabilistic modeling and representation learning, with a focus on normalizing flows and applications to the physical sciences. My mission is to create theoretically substantiated machine learning algorithms for natural science and engineering applications.

For more recent updates, please visit my personal website.

Latest Project

SE(3) Equivariant Augmented Coupling Flows

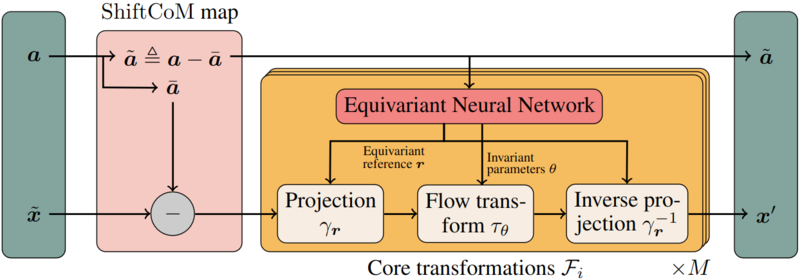

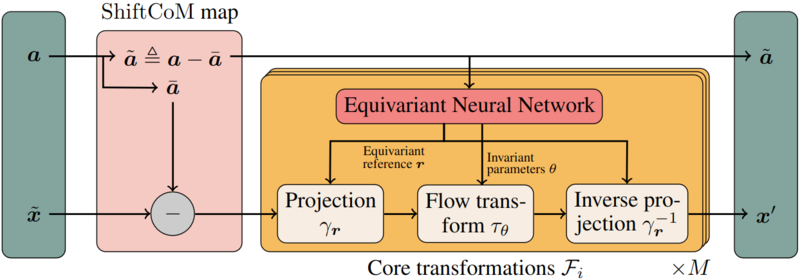

Coupling normalizing flows allow for fast sampling and density evaluation, making them the tool of choice for probabilistic modeling of physical systems. However, the standard coupling architecture precludes endowing flows that operate on the Cartesian coordinates of atoms with the SE(3) and permutation invariances of physical systems. This work proposes a coupling flow that preserves SE(3) and permutation equivariance by performing coordinate splits along additional augmented dimensions. At each layer, the flow maps atoms' positions into learned SE(3) invariant bases, where we apply standard flow transformations, such as monotonic rational-quadratic splines, before returning to the original basis. Crucially, our flow preserves fast sampling and density evaluation, and may be used to produce unbiased estimates of expectations with respect to the target distribution via importance sampling.

Concurrently with Klein et al. [2023], we are the first to learn the full Boltzmann distribution of alanine dipeptide by only modeling the Cartesian positions of its atoms.

The article is published in NeurIPS 2023, and the code is publicly available on GitHub.
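
To illustrate why coupling layers give fast sampling and density evaluation, here is a minimal NumPy sketch of a plain affine coupling layer. It is not the SE(3) equivariant flow from the article: the split into two fixed halves, the `conditioner` function, and the random weights `W_s` and `W_t` are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical fixed weights standing in for a trained conditioner network.
W_s = rng.normal(scale=0.1, size=(2, 2))
W_t = rng.normal(scale=0.1, size=(2, 2))

def conditioner(x_a):
    """Map the untouched half to a log-scale and a shift for the other half."""
    return np.tanh(x_a @ W_s), x_a @ W_t

def forward(x):
    """Transform x -> y; log|det J| is simply the sum of the log-scales."""
    x_a, x_b = x[:, :2], x[:, 2:]
    log_s, t = conditioner(x_a)
    y_b = x_b * np.exp(log_s) + t
    return np.concatenate([x_a, y_b], axis=1), log_s.sum(axis=1)

def inverse(y):
    """Invert the layer in closed form; the first half was never changed."""
    y_a, y_b = y[:, :2], y[:, 2:]
    log_s, t = conditioner(y_a)
    x_b = (y_b - t) * np.exp(-log_s)
    return np.concatenate([y_a, x_b], axis=1), -log_s.sum(axis=1)

x = rng.normal(size=(5, 4))
y, log_det = forward(x)
x_rec, inv_log_det = inverse(y)
```

Because one half of the input passes through unchanged, both directions cost a single evaluation of the conditioner, and the Jacobian determinant never requires a matrix decomposition.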

Education

- Jan 2020 - May 2024: PhD in Engineering (Machine Learning), University of Cambridge, and Max Planck Institute for Intelligent Systems, Tübingen, Germany

- Oct 2017 - Sep 2019: Master of Science in Physics, Technical University Munich, Munich, Germany

- Oct 2014 - Sep 2017: Bachelor of Science in Physics, Technical University Munich, Munich, Germany

Internships & Employment

- Jul 2023 - Sep 2023: Research Intern, Microsoft, Berlin, Germany

- Jun 2021 - Sep 2021: Applied Science Intern, Amazon, Berlin, Germany

- Oct 2019 - Dec 2019: Applied Science Intern, Amazon, Tübingen, Germany

- Dec 2017 - May 2018: Research Assistant, Ludwig Maximilian University of Munich, Munich, Germany

- Jul 2017 - Sep 2017: Research Intern, Ontario Institute for Cancer Research, Toronto, Canada

You can find my full CV here (last update: October 6, 2023).

A selection of my research projects is listed below. A full list of my research articles can be found on my Google Scholar profile.

Flow Annealed Importance Sampling Bootstrap

Normalizing flows can approximate complicated Boltzmann distributions of physical systems. However, current methods for training flows either suffer from mode-seeking behavior, use samples from the target generated beforehand by expensive MCMC simulations, or use stochastic losses that have very high variance. We tackle this challenge by augmenting flows with annealed importance sampling (AIS) and minimizing the mass-covering α-divergence with α=2, which minimizes importance weight variance. Our method, Flow AIS Bootstrap (FAB), uses AIS to generate samples in regions where the flow is a poor approximation of the target, facilitating the discovery of new modes.

To the best of our knowledge, we are the first to learn the Boltzmann distribution of the alanine dipeptide molecule using only the unnormalized target density and without access to samples generated via Molecular Dynamics (MD) simulations: FAB produces better results than training via maximum likelihood on MD samples while using 100 times fewer target evaluations. After reweighting samples with importance weights, we obtain unbiased histograms of dihedral angles that are almost identical to the ground truth ones, as can be seen in the figure below.

For more details, please have a look at our full article published at ICLR 2023 and check out our code on GitHub.

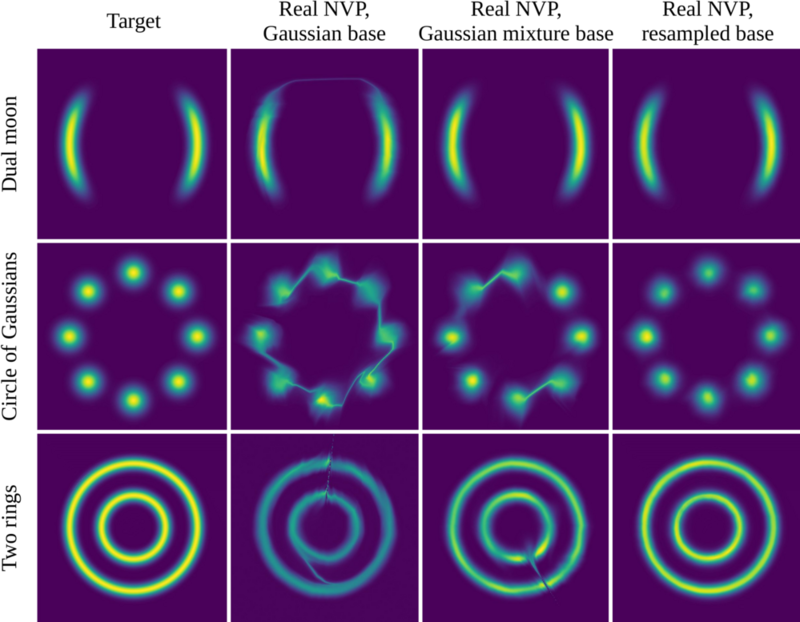
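
The reweighting step mentioned above can be sketched with self-normalized importance sampling. This is not FAB itself and contains no AIS: the bimodal `log_target`, the Gaussian `log_proposal` standing in for a trained flow, and all constants are hypothetical choices for illustration.

```python
import numpy as np

def log_target(x):
    """Unnormalized log-density of a hypothetical bimodal target (modes at -2 and +2)."""
    return np.logaddexp(-0.5 * (x + 2.0) ** 2, -0.5 * (x - 2.0) ** 2)

def log_proposal(x, scale=3.0):
    """Log-density of a zero-mean Gaussian proposal standing in for the flow."""
    return -0.5 * (x / scale) ** 2 - np.log(scale * np.sqrt(2.0 * np.pi))

rng = np.random.default_rng(0)
x = rng.normal(scale=3.0, size=10_000)   # samples from the proposal

log_w = log_target(x) - log_proposal(x)
w = np.exp(log_w - log_w.max())          # subtract the max for numerical stability
w_norm = w / w.sum()                     # self-normalized importance weights

ess = 1.0 / np.sum(w_norm ** 2)          # effective sample size
mean_x2 = np.sum(w_norm * x ** 2)        # reweighted estimate of E[x^2] under the target
```

The effective sample size shrinks as the variance of the weights grows, which is one way to see why a training objective that targets low weight variance is attractive when the samples will be reweighted afterwards.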

Resampling Base Distributions of Normalizing Flows

Due to their invertible nature, normalizing flows struggle to model distributions with complex topological structure, e.g. multimodal distributions or distributions with rings and tori. To address this issue, we developed a resampled base distribution which can assume arbitrarily complex topological structures, thereby boosting the performance of the flow model without sacrificing invertibility.

The code is available on GitHub and the article was published in AISTATS 2022.
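
One way to picture a resampled base distribution is rejection sampling from a Gaussian with an acceptance function. The sketch below is an illustration under assumptions, not the published implementation: the hand-written `acceptance` function stands in for a learned component, and `max_tries` is a hypothetical truncation that keeps the sampling time bounded.

```python
import numpy as np

def acceptance(z):
    """Hypothetical acceptance probability in (0, 1]; highest on the ring of radius 2."""
    r = np.linalg.norm(z, axis=-1)
    return np.exp(-2.0 * (r - 2.0) ** 2)

def sample_resampled_base(n, max_tries=100, seed=0):
    """Draw n points from a 2D Gaussian reshaped by accept/reject steps."""
    rng = np.random.default_rng(seed)
    out = np.empty((n, 2))
    for i in range(n):
        for attempt in range(max_tries):
            z = rng.normal(size=2)
            # Accept with probability a(z); always keep the final attempt.
            if rng.uniform() < acceptance(z) or attempt == max_tries - 1:
                break
        out[i] = z
    return out

samples = sample_resampled_base(2000)
radii = np.linalg.norm(samples, axis=1)
```

The accepted points concentrate on a ring and leave a hole near the origin, a topology that a single Gaussian base cannot provide. An invertible flow applied on top of these samples keeps that structure.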

Reconstruction of the Electronic Band Structure

The electronic band structure characterizes important properties of materials. We developed an unsupervised learning procedure, based on a Markov Random Field, to reconstruct it from photoemission spectroscopy data. The method can be applied to datasets with various numbers of dimensions. Thereby, we were able to reconstruct the full 3D valence band structure of tungsten diselenide for the first time.

The code is available on GitHub and the article is published in Nature Computational Science.

Contrast Enhancement of High-Dimensional Data

In image processing and computer vision, contrast enhancement is an important preprocessing technique for improving the performance of downstream tasks. We extended an existing approach for 2D images, namely contrast limited adaptive histogram equalization (CLAHE), to data with an arbitrary number of dimensions. To demonstrate our procedure, called multidimensional CLAHE (MCLAHE), we applied it to a 4D photoemission spectroscopy dataset. As you can see below, MCLAHE reveals the excitation of an electronic band in the experiment.

The implementation uses TensorFlow and supports parallelization across multiple CPUs and various other hardware accelerators, including GPUs. It is available on GitHub, and the full article is published in IEEE Access.
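
The core idea of clip-limited histogram equalization carries over to arrays of any dimensionality, as the simplified NumPy sketch below shows. It is deliberately not MCLAHE: it uses one global histogram instead of local tiles with interpolation, and the function name, `n_bins`, and `clip_limit` are assumptions for illustration.

```python
import numpy as np

def clipped_hist_equalize(data, n_bins=256, clip_limit=0.01):
    """Global, clip-limited histogram equalization of an array of any dimensionality."""
    flat = data.ravel().astype(float)
    hist, edges = np.histogram(flat, bins=n_bins)
    hist = hist.astype(float)
    # Clip bins above the limit and redistribute the excess uniformly.
    limit = max(clip_limit * flat.size, 1.0)
    excess = np.sum(np.maximum(hist - limit, 0.0))
    hist = np.minimum(hist, limit) + excess / n_bins
    cdf = np.cumsum(hist) / hist.sum()
    # Map every value through the cumulative distribution of its bin.
    bin_idx = np.clip(np.digitize(flat, edges[1:-1]), 0, n_bins - 1)
    return cdf[bin_idx].reshape(data.shape)

rng = np.random.default_rng(0)
data = rng.gamma(2.0, size=(8, 8, 8, 8))   # hypothetical 4D dataset
enhanced = clipped_hist_equalize(data)
```

Clipping the histogram before building the cumulative distribution limits how strongly densely populated intensity ranges are stretched, which keeps noise from being amplified.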