3-D Object Reconstruction of Symmetric Objects by Fusing Visual and Tactile Sensing

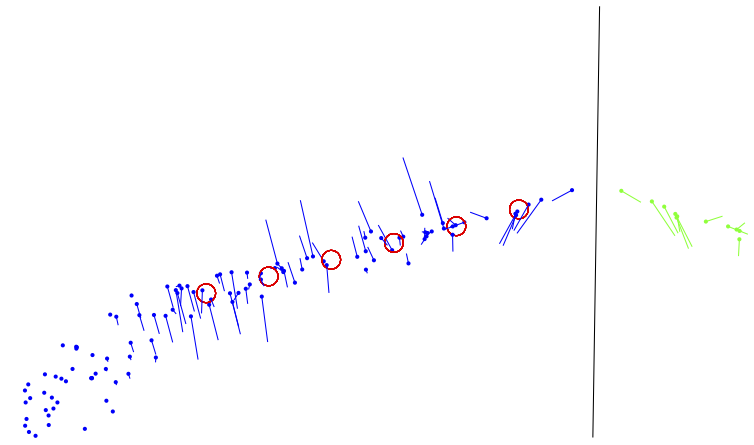

In this work, we propose to reconstruct a complete 3-D model of an unknown object by fusing visual and tactile information while the object is grasped. Assuming the object is symmetric, a first hypothesis of its complete 3-D shape is generated. A grasp is then executed on the object with a robotic manipulator equipped with tactile sensors. Given the detected contacts between the fingers and the object, the initial full object model, including the symmetry parameters, can be refined. This refined model then allows the planning of more complex manipulation tasks. The main contribution of this work is an optimal estimation approach for fusing visual and tactile data under the constraint of object symmetry. The fusion is formulated as a state estimation problem and solved with an iterative extended Kalman filter. The approach is validated experimentally using both artificial and real data from two different robotic platforms.
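The abstract names the estimator but not its state or measurement models, so the following is only a minimal sketch of an iterated extended Kalman filter (IEKF) measurement update in a deliberately reduced, hypothetical setting; it is not the authors' formulation. Assumptions: the object is a 2-D circle (a cross-section of a rotationally symmetric object) with state `[cx, cy, r]`; vision supplies the prior mean and covariance; each tactile contact is a point that should lie on the surface, so the residual `||p - c|| - r` is observed as 0 with an assumed variance `meas_var`. The function names `contact_residuals`, `contact_jacobian`, `kalman_gain` and `iekf_update` are illustrative only.

```python
import numpy as np


def contact_residuals(x, contacts):
    """Hypothetical tactile model: distance of each contact from a circle.

    x = [cx, cy, r]; contacts is an (m, 2) array of contact points.
    A contact lying exactly on the circle has residual 0.
    """
    return np.linalg.norm(contacts - x[:2], axis=1) - x[2]


def contact_jacobian(x, contacts):
    """Analytic Jacobian of contact_residuals with respect to [cx, cy, r]."""
    diff = contacts - x[:2]
    dist = np.linalg.norm(diff, axis=1, keepdims=True)
    # d||p - c||/dc = -(p - c)/||p - c||, and d(-r)/dr = -1.
    return np.hstack([-diff / dist, -np.ones((len(contacts), 1))])


def kalman_gain(P, H, R):
    """K = P H^T (H P H^T + R)^-1, computed with a linear solve."""
    S = H @ P @ H.T + R
    # P and S are symmetric, so solve(S, H P).T equals P H^T S^-1.
    return np.linalg.solve(S, H @ P).T


def iekf_update(x_prior, P_prior, contacts, meas_var, max_iter=20, tol=1e-10):
    """Iterated EKF measurement update; every residual is observed as 0."""
    x_prior = np.asarray(x_prior, dtype=float)
    m = len(contacts)
    z = np.zeros(m)
    R = meas_var * np.eye(m)
    x_i = x_prior.copy()
    for _ in range(max_iter):
        H = contact_jacobian(x_i, contacts)
        K = kalman_gain(P_prior, H, R)
        # Relinearise about x_i while keeping x_prior as the anchor point.
        innovation = z - contact_residuals(x_i, contacts) - H @ (x_prior - x_i)
        x_next = x_prior + K @ innovation
        step = np.linalg.norm(x_next - x_i)
        x_i = x_next
        if step < tol:
            break
    # Posterior covariance from the linearisation at the final iterate.
    H = contact_jacobian(x_i, contacts)
    K = kalman_gain(P_prior, H, R)
    P_post = (np.eye(len(x_i)) - K @ H) @ P_prior
    return x_i, P_post


if __name__ == "__main__":
    x0 = np.array([0.0, 0.0, 1.0])      # visual prior: centre (0, 0), radius 1
    P0 = np.diag([0.01, 0.01, 0.25])    # radius poorly constrained by vision
    contacts = np.array([[1.5, 0.0], [-1.5, 0.0], [0.0, 1.5], [0.0, -1.5]])
    x_post, P_post = iekf_update(x0, P0, contacts, meas_var=1e-4)
    print("posterior state:", x_post)
    print("posterior std:", np.sqrt(np.diag(P_post)))
```

Relinearising at each iterate while keeping the prior mean as the anchor is what distinguishes the IEKF from a single EKF step; with a nonlinear contact model it typically reduces linearisation error when the prior is far from the contacts. In the usage example, four contacts on a circle of radius 1.5 pull the poorly known radius from 1.0 to roughly 1.5, while the well-known centre stays at the origin.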

| Field | Value |
|---|---|
| Author(s) | Illonen, J. and Bohg, J. and Kyrki, V. |
| Journal | The International Journal of Robotics Research |
| Volume | 33 |
| Number (issue) | 2 |
| Pages | 321–341 |
| Year | 2013 |
| Month | October |
| Publisher | Sage |
| BibTeX type | Article (article) |
| DOI | 10.1177/0278364913497816 |

BibTeX

@article{Illonen_IJRR_2013,
  title = {3-D Object Reconstruction of Symmetric Objects by Fusing Visual and Tactile Sensing},
  journal = {The International Journal of Robotics Research},
  abstract = {In this work, we propose to reconstruct a complete 3-D model of an unknown object by fusion of visual and tactile information while the object is grasped. Assuming the object is symmetric, a first hypothesis of its complete 3-D shape is generated. A grasp is executed on the object with a robotic manipulator equipped with tactile sensors. Given the detected contacts between the fingers and the object, the initial full object model including the symmetry parameters can be refined. This refined model will then allow the planning of more complex manipulation tasks. The main contribution of this work is an optimal estimation approach for the fusion of visual and tactile data applying the constraint of object symmetry. The fusion is formulated as a state estimation problem and solved with an iterative extended Kalman filter. The approach is validated experimentally using both artificial and real data from two different robotic platforms.},
  volume = {33},
  number = {2},
  pages = {321--341},
  publisher = {Sage},
  month = oct,
  year = {2013},
  slug = {illonen_ijrr_2013},
  author = {Illonen, J. and Bohg, J. and Kyrki, V.},
  month_numeric = {10}
}