Machine learning methods for soft millirobot design and control

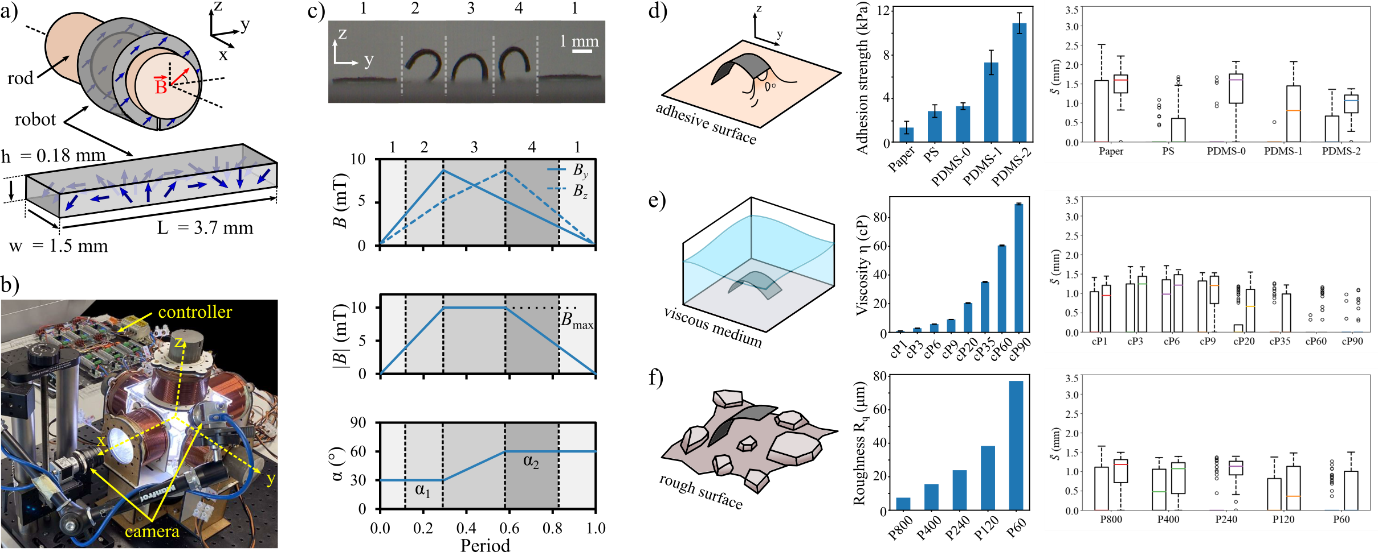

Untethered small-scale soft robots have promising applications in minimally invasive surgery, targeted drug delivery, and bioengineering, as they can directly and non-invasively access confined, hard-to-reach spaces in the human body. For such biomedical applications, the robot controller must be adaptive to ensure continuity of operations, because task environment conditions vary dynamically and can alter the robot's motion and task performance. Conventional modeling and control methods are of limited use for small soft robots: their kinematics have virtually infinite degrees of freedom, their properties vary stochastically from one fabrication run to the next, and their dynamics change during real-world interactions. To adapt the controller to dynamically changing task environments, we propose in [] a probabilistic learning approach for a millimeter-scale magnetic walking soft robot based on Bayesian optimization (BO) and Gaussian processes (GPs). The approach is data-efficient: it finds the gait controller parameters that optimize the stride length of the walking soft millirobot using only a limited number of physical experiments. To demonstrate controller adaptation, in [], we test the robot's walking gait in task environments with different adhesion properties, medium viscosities, and surface roughnesses. We also transfer the learned GP model among different task spaces and robots and study its effect on improving controller learning performance.
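As a rough illustration of the learning loop described above, the following Python sketch runs Bayesian optimization with a GP surrogate and an expected-improvement acquisition function over two normalized gait parameters. Everything in it is an assumption made for illustration, not the method from []: the synthetic `make_task` objective is a hypothetical stand-in for physical stride-length measurements, and the squared-exponential kernel with fixed hyperparameters, the candidate grid, and expected improvement are choices made here. The hypothetical `transfer_prior` helper shows one simple way to reuse a GP learned on one task, using its posterior mean as the prior mean of the GP for a new task.

```python
import math

import numpy as np

LENGTH_SCALE = 0.2  # assumed kernel length scale (normalized parameter units)
SIGNAL_VAR = 1.0    # assumed prior variance of the GP
NOISE_VAR = 1e-3    # assumed observation noise variance (also acts as jitter)


def rbf_kernel(A, B):
    """Squared-exponential kernel between the rows of A (n, d) and B (m, d)."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return SIGNAL_VAR * np.exp(-0.5 * np.maximum(sq, 0.0) / LENGTH_SCALE**2)


def gp_posterior(X, y, Xq, prior_mean=None):
    """GP posterior mean and standard deviation at query points Xq.

    prior_mean is an optional callable m(Z) -> array of len(Z); zero mean if None.
    """
    m = prior_mean if prior_mean is not None else (lambda Z: np.zeros(len(Z)))
    K = rbf_kernel(X, X) + NOISE_VAR * np.eye(len(X))
    L = np.linalg.cholesky(K)                                  # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y - m(X)))  # K^-1 (y - m(X))
    Ks = rbf_kernel(X, Xq)
    mu = m(Xq) + Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.maximum(SIGNAL_VAR - np.sum(v**2, axis=0), 1e-12)
    return mu, np.sqrt(var)


def expected_improvement(mu, sigma, best, xi=0.01):
    """Expected improvement over the best observed value (maximization)."""
    imp = mu - best - xi
    z = imp / sigma
    cdf = 0.5 * (1.0 + np.array([math.erf(t / math.sqrt(2.0)) for t in z]))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return imp * cdf + sigma * pdf


def make_task(peak, width=0.1, noise_std=0.01):
    """HYPOTHETICAL stand-in for physical walking experiments in one task
    environment: a noisy 'stride length' over normalized gait parameters in
    [0, 1]^2 that peaks at `peak`. Not a model of the real robot."""
    peak = np.asarray(peak, dtype=float)

    def measure(theta, rng):
        d2 = float(np.sum((np.asarray(theta) - peak) ** 2))
        return math.exp(-d2 / width) + noise_std * rng.standard_normal()

    return measure


def bayes_opt(objective, n_init=3, n_iter=20, seed=0, prior_mean=None):
    """Maximize `objective` over [0, 1]^2 with GP-based BO and EI."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 21)
    cand = np.array([[a, b] for a in grid for b in grid])  # candidate gait parameters
    X = rng.uniform(size=(n_init, 2))                      # initial random experiments
    y = np.array([objective(x, rng) for x in X])
    for _ in range(n_iter):
        mu, sd = gp_posterior(X, y, cand, prior_mean)
        x_next = cand[np.argmax(expected_improvement(mu, sd, y.max()))]
        X = np.vstack([X, x_next])
        y = np.append(y, objective(x_next, rng))  # one more "experiment"
    return X, y


def transfer_prior(X_src, y_src):
    """Assumed transfer scheme: the source-task GP posterior mean becomes
    the prior mean of the target-task GP."""
    return lambda Z: gp_posterior(X_src, y_src, Z)[0]


if __name__ == "__main__":
    X_src, y_src = bayes_opt(make_task(peak=(0.6, 0.3)))
    print("source best:", X_src[np.argmax(y_src)], round(float(y_src.max()), 3))
    # Hypothetical new environment with a shifted optimum, warm-started from the source GP.
    X_tgt, y_tgt = bayes_opt(make_task(peak=(0.55, 0.4)), n_iter=8,
                             prior_mean=transfer_prior(X_src, y_src))
    print("target best:", X_tgt[np.argmax(y_tgt)], round(float(y_tgt.max()), 3))
```

Because each evaluation stands in for a physical experiment, the evaluation budget (`n_init + n_iter`) is kept small. With the transferred prior mean, the target-task GP only has to model the difference from the source task's landscape, so the sketch gives the target run a shorter budget (`n_iter=8`).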
