Autonomous Motion

Intelligent Control Systems

Conference Paper

2018

Probabilistic Recurrent State-Space Models

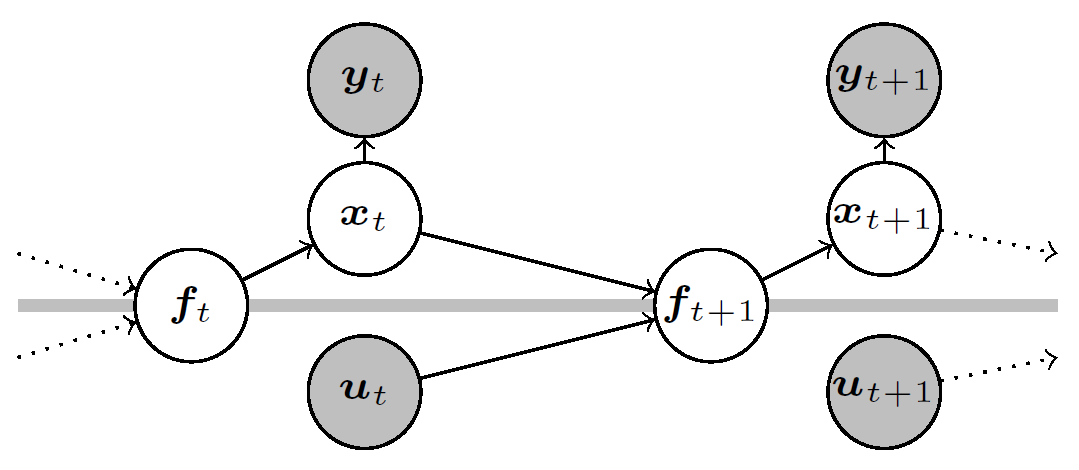

State-space models (SSMs) are a highly expressive model class for learning patterns in time-series data and for system identification. Deterministic versions of SSMs (e.g., LSTMs) have proved extremely successful in modeling complex time-series data. Fully probabilistic SSMs, however, are often hard to train, even for smaller problems. To overcome this limitation, we propose a scalable initialization and training algorithm based on doubly stochastic variational inference and Gaussian processes. In contrast to related approaches, the proposed variational approximation fully captures the temporal correlations of the latent state, which allows for robust training.
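To make the idea of sampling-based ("doubly stochastic") variational inference for a GP state-space model more concrete, here is a minimal NumPy sketch. It is not the authors' implementation. The 1-D latent state, the squared-exponential kernel, the sparse GP with hypothetical inducing inputs `z` and variational distribution q(u) = N(m, S), the identity observation model, the fixed noise levels, and all function names (`rbf`, `sparse_gp_marginal`, `gauss_kl`, `elbo_estimate`) are illustrative assumptions. The point it shows is that each Monte Carlo trajectory is propagated through the learned transition, so the sampled state at time t+1 depends on that trajectory's state at time t instead of being drawn from an independent per-step Gaussian.

```python
import numpy as np


def rbf(a, b, variance=1.0, lengthscale=1.0):
    """Squared-exponential kernel matrix between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)


def sparse_gp_marginal(x, z, m, S, jitter=1e-6):
    """Mean and variance of q(f(x)), obtained by integrating p(f(x) | u)
    against q(u) = N(m, S); u are GP values at hypothetical inducing inputs z."""
    Kzz = rbf(z, z) + jitter * np.eye(len(z))
    Kxz = rbf(x, z)
    A = np.linalg.solve(Kzz, Kxz.T).T              # Kxz Kzz^{-1}, shape (n, M)
    mean = A @ m
    prior_var = np.ones(len(x))                    # diag of rbf(x, x) for variance=1
    var = prior_var - np.sum(A * Kxz, axis=1) + np.sum((A @ S) * A, axis=1)
    return mean, np.maximum(var, 1e-12)            # clip tiny negatives from round-off


def gauss_kl(m, S, K):
    """KL( N(m, S) || N(0, K) ) for the inducing-output distribution."""
    _, logdet_K = np.linalg.slogdet(K)
    _, logdet_S = np.linalg.slogdet(S)
    return 0.5 * (np.trace(np.linalg.solve(K, S)) + m @ np.linalg.solve(K, m)
                  - len(m) + logdet_K - logdet_S)


def elbo_estimate(y, z, m, S, x0_mean, x0_std, q_std, r_std, n_samples, rng):
    """Monte Carlo ELBO estimate from n_samples latent trajectories.

    Assumed model: x_{t+1} = f(x_t) + N(0, q_std^2), y_t = x_t + N(0, r_std^2),
    with q(x_0) = p(x_0) = N(x0_mean, x0_std^2), so there is no initial-state KL.
    """
    x = x0_mean + x0_std * rng.standard_normal(n_samples)
    expected_loglik = 0.0
    for t in range(len(y)):
        # Gaussian log-likelihood of y_t, averaged over the sampled states x_t
        log_p = -0.5 * np.log(2 * np.pi * r_std**2) - 0.5 * (y[t] - x) ** 2 / r_std**2
        expected_loglik += np.mean(log_p)
        if t < len(y) - 1:
            # Propagate each sample through the sparse-GP transition: the next
            # state is drawn conditioned on this trajectory's current state.
            f_mean, f_var = sparse_gp_marginal(x, z, m, S)
            f = f_mean + np.sqrt(f_var) * rng.standard_normal(n_samples)
            x = f + q_std * rng.standard_normal(n_samples)
    Kzz = rbf(z, z) + 1e-6 * np.eye(len(z))
    return expected_loglik - gauss_kl(m, S, Kzz)


rng = np.random.default_rng(0)
y = np.sin(np.linspace(0.0, 3.0, 20))           # toy observation sequence
z = np.linspace(-2.0, 2.0, 8)                    # hypothetical inducing inputs
m, S = np.zeros(8), 0.1 * np.eye(8)
print(elbo_estimate(y, z, m, S, 0.0, 0.1, 0.05, 0.1, n_samples=64, rng=rng))
```

In a training loop, the ELBO estimate would be maximized with respect to m, S, the kernel and noise parameters by stochastic gradient ascent. A scheme like this would typically also subsample (minibatch) sequences, which this sketch leaves out for brevity.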

| Author(s): | Andreas Doerr and Christian Daniel and Martin Schiegg and Duy Nguyen-Tuong and Stefan Schaal and Marc Toussaint and Sebastian Trimpe |

| Book Title: | Proceedings of the International Conference on Machine Learning (ICML) |

| Year: | 2018 |

| Month: | July |


| Bibtex Type: | Conference Paper (inproceedings) |

| Event Name: | International Conference on Machine Learning (ICML) |

| Event Place: | Stockholm, Sweden |

| State: | Published |


BibTeX

@inproceedings{doerr2018probabilistic,

title = {Probabilistic Recurrent State-Space Models},

booktitle = {Proceedings of the International Conference on Machine Learning (ICML)},

abstract = {State-space models (SSMs) are a highly expressive model class for learning patterns in time-series data and for system identification. Deterministic versions of SSMs (e.g., LSTMs) have proved extremely successful in modeling complex time-series data. Fully probabilistic SSMs, however, are often hard to train, even for smaller problems. To overcome this limitation, we propose a scalable initialization and training algorithm based on doubly stochastic variational inference and Gaussian processes. In contrast to related approaches, the proposed variational approximation fully captures the temporal correlations of the latent state, which allows for robust training.},

month = jul,

year = {2018},

slug = {probabilistic-recurrent-state-space-models},

author = {Doerr, Andreas and Daniel, Christian and Schiegg, Martin and Nguyen-Tuong, Duy and Schaal, Stefan and Toussaint, Marc and Trimpe, Sebastian},

month_numeric = {7}

}