Code & Data

Perceiving Systems

PS:License 1.0

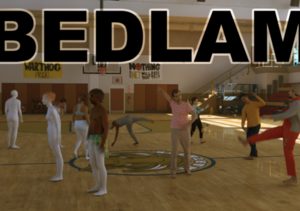

BEDLAM: A Synthetic Dataset of Bodies Exhibiting Detailed Lifelike Animated Motion

Synthetic image dataset (1.6 million images) with bodies in motion in realistic environments and trained HPS regressors using only this data.

BEDLAM dataset contains monocular RGB videos with ground-truth 3D bodies in SMPL-X format. It includes a diversity of body shapes, motions, skin tones, hair, and clothing. The clothing is realistically simulated on the moving bodies using commercial clothing physics simulation. We render varying numbers of people in realistic scenes with varied lighting and camera motions. We then train various HPS regressors using BEDLAM and achieve state-of-the-a...

Perceiving Systems

PS:License 1.0

SUPR Convertor

A utility tool to convert SMPL-X model parameters to SUPR model parameters.

Software Workshop

Perceiving Systems

PS:License 1.0

Mesh Annotator

Contact-labeling application for selecting body regions or mesh vertices on Amazon Mechanical Turk.

Perceiving Systems

PS:License 1.0

TEACH: Temporal Action Compositions for 3D Humans

Official PyTorch implementation of the paper "TEACH: Temporal Action Compositions for 3D Humans".

Perceiving Systems

PS:License 1.0

EMOCA: Emotion Driven Monocular Face Capture and Animation

EMOCA takes a single in-the-wild image as input and reconstructs a 3D face with sufficient facial expression detail to convey the emotional state of the input image. EMOCA advances the state-of-the-art monocular face reconstruction in-the-wild, putting emphasis on accurate capture of emotional content.

The repository provides:

* An approach to reconstruct animatable 3D faces from in-the-wild images that is capable of recovering facial expressions that convey the correct emotional state.

* A novel perceptual emotion-consistency loss that rewards the accurac...

Software Workshop

Perceiving Systems

PS:License 1.0

Mechanical Turk Manager

Web application for interfacing with Amazon Mechanical Turk.

Perceiving Systems

PS:License 1.0

DIGIT

DIGIT estimates the 3D poses of two interacting hands from a single RGB image. This repo provides the training, evaluation, and demo code for the project in PyTorch Lightning.

Perceiving Systems

PS:License 1.0

SAMP: Stochastic Scene-Aware Motion Prediction

SAMP generates a 3D human avatar that navigates a novel scene to achieve goals. The software includes runtime Unity code and training code as well as training data.

Perceiving Systems

PS:License 1.0

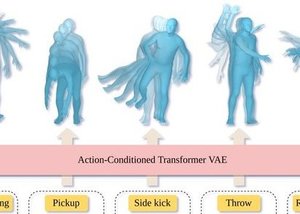

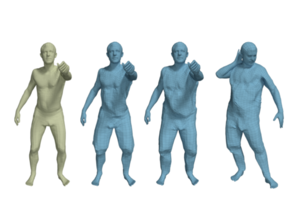

ACTOR: Action-Conditioned 3D Human Motion Synthesis with Transformer VAE

ACTOR learns an action-aware latent representation for human motions by training a generative variational autoencoder (VAE). By sampling from this latent space and querying a certain duration through a series of positional encodings, we synthesize variable-length motion sequences conditioned on a categorical action. ACTOR uses a transformer-based architecture to encode and decode a sequence of parametric SMPL human body models estimated from action recognition datasets.
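The idea of querying a duration through a series of positional encodings can be illustrated with a minimal sketch. It assumes the standard sinusoidal encoding, which the description above does not specify; `sinusoidal_encoding` and `duration_queries` are hypothetical names, not part of the ACTOR code. The number of encodings handed to the decoder determines how many frames would be generated.

```python
import math

def sinusoidal_encoding(position, dim):
    """Standard sinusoidal positional encoding for one time step (assumed form)."""
    enc = []
    for i in range(0, dim, 2):
        freq = 1.0 / (10000 ** (i / dim))
        enc.append(math.sin(position * freq))
        enc.append(math.cos(position * freq))
    return enc[:dim]

def duration_queries(num_frames, dim=8):
    """One query vector per requested frame; the list length sets the duration."""
    return [sinusoidal_encoding(t, dim) for t in range(num_frames)]

# Requesting a shorter or longer motion only changes how many queries are built.
short_queries = duration_queries(30)
long_queries = duration_queries(60)
```

In a full model these queries would be decoded together with a latent sample and an action label; that part is omitted here.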

Perceiving Systems

PS:License 1.0

ROMP: Monocular, One-Stage, Regression of Multiple 3D People

ROMP estimates multiple 3D people in an image in real time. Unlike prior methods, it does not first detect the people and then estimate their pose. Instead, ROMP estimates a heatmap corresponding to the centers of people together with the parameters of the SMPL model at these centers. The code includes real-time demos.
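The one-stage readout described above can be sketched as follows. This is a toy illustration rather than ROMP's implementation: the threshold, the 8-neighbor peak test, and the three-value vectors standing in for SMPL parameters are all assumptions, and `find_centers` and `read_parameters` are hypothetical names.

```python
def find_centers(heatmap, threshold=0.5):
    """Return (row, col) cells above threshold that beat all 8 neighbors."""
    rows, cols = len(heatmap), len(heatmap[0])
    centers = []
    for r in range(rows):
        for c in range(cols):
            value = heatmap[r][c]
            if value < threshold:
                continue
            neighbors = [
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            if all(value > n for n in neighbors):
                centers.append((r, c))
    return centers

def read_parameters(param_map, centers):
    """Sample the per-pixel parameter map at each detected center."""
    return [param_map[r][c] for r, c in centers]

# Toy center heatmap with two people.
heatmap = [
    [0.1, 0.9, 0.2, 0.0],
    [0.1, 0.3, 0.1, 0.0],
    [0.0, 0.1, 0.4, 0.2],
    [0.0, 0.2, 0.8, 0.3],
]
# Toy parameter map: a 3-value vector per pixel stands in for SMPL parameters.
param_map = [[[r, c, r + c] for c in range(4)] for r in range(4)]

centers = find_centers(heatmap)
people = read_parameters(param_map, centers)
```

Each entry of `people` corresponds to one detected center, so no separate person detector is involved.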

Perceiving Systems

PS:License 1.0

SNARF: Differentiable Forward Skinning for Animating Non-rigid Neural Implicit Shapes

Official code release for the ICCV 2021 paper "SNARF: Differentiable Forward Skinning for Animating Non-rigid Neural Implicit Shapes". We propose a novel forward skinning module to animate neural implicit shapes with good generalization to unseen poses. Given scans of a person, SNARF creates an animatable implicit avatar. Training and animation code is included.

Perceiving Systems

PS:License 1.0

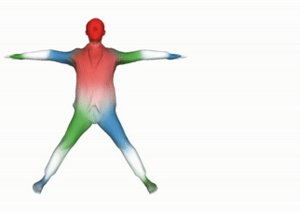

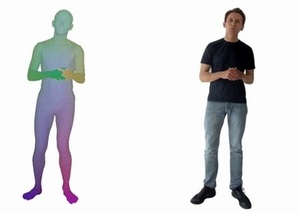

Learning to Regress Bodies from Images using Differentiable Semantic Rendering

DSR uses semantic information in the form of clothing segmentation when learning to regress 3D human pose and shape from an image. The SMPL body should fit inside the clothing and match unclothed regions.

Perceiving Systems

PS:License 1.0

PARE: Part Attention Regressor for 3D Human Body Estimation

PARE regresses 3D human pose and shape using part-guided attention. It learns to be robust to occlusion, making it much more practical than recent methods when applied to real images.

Perceiving Systems

PS:License 1.0

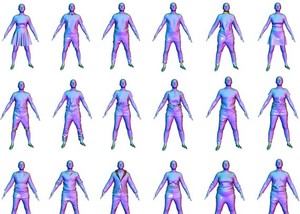

ReSynth Dataset

The ReSynth Dataset is a synthetic dataset of 3D clothed humans in motion, created using physics-based simulation.

The dataset contains 24 outfits of diverse garment types, dressed on varied body shapes across both genders. All outfits are simulated using a consistent set of 20 motion sequences captured in the CAPE dataset.

We provide both the simulated high-resolution point clouds and the packed data that is ready to run with the model introduced in the ICCV 2021 paper "The Power of Points for Modeling Humans in Clothing". Check out the dataset website for more information.

Perceiving Systems

PS:License 1.0

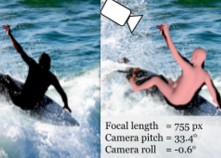

SPEC dataset: Pano360, SPEC-SYN, SPEC-MTP

The Pano360 dataset consists of 35K panoramic images, of which 34K are from Flickr and 1K are rendered from photorealistic 3D scenes. We use it to train CamCalib.

SPEC-SYN is a photorealistic synthetic dataset which has accurate ground-truth SMPL and camera annotations. We use it for both training and evaluating SPEC.

SPEC-MTP is a crowdsourced dataset consisting of real images, camera calibration and SMPL-X fits. We use it only for evaluation purposes in the experiment.

Perceiving Systems

PS:License 1.0

SPEC: Seeing People in the Wild with an Estimated Camera

SPEC is the first in-the-wild 3D HPS method that estimates the perspective camera from a single image and employs this to reconstruct 3D human bodies more accurately.

Perceiving Systems

PS:License 1.0

The Power of Points for Modeling Humans in Clothing

PoP (Power of Points) is a point-based model for generating high-fidelity dense point clouds of humans in clothing with pose-dependent geometry. It supports modeling multiple subjects of varied body shapes and different outfit types with a single model. At test-time, given a single, static scan, the model can animate it with plausible pose-dependent deformations.

Perceiving Systems

The MIT License

Active Domain Adaptation via Clustering Uncertainty-weighted Embeddings

Generalizing deep neural networks to new target domains is critical to their real-world utility. When labeling data from the target domain, it is desirable to select a maximally informative subset so that labeling is cost-effective (this is called Active Learning). The ADA-CLUE algorithm addresses the problem of Active Learning under a domain shift. The GitHub repo contains code to train models with the ADA-CLUE algorithm for multiple source and target domain shifts. Pre-trained models are also available.

Perceiving Systems

PS:License 1.0

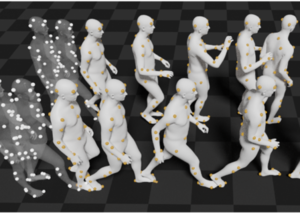

MoSh: Motion and Shape Capture from Sparse Markers

MoSh can fit surface models of humans, animals, and objects to marker-based motion capture (mocap) data with high accuracy, second only to that of 4D scan data. Fitting is completely automatic given labeled, clean mocap marker data. The labeling can also be made automatic using SOMA, whose code is also released here.

Perceiving Systems

PS:License 1.0

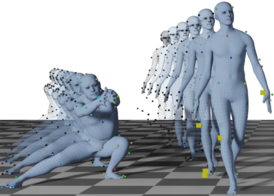

SOMA: Solving Optical Marker-Based MoCap Automatically

Marker-based optical motion capture (mocap) systems are a high-precision tool for capturing the motion of objects and bodies. However, the raw outputs of these systems are unordered sparse point clouds that have to be brought into correspondence with physical markers on the surface. SOMA is a machine learning-based tool that automates this process, replacing the human expert in the loop and thus enabling rapid production of high-quality data with applications in computer graphics and computer vision.

Perceiving Systems

PS:License 1.0

3DCP: 3D Contact Poses

The 3D Contact Poses dataset contains SMPL-X bodies fit to 3D scans of five subjects in various self-contact poses, as well as self-contact optimised meshes from the AMASS motion capture dataset.

Perceiving Systems

PS:License 1.0

SAMP Dataset

SAMP dataset is high-quality MoCap data covering various sitting, lying down, walking, and running styles. We capture the motion of the body as well as the object.

Perceiving Systems

PS:License 1.0

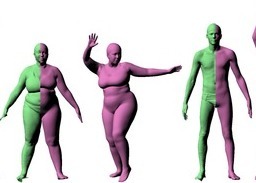

AGORA dataset

While the accuracy of 3D human pose estimation from images has steadily improved on benchmark datasets, the best methods still fail in many real-world scenarios. This suggests that there is a domain gap between current datasets and common scenes containing people. To evaluate the current state-of-the-art methods on more challenging images, and to drive the field to address new problems, we introduce AGORA, a synthetic dataset with high realism and highly accurate ground truth. We create around 14K training and 3K test images by rendering between 5 and 15 people per image using either image...

Perceiving Systems

PS:License 1.0

SCALE: Modeling Clothed Humans with a Surface Codec of Articulated Local Elements

SCALE learns a representation of 3D humans in clothing that generalizes to new body poses. SCALE is a point-based representation based on a collection of local surface elements. The code enables people to train and animate SCALE models and includes examples from the CAPE dataset.

Perceiving Systems

PS:License 1.0

SMPLify-XMC: On Self-Contact and Human Pose

SMPLify-XMC is a SMPLify-X optimization framework with Mimicked Contact. It fits a SMPL-X body to an image given (1) 2D keypoints, (2) a known 3D body pose and self-contact, and (3) gender, height, and weight as input. SMPLify-XMC is used in the MTP (Mimic-The-Pose) data collection pipeline to create near ground-truth SMPL-X parameters and self-contact for each in-the-wild image.

Perceiving Systems

PS:License 1.0

SCANimate: Weakly Supervised Learning of Skinned Clothed Avatar Networks

SCANimate is an end-to-end trainable framework that takes raw 3D scans of a clothed human and turns them into an animatable avatar. These avatars are driven by pose parameters and have realistic clothing that moves and deforms naturally. SCANimate uses an implicit shape representation and does not rely on a customized mesh template or surface mesh registration.

Perceiving Systems

PS:License 1.0

BABEL: Bodies, Action and Behavior with English Labels

The BABEL dataset consists of labels that describe the action being performed in a mocap sequence. There are two types of labels: sequence labels that describe the actions being performed in the entire sequence, and fine-grained frame labels that describe the actions being performed in each frame. The mocap sequences in BABEL are derived from the AMASS dataset.

The GitHub repo contains helper code that loads and filters the data based on labels. It also contains training code, features, and pre-trained models that perform the task of action recognition on the BABEL dataset.
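As a rough illustration of the two label types and of label-based filtering, here is a minimal sketch over a hypothetical in-memory layout. The field names (`seq_labels`, `frame_labels`), the sequence IDs, and both helper functions are assumptions made for this example; they are not the format of the BABEL files or the API of the helper code in the repo.

```python
# Hypothetical layout: one dict per mocap sequence (not the real BABEL schema).
sequences = [
    {"id": "seq_001", "seq_labels": ["walk"],
     "frame_labels": ["walk", "walk", "turn", "walk"]},
    {"id": "seq_002", "seq_labels": ["sit"],
     "frame_labels": ["stand", "sit", "sit"]},
    {"id": "seq_003", "seq_labels": ["walk", "wave"],
     "frame_labels": ["walk", "wave", "wave"]},
]

def filter_by_sequence_label(sequences, label):
    """Keep sequences whose sequence-level labels include the given action."""
    return [s for s in sequences if label in s["seq_labels"]]

def frames_with_label(sequence, label):
    """Return indices of the frames annotated with the given action."""
    return [i for i, name in enumerate(sequence["frame_labels"]) if name == label]

walking = filter_by_sequence_label(sequences, "walk")
walking_ids = [s["id"] for s in walking]
walk_frames = frames_with_label(sequences[0], "walk")
```

Sequence labels answer "which sequences contain this action", while frame labels localize the action in time within one sequence.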

Perceiving Systems

PS:License 1.0

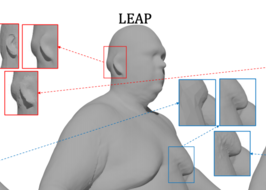

LEAP: Learning Articulated Occupancy of People

LEAP (LEarning Articulated occupancy of People) is a novel neural occupancy representation of the human body. It is effectively an implicit version of SMPL. Given a set of bone transformations (i.e., joint locations and rotations) and a query point in space, LEAP first maps the query point to a canonical space via learned linear blend skinning (LBS) functions and then efficiently queries the occupancy value via an occupancy network that models accurate identity- and pose-dependent deformations in the canonical space.
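The two-step query (map to canonical space, then evaluate occupancy) can be sketched with fixed stand-ins for the learned parts. In this sketch the skinning weights are given constants rather than network outputs, the bones are rigid transforms (rotation matrix plus translation), and `toy_occupancy` is a hypothetical unit sphere replacing the occupancy network. None of these names come from the LEAP code.

```python
def inverse_rigid(rotation, translation, point):
    """Invert x = R c + t for one bone, i.e. c = R^T (x - t)."""
    d = [p - t for p, t in zip(point, translation)]
    return [sum(rotation[i][j] * d[i] for i in range(3)) for j in range(3)]

def to_canonical(point, bones, weights):
    """Blend the per-bone inverse transforms with the skinning weights."""
    canonical = [0.0, 0.0, 0.0]
    for (rotation, translation), w in zip(bones, weights):
        c = inverse_rigid(rotation, translation, point)
        canonical = [a + w * b for a, b in zip(canonical, c)]
    return canonical

def toy_occupancy(canonical_point, radius=1.0):
    """Stand-in for the occupancy network: 1.0 inside a sphere, else 0.0."""
    inside = sum(v * v for v in canonical_point) <= radius ** 2
    return 1.0 if inside else 0.0

# One bone: rotate 90 degrees about z, then translate by (1, 0, 0).
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
bones = [(rot_z_90, [1.0, 0.0, 0.0])]
weights = [1.0]

posed_query = [1.0, 0.5, 0.0]
canonical_query = to_canonical(posed_query, bones, weights)
occupancy = toy_occupancy(canonical_query)
```

The point of the canonical space is that the occupancy function only has to be defined once, independent of the pose of the query.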

Perceiving Systems

PS:License 1.0

MOJO: We are More than Our Joints

MOJO (More than Our JOints) is a novel variational autoencoder with a latent DCT space that generates 3D human motions from latent frequencies. MOJO preserves the full temporal resolution of the input motion, and sampling from the latent frequencies explicitly introduces high-frequency components into the generated motion. We note that motion prediction methods accumulate errors over time, resulting in joints or markers that diverge from true human bodies. To address this, we fit the SMPL-X body model to the predictions at each time step, projecting the solution back onto the space of valid...
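To make the notion of motion frequencies concrete, the sketch below applies an orthonormal DCT to one coordinate of a toy joint trajectory and inverts it. It operates directly on the trajectory, not on a learned latent space, so it illustrates only the transform and is not MOJO's model; the trajectory values are made up for the example.

```python
import math

def dct(signal):
    """Orthonormal DCT-II of a 1D sequence."""
    n = len(signal)
    coeffs = []
    for k in range(n):
        total = sum(x * math.cos(math.pi * (t + 0.5) * k / n)
                    for t, x in enumerate(signal))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * total)
    return coeffs

def idct(coeffs):
    """Inverse of the orthonormal DCT-II above."""
    n = len(coeffs)
    signal = []
    for t in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
            total += scale * c * math.cos(math.pi * (t + 0.5) * k / n)
        signal.append(total)
    return signal

# Toy trajectory: one coordinate of one joint over 8 frames.
trajectory = [0.0, 0.1, 0.25, 0.3, 0.28, 0.4, 0.55, 0.6]
coeffs = dct(trajectory)
reconstructed = idct(coeffs)

# Keeping only the lowest frequencies yields a smoother motion of equal length.
smoothed = idct(coeffs[:3] + [0.0] * (len(coeffs) - 3))
```

Because the transform keeps one coefficient per frame, the temporal resolution is preserved, and the high-frequency coefficients carry the fine detail that is lost in `smoothed`.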

Perceiving Systems

PS:License 1.0

SMPL-X for Blender and Unity

We facilitate the use of SMPL-X in popular third-party applications by providing dedicated add-ons for Blender and Unity.

The SMPL-X 1.1 model can now be quickly added as a textured skeletal mesh with a shape specific rig, as well as shape keys (blend shapes) for shape, expression and pose correctives. We also provide functionality to recalculate joint locations on shape change and proper activation of pose correctives after pose changes.

Perceiving Systems

PS:License 1.0

DECA: Learning an Animatable Detailed 3D Face Model from In-the-Wild Images

DECA reconstructs a 3D head model with detailed facial geometry from a single input image. The resulting 3D head model can be easily animated.

The main features:

* Reconstruction: produces head pose, shape, detailed face geometry, and lighting information from a single image.

* Animation: animate the face with realistic wrinkle deformations.

* Robustness: tested on facial images in unconstrained conditions. Our method is robust to various poses, illuminations and occlusions.

* Accurate: state-of-the-art 3D face shape reconstruction on the NoW Challenge benchmark dataset.

Perceiving Systems

PS:License 1.0

SMPLpix: Neural Avatars from 3D Human Models

SMPLpix is a neural rendering framework that combines deformable 3D models such as SMPL-X with the power of image-to-image translation frameworks (aka pix2pix models). Create a 3D avatar from a video and then render it in new poses.

Perceiving Systems

PS:License 1.0

POSA: Populating 3D Scenes by Learning Human-Scene Interaction

POSA takes a 3D body and automatically places it in a 3D scene in a semantically meaningful way.

This repository contains the training, random sampling, and scene population code used for the experiments in POSA.

The code defines a novel representation of human-scene-interaction that is body centric.

This can be exploited for 3D human tracking from video to model likely interactions between a body and the scene.

Perceiving Systems

PS:License 1.0

NoW Evaluation: Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

Code: We provide the evaluation code for the NoW challenge proposed in the RingNet paper. Please check the repository, which is self-explanatory.

NoW Benchmark Dataset and Challenge: Please check the external link to download the data and participate in the challenge.

Perceiving Systems

PS:License 1.0

GIF: Generative Interpretable Faces

GIF is a photorealistic generative face model with explicit control over 3D geometry (parametrized like FLAME), appearance, and lighting. Training and animation code is provided.

Perceiving Systems

PS:License 1.0

Learning a statistical full spine model from partial observations

The study of the morphology of the human spine has attracted research attention for its many potential applications, such as image segmentation, bio-mechanics or pathology detection. However, as of today there is no publicly available statistical model of the 3D surface of the full spine. This is mainly due to the lack of openly available 3D data where the full spine is imaged and segmented. In this paper we propose to learn a statistical surface model of the full-spine (7 cervical, 12 thoracic and 5 lumbar vertebrae) from partial and incomplete views of the spine. In order to deal with the...

Perceiving Systems

PS:License 1.0

STAR: A Sparse Trained Articulated Human Body Regressor

We propose STAR, a realistic human body model with a learned set of sparse and spatially local pose corrective blend shapes. STAR addresses many of the drawbacks of the widely used SMPL model despite having an order of magnitude fewer parameters. The code released includes the model implementation in TensorFlow, PyTorch, and Chumpy.

Perceiving Systems

PS:License 1.0

GRAB: A Dataset of Whole-Body Human Grasping of Objects

Training computers to understand, model, and synthesize human grasping requires a rich dataset containing complex 3D object shapes, detailed contact information, hand pose and shape, and the 3D body motion over time. While "grasping" is commonly thought of as a single hand stably lifting an object, we capture the motion of the entire body and adopt the generalized notion of "whole-body grasps". Thus, we collect a new dataset, called GRAB (GRasping Actions with Bodies), of whole-body grasps, containing full 3D shape and pose sequences of 10 subjects interacting with 51 everyday objects of va...

Perceiving Systems

PS:License 1.0

GrabNet: Generating 3D hand grasps for unseen 3D objects

There is significant interest in the community in training models to grasp 3D objects. This is important, for example, for interacting human avatars, as well as for robotic grasping by imitating human grasping. We use our GRAB dataset (see entry above) of whole-body grasps and extract hand-only information. We then train our deep network, GrabNet, on this data to generate 3D hand grasps for unseen 3D objects, using our hand model MANO. We provide both the GrabNet model and its training dataset for research purposes.

Perceiving Systems

PS:License 1.0

ExPose: EXpressive POse and Shape rEgression

Training models to quickly and accurately estimate expressive humans (SMPL-X) from an RGB image, including the main body, face and hands, is challenging for a number of reasons. First, there exists no dataset with paired images and ground truth SMPL-X annotations. Second, the face and hands take up far fewer pixels than the main body, making inference harder. Third, full body images are further downsampled to use with contemporary methods.

Here we provide the first dataset of 32,617 pairs of: (1) an in-the-wild RGB image, and (2) an expressive whole-body 3D human reconstruction (SM...

Perceiving Systems

PS:License 1.0

Generating 3D People in Scenes without People

Our PSI system aims to generate 3D people in a 3D scene from the view of an agent. The system takes as input the depth and the semantic segmentation from a camera view, and generates plausible SMPL-X body meshes, which are naturally posed in the 3D scene. Scripts for data pre-processing, training, fitting, evaluation and visualization, as well as the data, are included.

Perceiving Systems

PS:License 1.0

CAPE: Dressing SMPL

CAPE provides a "dressed SMPL" body model. We train CAPE as a conditional Mesh-VAE-GAN to learn the clothing deformation from the SMPL body model, making clothing an additional term on SMPL. CAPE is conditioned on both pose and clothing type, giving the ability to draw samples of clothing to dress different body shapes in a variety of styles and poses.

Perceiving Systems

PS:License 1.0

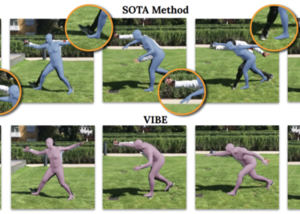

VIBE: Video Inference for Human Body Pose and Shape Estimation

VIBE is a neural network method that takes video of a human in motion as input and outputs the 3D pose and shape of the body in every frame. The output is in SMPL body format and represents the state of the art at the time of release. The method runs quickly and can process arbitrary sequence lengths. The trained model is available now and training code will be provided later.

Perceiving Systems

The MIT License

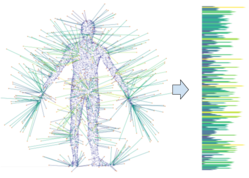

Efficient Learning on Point Clouds with Basis Point Sets

Basis Point Set (BPS) is a simple and efficient method for encoding 3D point clouds into fixed-length representations.

It is based on a simple idea: select k fixed points in space and compute vectors from these basis points to the nearest points in a point cloud; use these vectors (or simply their norms) as features.

The basis points are kept fixed for all the point clouds in the dataset, providing a fixed representation of every point cloud as a vector. This representation can then be used as input to arbitrary machine learning methods, in particular it can be used as input to off-...
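The encoding described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the released BPS library: the basis points are drawn uniformly inside a unit ball with a fixed seed, and `sample_basis_points` and `bps_encode` are hypothetical names chosen for this example.

```python
import math
import random

def sample_basis_points(k, dim=3, radius=1.0, seed=0):
    """Draw k fixed basis points uniformly inside a ball (rejection sampling)."""
    rng = random.Random(seed)
    origin = [0.0] * dim
    basis = []
    while len(basis) < k:
        p = [rng.uniform(-radius, radius) for _ in range(dim)]
        if math.dist(p, origin) <= radius:
            basis.append(p)
    return basis

def bps_encode(cloud, basis, use_norms=True):
    """One feature per basis point: distance (or vector) to its nearest cloud point."""
    features = []
    for b in basis:
        nearest = min(cloud, key=lambda p: math.dist(p, b))
        if use_norms:
            features.append(math.dist(nearest, b))
        else:
            features.append([n - c for n, c in zip(nearest, b)])
    return features

# The same basis is reused for every cloud, so clouds of different sizes
# map to feature vectors of the same length.
basis = sample_basis_points(k=8)
cloud_a = [[0.1, 0.2, 0.0], [0.5, -0.3, 0.4], [-0.6, 0.1, 0.2]]
cloud_b = cloud_a + [[0.0, 0.0, 0.9], [0.3, 0.3, 0.3]]

feat_a = bps_encode(cloud_a, basis)
feat_b = bps_encode(cloud_b, basis)
delta_a = bps_encode(cloud_a, basis, use_norms=False)
```

The brute-force nearest-neighbor search is quadratic and only suitable for small examples; a spatial index would be the usual choice for real point clouds.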

Perceiving Systems

The MIT License

Markerless Outdoor Human Motion Capture Using Multiple Autonomous Micro Aerial Vehicles

Capturing human motion in natural scenarios means moving motion capture out of the lab and into the wild. Typical approaches rely on fixed, calibrated cameras and reflective markers on the body, significantly limiting the motions that can be captured. To make motion capture truly unconstrained, we describe the first fully autonomous outdoor capture system based on flying vehicles. We use multiple micro aerial vehicles (MAVs), each equipped with a monocular RGB camera, an IMU, and a GPS receiver module. These detect the person, optimize their position, and localize themselves approximately. ...

Perceiving Systems

PS:License 1.0

SPIN: Human pose and shape from an image

SPIN is a state-of-the-art deep network for regressing SMPL body shape and pose parameters directly from an image. SPIN uses a novel training method that combines a bottom-up deep network with a top-down, model-based, fitting method. SMPLify model fitting is used in the loop with the DNN training to provide SMPL parameters used in the training loss. Code is available.

Perceiving Systems

PS:License 1.0

AMASS Dataset

AMASS is a large dataset of human motions: 45 hours and growing. AMASS enables the training of deep neural networks to model human motion. AMASS unifies multiple datasets by fitting the SMPL body model to mocap markers. The dataset includes SMPL-H body shapes and poses as well as DMPL soft tissue motions. If you want to include your own mocap sequences in the dataset, please contact us. The release includes tutorial code for training DNNs with AMASS. The MoSh++ code is also now available. We also release SOMA, our complementary tool for automatic mocap labeling.

Perceiving Systems

The MIT License

Three-D Safari: Learning to Estimate Zebra Pose, Shape, and Texture from Images "In the Wild"

We present the first method to perform automatic 3D pose, shape and texture capture of animals from images acquired in-the-wild. In particular, we focus on the problem of capturing 3D information about Grevy's zebras from a collection of images. We integrate the recent SMAL animal model into a network-based regression pipeline, which we train end-to-end on synthetically generated images with pose, shape, and background variation.

We couple 3D pose and shape prediction with the task of texture synthesis, obtaining a full texture map of the animal from a single image.

The predicted textur...

Perceiving Systems

The MIT License

Competitive Collaboration

Competitive Collaboration is a generic framework in which networks learn to collaborate and compete, thereby achieving specific goals. Competitive Collaboration is a three-player game consisting of two players competing for a resource that is regulated by a third player, the moderator. This framework is similar in spirit to expectation-maximization (EM) but is formulated for neural network training.

Perceiving Systems

PS:License 1.0

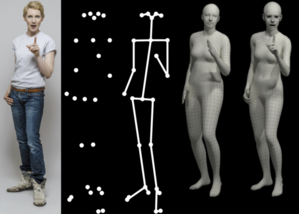

Expressive Body Capture: 3D Hands, Face, and Body from a Single Image

SMPL-X is a major update to the SMPL body model that adds an expressive face and fully articulated hands. If you use SMPL, this is a straightforward upgrade that improves realism and allows you to capture facial expressions and gestures. We also provide SMPLify-X to estimate SMPL-X from a single image. This is a major update to SMPLify in several senses: (1) we detect 2D features corresponding to the face, hands, and feet and fit the full SMPL-X model to these; (2) we train a new neural network pose prior using a large MoCap dataset; (3) we define a new interpenetration penalty that i...