TEMOS: Generating Diverse Human Motions from Textual Descriptions

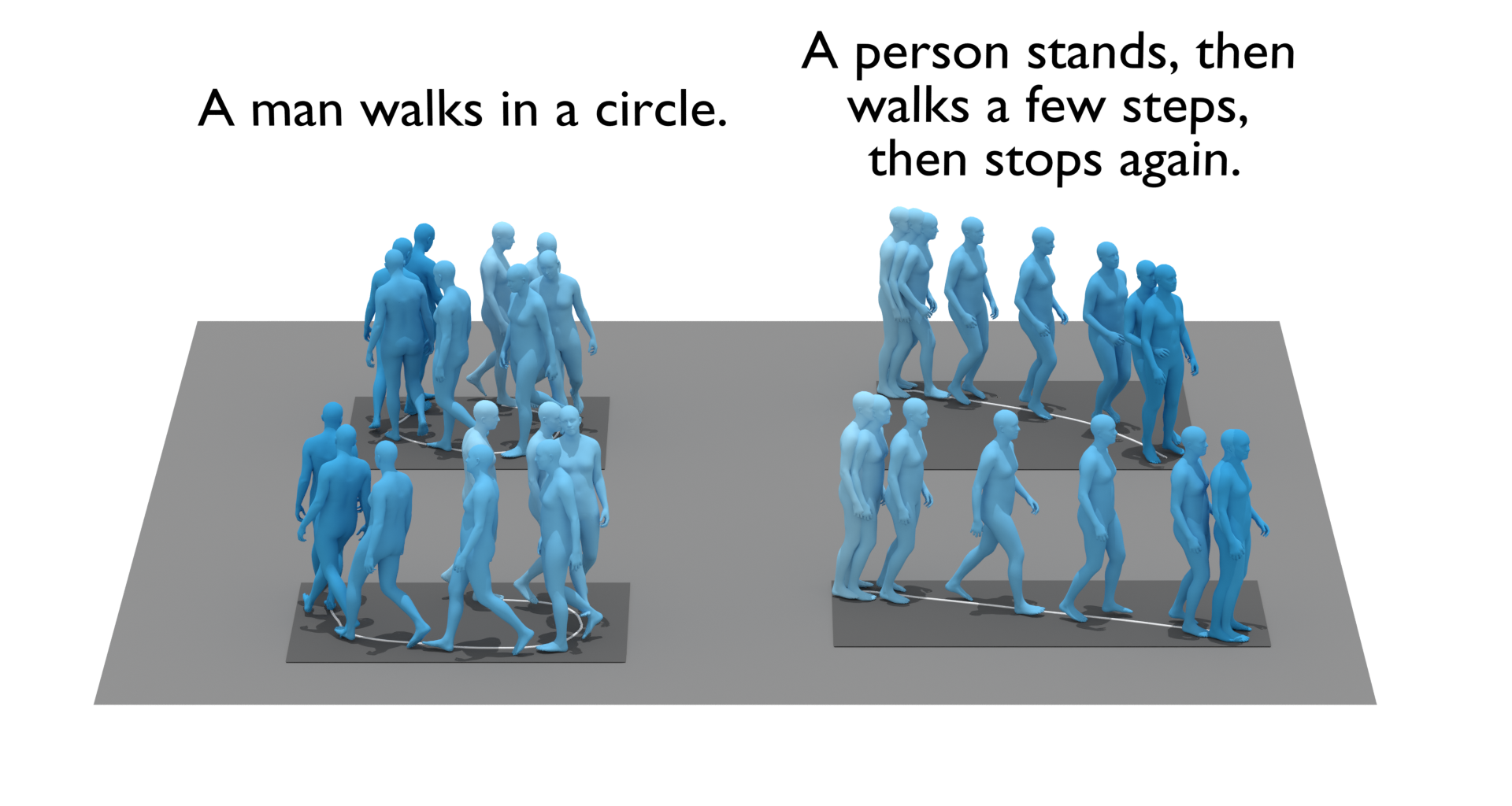

We address the problem of generating diverse 3D human motions from textual descriptions. This challenging task requires joint modeling of both modalities: understanding and extracting useful human-centric information from the text, and then generating plausible and realistic sequences of human poses. In contrast to most previous work which focuses on generating a single, deterministic, motion from a textual description, we design a variational approach that can produce multiple diverse human motions. We propose TEMOS, a text-conditioned generative model leveraging variational autoencoder (VAE) training with human motion data, in combination with a text encoder that produces distribution parameters compatible with the VAE latent space. We show the TEMOS framework can produce both skeleton-based animations as in prior work, as well more expressive SMPL body motions. We evaluate our approach on the KIT Motion-Language benchmark and, despite being relatively straightforward, demonstrate significant improvements over the state of the art. Code and models are available on our webpage.

| Award: | (Oral) |

| Author(s): | Petrovich, Mathis and Black, Michael J. and Varol, Gül |

| Book Title: | European Conference on Computer Vision (ECCV 2022) |

| Year: | 2022 |

| Month: | October |

| Publisher: | Springer International Publishing |

| Project(s): | |

| Bibtex Type: | Conference Paper (inproceedings) |

| DOI: | 10.1007/978-3-031-20047-2_28 |

| Event Name: | ECCV 2022 |

| Event Place: | Tel Aviv, Israel |

| State: | Published |

| URL: | https://mathis.petrovich.fr/temos |

| Award Paper: | Oral |

| Electronic Archiving: | grant_archive |

| ISBN: | 978-3-031-20046-5 |

| Links: | |

BibTex

@inproceedings{TEMOS:2022,

title = {{TEMOS}: Generating Diverse Human Motions from Textual Descriptions},

aword_paper = {Oral},

booktitle = {European Conference on Computer Vision (ECCV 2022)},

abstract = {We address the problem of generating diverse 3D human motions from textual descriptions. This challenging task requires joint modeling of both modalities: understanding and extracting useful human-centric information from the text, and then generating plausible and realistic sequences of human poses. In contrast to most previous work which focuses on generating a single, deterministic, motion from a textual description, we design a variational approach that can produce multiple diverse human motions. We propose TEMOS, a text-conditioned generative model leveraging variational autoencoder (VAE) training with human motion data, in combination with a text encoder that produces distribution parameters compatible with the VAE latent space. We show the TEMOS framework can produce both skeleton-based animations as in prior work, as well more expressive SMPL body motions. We evaluate our approach on the KIT Motion-Language benchmark and, despite being relatively straightforward, demonstrate significant improvements over the state of the art. Code and models are available on our webpage.},

publisher = {Springer International Publishing},

month = oct,

year = {2022},

slug = {temos-2022},

author = {Petrovich, Mathis and Black, Michael J. and Varol, G\"{u}l},

url = {https://mathis.petrovich.fr/temos},

month_numeric = {10}

}