TMR: Text-to-Motion Retrieval Using Contrastive 3D Human Motion Synthesis

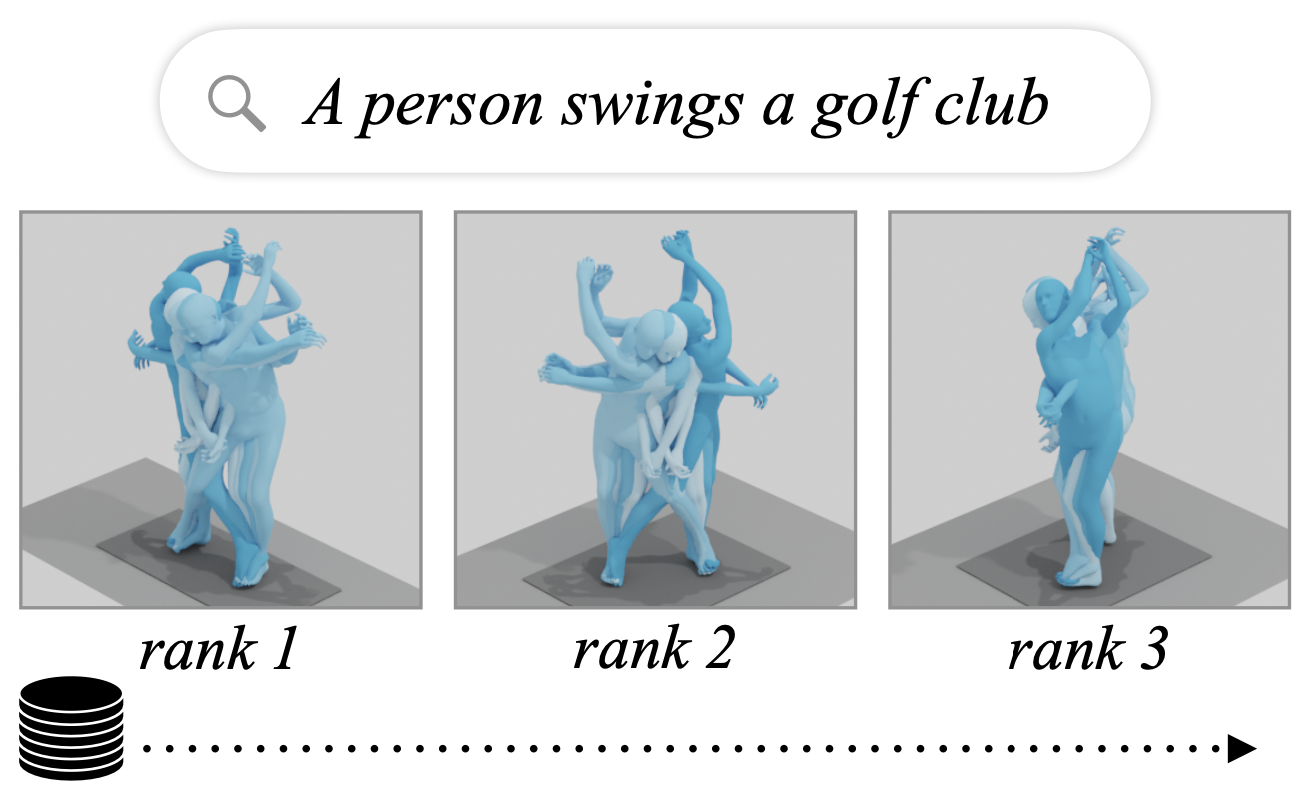

In this paper, we present TMR, a simple yet effective approach for text-to-3D human motion retrieval. While previous work has only treated retrieval as a proxy evaluation metric, we tackle it as a standalone task. Our method extends the state-of-the-art text-to-motion synthesis model TEMOS, and incorporates a contrastive loss to better structure the cross-modal latent space. We show that maintaining the motion generation loss, along with the contrastive training, is crucial for obtaining good performance. We introduce a benchmark for evaluation and provide an in-depth analysis by reporting results on several protocols. Our extensive experiments on the KIT-ML and HumanML3D datasets show that TMR outperforms prior work by a significant margin, for example reducing the median rank from 54 to 19. Finally, we showcase the potential of our approach on moment retrieval. Our code and models are publicly available.
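The abstract relies on two technical ingredients: a contrastive loss that pulls matching text and motion embeddings together in a shared latent space, and rank-based retrieval metrics such as the median rank (lower is better). The sketch below illustrates both in plain Python, under stated assumptions. It uses a generic symmetric InfoNCE loss over cosine similarities with a hypothetical temperature of 0.1, and it assumes that text i and motion i form the positive pair in a batch. All function names are hypothetical; this is not the released TMR code, and it omits the TEMOS motion generation losses that TMR keeps alongside the contrastive term.

```python
import math
from statistics import median


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def similarity_matrix(text_embs, motion_embs):
    """S[i][j] = cosine similarity between text embedding i and motion embedding j."""
    return [[cosine(t, m) for m in motion_embs] for t in text_embs]


def log_softmax_at(row, idx):
    """log(softmax(row)[idx]), computed stably by subtracting the row maximum."""
    mx = max(row)
    log_z = mx + math.log(sum(math.exp(x - mx) for x in row))
    return row[idx] - log_z


def symmetric_infonce(sim, temperature=0.1):
    """Generic symmetric InfoNCE (assumed form, not TMR's exact objective).

    The diagonal entries are the positive pairs; every other item in the
    batch acts as a negative. The loss averages both retrieval directions.
    """
    n = len(sim)
    logits = [[s / temperature for s in row] for row in sim]
    # text -> motion: softmax over each row
    t2m = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # motion -> text: softmax over each column
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    m2t = -sum(log_softmax_at(cols[j], j) for j in range(n)) / n
    return (t2m + m2t) / 2


def text_to_motion_ranks(sim):
    """1-based rank of the ground-truth motion i for each text query i.

    Ties are resolved optimistically: only strictly higher scores count.
    """
    return [1 + sum(1 for s in row if s > row[i]) for i, row in enumerate(sim)]


def median_rank(sim):
    """Median over queries of the ground-truth rank (lower is better)."""
    return median(text_to_motion_ranks(sim))


def recall_at_k(sim, k):
    """Fraction of queries whose ground-truth motion is ranked in the top k."""
    ranks = text_to_motion_ranks(sim)
    return sum(1 for r in ranks if r <= k) / len(ranks)


if __name__ == "__main__":
    # Toy 3x3 similarity matrix (hypothetical numbers): row i is text query i,
    # column j is motion j, and the correct motion for text i is motion i.
    sim = [[0.2, 0.9, 0.1],
           [0.5, 0.6, 0.3],
           [0.1, 0.2, 0.8]]
    print(text_to_motion_ranks(sim))      # [2, 1, 1]
    print(median_rank(sim))               # 1
    print(round(recall_at_k(sim, 1), 3))  # 0.667
    print(symmetric_infonce(sim) > 0)     # True
```

Using the same similarity matrix for both training (through the loss) and evaluation (through the ranks) mirrors the idea in the abstract: a better-structured latent space places each text's matching motion nearer the top of the ranking, which lowers the median rank.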

| Field | Value |
| --- | --- |
| Author(s) | Petrovich, Mathis and Black, Michael J. and Varol, Gül |
| Book Title | Proc. International Conference on Computer Vision (ICCV) |
| Pages | 9488–9497 |
| Year | 2023 |
| Month | October |
| BibTeX Type | Conference Paper (inproceedings) |
| Event Name | International Conference on Computer Vision 2023 |
| Event Place | Paris, France |
| State | Published |
| URL | https://mathis.petrovich.fr/tmr |

BibTeX

@inproceedings{TMR:2023,
  title = {{TMR}: Text-to-Motion Retrieval Using Contrastive {3D} Human Motion Synthesis},
  booktitle = {Proc. International Conference on Computer Vision (ICCV)},
  abstract = {In this paper, we present TMR, a simple yet effective approach for text to 3D human motion retrieval. While previous work has only treated retrieval as a proxy evaluation metric, we tackle it as a standalone task. Our method extends the state-of-the-art text-to-motion synthesis model TEMOS, and incorporates a contrastive loss to better structure the cross-modal latent space. We show that maintaining the motion generation loss, along with the contrastive training, is crucial to obtain good performance. We introduce a benchmark for evaluation and provide an in-depth analysis by reporting results on several protocols. Our extensive experiments on the KIT-ML and HumanML3D datasets show that TMR outperforms the prior work by a significant margin, for example reducing the median rank from 54 to 19. Finally, we showcase the potential of our approach on moment retrieval. Our code and models are publicly available.},
  pages = {9488--9497},
  month = oct,
  year = {2023},
  slug = {tmr-2023},
  author = {Petrovich, Mathis and Black, Michael J. and Varol, G\"{u}l},
  url = {https://mathis.petrovich.fr/tmr},
  month_numeric = {10}
}