Body Talk: A New Crowdshaping Technology Uses Words to Create Accurate 3D Body Models

A breakthrough in our shared understanding, perception, and description of human body shape brings new alternatives to 3D body scanning

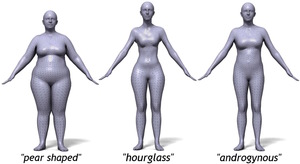

ANAHEIM, CALIFORNIA -- JULY 26, 2016 -- Researchers from the Max Planck Institute for Intelligent Systems and the University of Texas at Dallas revealed new crowdshaping technology at SIGGRAPH 2016 that creates accurate 3D body models from 2D photos using crowdsourced linguistic descriptions of body shape. The Body Talk system takes a single photo and produces 3D body shapes that look like the person and are accurate enough to size clothing. It does this with the help of 15 volunteers, who rate the body shape in the photo using 30 words or fewer. The researchers believe this technology has applications in online shopping, gaming, virtual reality, and healthcare.

“High-end scanners and their lower-cost alternatives have yet to become ubiquitous in consumer technology,” said Dr. Michael J. Black, Director of the Perceiving Systems Department at the Max Planck Institute for Intelligent Systems. “Capturing 3D body shape remains a challenge, and our goal is to make it easy and fun. Our research shows that people have a shared understanding of body shape that is expressed in our use of language.”

The system uses machine learning to discover this relationship between our verbal descriptions of bodies and their actual 3D shape. “Language provides rich descriptions of body features related to gender, physical strength, attractiveness, and health,” said Dr. Alice J. O’Toole, Professor in the School of Behavioral and Brain Sciences at the University of Texas at Dallas.

“What is surprising is that language captures 3D shape so accurately and that the collective judgment of the ‘crowd’ can be used to create realistic avatars. This process of crowdshaping opens up a practical way to scan bodies without a scanner,” said Dr. Stephan Streuber, the lead author of the study. In the paper, Body Talk: Crowdshaping Realistic 3D Avatars with Words, the researchers found that realistic 3D bodies could be created using as few as ten words. They demonstrated a “3D paparazzi” application that takes a single photo of a celebrity, has it rated by 15 people, and then creates a 3D avatar of the celebrity that can be animated. They further showed that they can extract measurements from the crowdshaped bodies with an accuracy sufficient for many clothing sizing applications. They even created 3D avatars of characters from books using just the written description.
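For technically minded readers, the idea of turning crowd ratings into a body shape can be illustrated with a minimal sketch. It is not the researchers' implementation. It assumes that each rater scores a photo on a few words, that the scores are averaged across raters, and that a linear map turns the averaged scores into shape coefficients. The function names, the two example words, the weights, and the bias values are all hypothetical; in a real system such a mapping would be learned from data rather than written by hand.

```python
from statistics import mean


def average_ratings(ratings_by_rater):
    """Average each word's score over all raters (the 'crowd' judgment)."""
    words = ratings_by_rater[0].keys()
    return {w: mean(r[w] for r in ratings_by_rater) for w in words}


def ratings_to_shape(avg_ratings, weights, bias):
    """Apply an assumed linear map from averaged word scores to shape coefficients."""
    return [
        b + sum(row[w] * avg_ratings[w] for w in avg_ratings)
        for row, b in zip(weights, bias)
    ]


# Toy data: three hypothetical raters scoring one photo on two words.
ratings = [
    {"tall": 4.0, "muscular": 2.0},
    {"tall": 5.0, "muscular": 3.0},
    {"tall": 3.0, "muscular": 1.0},
]

# Hypothetical weights and bias; one row of weights per shape coefficient.
weights = [
    {"tall": 0.5, "muscular": 0.0},
    {"tall": 0.0, "muscular": 1.0},
]
bias = [-1.0, 0.5]

avg = average_ratings(ratings)
shape = ratings_to_shape(avg, weights, bias)
print(avg, shape)
```

Averaging before mapping reflects the "collective judgment" described above: individual raters may disagree, but the mean score per word is a more stable input than any single rating.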

“When scanning technology is unavailable or inapplicable, Body Talk can be used to visualize mental representations of human body shape,” said Dr. Black. “This system can be used in many areas of science and medicine, for example in studies of body perception disorders, obesity, or cross-cultural attitudes about body shape.”

Learn more about the Body Talk system and try it yourself!

Full paper: Streuber, S., Quiros-Ramirez, M., Hill, M., Hahn, C., Zuffi, S., O’Toole, A., Black, M. J. “Body Talk: Crowdshaping Realistic 3D Avatars with Words,” ACM Transactions on Graphics (Proc. SIGGRAPH), 35(4):54:1-54:14, July 2016

About the Max Planck Institute for Intelligent Systems

The Max Planck Institute for Intelligent Systems (MPI-IS) was founded in 2011. Based in Tübingen and Stuttgart, Germany, the institute focuses on establishing the scientific foundations of perception, action and learning in artificially intelligent systems. The Perceiving Systems department of MPI-IS seeks mathematical and computational models that formalize the principles of perception and enable computers to understand the visual world and its motion. Computer vision research in the department includes: optical flow estimation from sequences of images; statistical modeling of natural images and image motion; articulated human motion estimation and tracking; estimation of human body shape from images and video; representation and detection of motion discontinuities; estimation of intrinsic scene properties.

About the Person Perception Lab at the University of Texas at Dallas

The Person Perception Lab is housed in the School of Behavioral and Brain Sciences at the University of Texas at Dallas. The lab focuses on face and person perception by humans and machines using methods from psychology, computational modeling, and functional neuroimaging. The goal of the research is to understand how the brain/mind represents the complex visual information conveyed by human faces and bodies, both in static and dynamic displays. This information supports tasks in perception, cognition, and social interaction, including person identification, action recognition, and the processing of emotional state. Lab projects include functional magnetic resonance imaging of neural representations of faces, bodies, and people; comparisons between human and machine accuracy in face recognition; studies of perceptual expertise in professional forensic face examiners; and experimental studies of how we describe and visually imagine faces and bodies.

About SIGGRAPH

SIGGRAPH is the world’s largest, most influential annual event in computer graphics and interactive techniques. It is run by the Association for Computing Machinery (ACM). Technical papers presented at SIGGRAPH are rigorously reviewed, and acceptance is competitive. Papers are published in the ACM Transactions on Graphics.