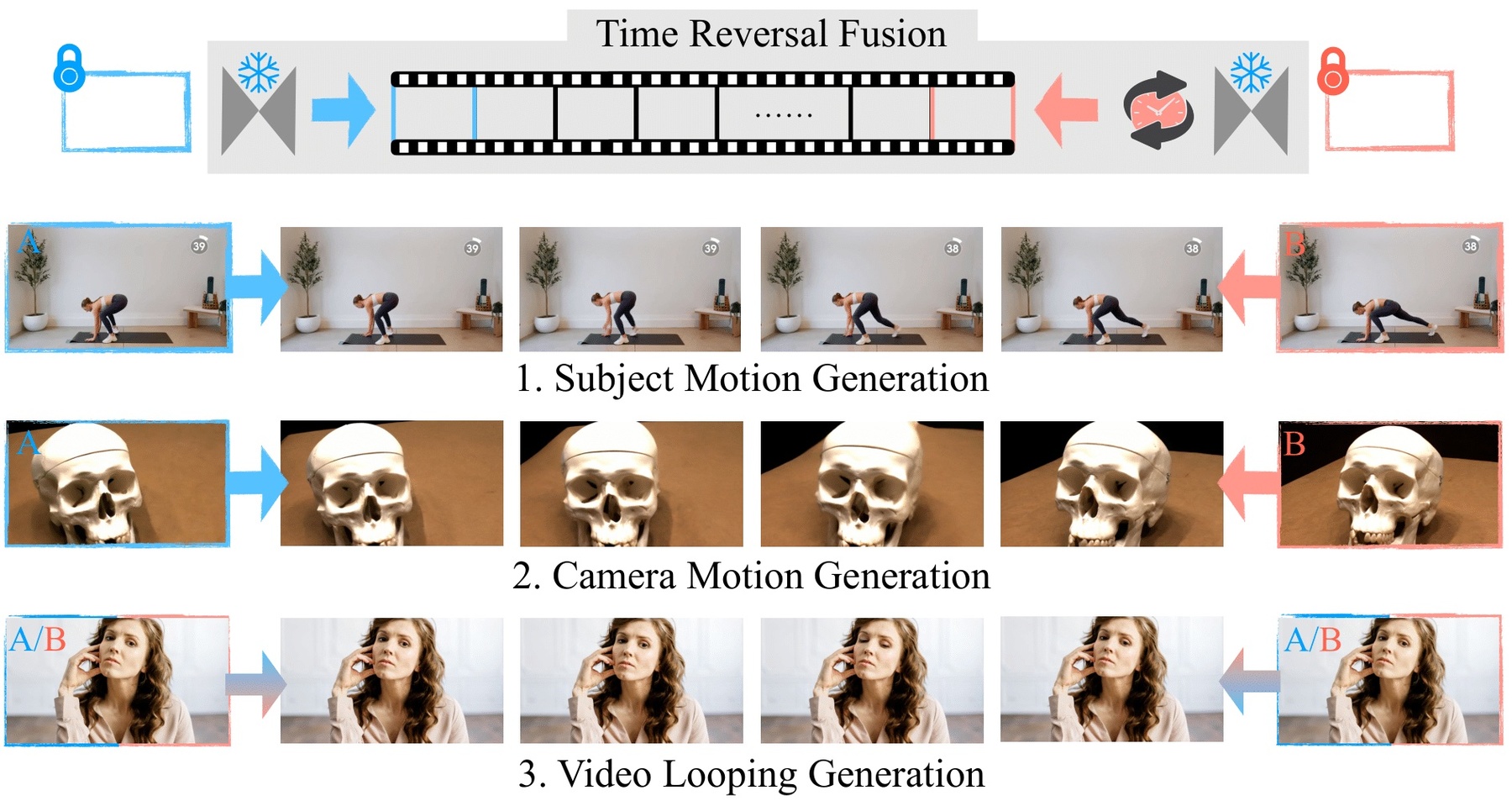

We introduce bounded generation as a generalized task: controlling video generation to synthesize arbitrary camera and subject motion based only on a given start frame and end frame. Our objective is to fully leverage the inherent generalization capability of an image-to-video model without additional training or fine-tuning of the original model. We achieve this through a new sampling strategy, which we call Time Reversal Fusion, that fuses the temporally forward and backward denoising paths conditioned on the start and end frames, respectively. The fused path yields a video that smoothly connects the two frames, producing inbetweening with faithful subject motion, novel views of static scenes, and seamless video loops when the two bounding frames are identical. We curate a diverse evaluation dataset of image pairs and compare against the closest existing methods. We find that Time Reversal Fusion outperforms related work on all subtasks, exhibiting the ability to generate complex motions and 3D-consistent views guided by the bounding frames.
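The fusion of forward and backward denoising paths can be illustrated with a deliberately tiny sketch. It assumes a hypothetical `denoise_step(latents, cond)` function standing in for one denoising step of an image-to-video model conditioned on the first frame of the clip it receives. `toy_denoise_step` below is a made-up stand-in, not a diffusion model, and each "frame" is a single float. The linear per-frame fusion weights are also an assumption for illustration; the paper's actual weighting schedule and any further sampling details are not reproduced here.

```python
import random
from typing import Callable, List

# A "video" is a list of per-frame latents; each frame is one float to keep the
# sketch tiny (a real model operates on image-shaped latent tensors).
Video = List[float]
# Hypothetical signature: one denoising step of an image-to-video model that is
# conditioned on the FIRST frame of the clip it is given.
DenoiseStep = Callable[[Video, float], Video]


def toy_denoise_step(latents: Video, cond: float,
                     rate: float = 0.5, decay: float = 0.7) -> Video:
    """Toy stand-in (NOT a diffusion model): pulls each frame toward the
    conditioning frame, with an influence that decays away from frame 0."""
    return [x + rate * decay**i * (cond - x) for i, x in enumerate(latents)]


def time_reversal_fusion(start: float, end: float, num_frames: int, num_steps: int,
                         denoise_step: DenoiseStep = toy_denoise_step,
                         seed: int = 0) -> Video:
    """Sketch of fusing a forward path (conditioned on `start`) with a
    time-reversed path (conditioned on `end`) at every denoising step."""
    if num_frames < 2:
        raise ValueError("need at least two frames")
    rng = random.Random(seed)
    latents = [rng.gauss(0.0, 1.0) for _ in range(num_frames)]  # initial noise
    # Assumed fusion weights: linear ramp from 1 (start frame) to 0 (end frame).
    weights = [1.0 - i / (num_frames - 1) for i in range(num_frames)]
    for _ in range(num_steps):
        # Forward path: ordinary denoising conditioned on the start frame.
        forward = denoise_step(latents, start)
        # Backward path: reverse time, condition on the end frame, reverse back.
        backward = denoise_step(latents[::-1], end)[::-1]
        # Fuse the two paths frame by frame.
        latents = [w * f + (1.0 - w) * b
                   for w, f, b in zip(weights, forward, backward)]
    return latents


if __name__ == "__main__":
    inbetween = time_reversal_fusion(start=0.0, end=1.0, num_frames=8, num_steps=30)
    loop = time_reversal_fusion(start=2.0, end=2.0, num_frames=8, num_steps=30)
    print([round(v, 3) for v in inbetween])
    print([round(v, 3) for v in loop])
```

Passing the same frame as `start` and `end` exercises the looping case from the abstract: both ends of the sampled clip are pulled toward the same frame. Reversing the latents, denoising, and reversing back is what lets a model that only understands "condition on the first frame" also respect the last one.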

| Field | Value |
|---|---|
| Author(s) | Feng, Haiwen and Ding, Zheng and Xia, Zhihao and Niklaus, Simon and Fernandez Abrevaya, Victoria and Black, Michael J. and Zhang, Xuaner |
| Book Title | European Conference on Computer Vision (ECCV 2024) |
| Pages | 378--395 |
| Year | 2024 |
| Month | September |
| Series | LNCS |
| Publisher | Springer Cham |
| BibTeX Type | Conference Paper (inproceedings) |
| DOI | https://doi.org/10.1007/978-3-031-73229-4_22 |
| Event Place | Milan, Italy |
| State | Published |
| URL | https://time-reversal.github.io/ |
| Electronic Archiving | grant_archive |

BibTeX

    @inproceedings{TRF_ECCV_24,
      title = {Explorative Inbetweening of Time and Space},
      booktitle = {European Conference on Computer Vision (ECCV 2024)},
      abstract = {We introduce bounded generation as a generalized task to control video generation to synthesize arbitrary camera and subject motion based only on a given start and end frame. Our objective is to fully leverage the inherent generalization capability of an image-to-video model without additional training or fine-tuning of the original model. This is achieved through the proposed new sampling strategy, which we call Time Reversal Fusion, that fuses the temporally forward and backward denoising paths conditioned on the start and end frame, respectively. The fused path results in a video that smoothly connects the two frames, generating inbetweening of faithful subject motion, novel views of static scenes, and seamless video looping when the two bounding frames are identical. We curate a diverse evaluation dataset of image pairs and compare against the closest existing methods. We find that Time Reversal Fusion outperforms related work on all subtasks, exhibiting the ability to generate complex motions and 3D-consistent views guided by bounded frames.},
      pages = {378--395},
      series = {LNCS},
      publisher = {Springer Cham},
      month = sep,
      year = {2024},
      doi = {10.1007/978-3-031-73229-4_22},
      slug = {trf_eccv_24},
      author = {Feng, Haiwen and Ding, Zheng and Xia, Zhihao and Niklaus, Simon and Fernandez Abrevaya, Victoria and Black, Michael J. and Zhang, Xuaner},
      url = {https://time-reversal.github.io/},
      month_numeric = {9}
    }