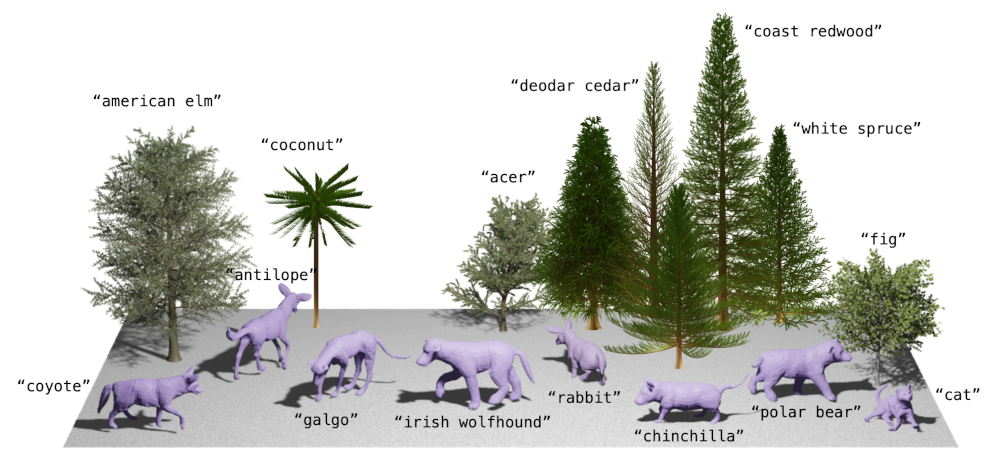

Many classical parametric 3D shape models exist, but creating novel shapes with such models requires expert knowledge of their parameters. For example, imagine creating a specific type of tree using procedural graphics or a new kind of animal from a statistical shape model. Our key idea is to leverage language to control such existing models to produce novel shapes. This involves learning a mapping between the latent space of a vision-language model and the parameter space of the 3D model, which we do using a small set of shape and text pairs. Our hypothesis is that mapping from language to parameters allows us to generate parameters for objects that were never seen during training. If the mapping between language and parameters is sufficiently smooth, then interpolation or generalization in language should translate appropriately into novel 3D shapes. We test our approach with two very different types of parametric shape models (quadrupeds and arboreal trees). We use a learned statistical shape model of quadrupeds and show that we can use text to generate new animals not present during training. In particular, we demonstrate state-of-the-art shape estimation of 3D dogs. This work also constitutes the first language-driven method for generating 3D trees. Finally, embedding images in the CLIP latent space enables us to generate animals and trees directly from images.
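The smoothness hypothesis above can be illustrated with a toy sketch. This is not the method used in the paper: the learned mapping is replaced here by a simple Gaussian-kernel weighted average, and the 2-D "embeddings", 3-D "shape parameters", and the names `kernel_map`, `lerp`, and `bandwidth` are all hypothetical stand-ins chosen for illustration. The sketch only shows that, when the mapping is smooth, a point interpolated between two training embeddings maps to a blend of their parameters.

```python
import math


def kernel_map(query, embeddings, params, bandwidth=0.5):
    """Map a query embedding to parameters with a Gaussian-kernel weighted
    average over the training pairs (a stand-in for a learned mapping)."""
    weights = []
    for emb in embeddings:
        dist_sq = sum((q - x) ** 2 for q, x in zip(query, emb))
        weights.append(math.exp(-dist_sq / (2 * bandwidth ** 2)))
    total = sum(weights)
    dim = len(params[0])
    return [
        sum(w * p[i] for w, p in zip(weights, params)) / total
        for i in range(dim)
    ]


def lerp(a, b, t):
    """Linearly interpolate between two embeddings."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


# Hypothetical toy data: three (embedding, parameter) training pairs.
train_embeddings = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
train_params = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# An embedding halfway between the first two training examples was never
# seen during "training", yet it maps to an even blend of their parameters.
midpoint = lerp(train_embeddings[0], train_embeddings[1], 0.5)
novel_params = kernel_map(midpoint, train_embeddings, train_params)
```

In this toy setup the first two entries of `novel_params` are equal and dominate the third, because the query is equidistant from the first two training embeddings and farther from the third.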

| Field | Value |
| --- | --- |
| Author(s): | Silvia Zuffi and Michael J. Black |
| Book Title: | European Conference on Computer Vision (ECCV 2024) |
| Year: | 2024 |
| Month: | September |
| Series: | LNCS |
| Publisher: | Springer Cham |
| Bibtex Type: | Conference Paper (inproceedings) |
| Event Place: | Milan, Italy |
| State: | Published |
| URL: | https://awol.is.tue.mpg.de/ |
| Electronic Archiving: | grant_archive |

BibTeX

@inproceedings{zuffi_eccv2024_awol,
  title = {{AWOL: Analysis WithOut synthesis using Language}},
  booktitle = {European Conference on Computer Vision (ECCV 2024)},
  abstract = {Many classical parametric 3D shape models exist, but creating novel shapes with such models requires expert knowledge of their parameters. For example, imagine creating a specific type of tree using procedural graphics or a new kind of animal from a statistical shape model. Our key idea is to leverage language to control such existing models to produce novel shapes. This involves learning a mapping between the latent space of a vision-language model and the parameter space of the 3D model, which we do using a small set of shape and text pairs. Our hypothesis is that mapping from language to parameters allows us to generate parameters for objects that were never seen during training. If the mapping between language and parameters is sufficiently smooth, then interpolation or generalization in language should translate appropriately into novel 3D shapes. We test our approach with two very different types of parametric shape models (quadrupeds and arboreal trees). We use a learned statistical shape model of quadrupeds and show that we can use text to generate new animals not present during training. In particular, we demonstrate state-of-the-art shape estimation of 3D dogs. This work also constitutes the first language-driven method for generating 3D trees. Finally, embedding images in the CLIP latent space enables us to generate animals and trees directly from images.},
  series = {LNCS},
  publisher = {Springer Cham},
  month = sep,
  year = {2024},
  slug = {zuffi_eccv2024_awol},
  author = {Zuffi, Silvia and Black, Michael J.},
  url = {https://awol.is.tue.mpg.de/},
  month_numeric = {9}
}