3D Pose from Images

Estimating 3D human pose from 2D images is inherently ambiguous. To address this ambiguity, we develop inference methods and human pose models that enable the prediction of 3D pose from images. Learned models of human pose rely on training data, but we find that existing motion capture datasets are too limited to cover the full range of human poses.

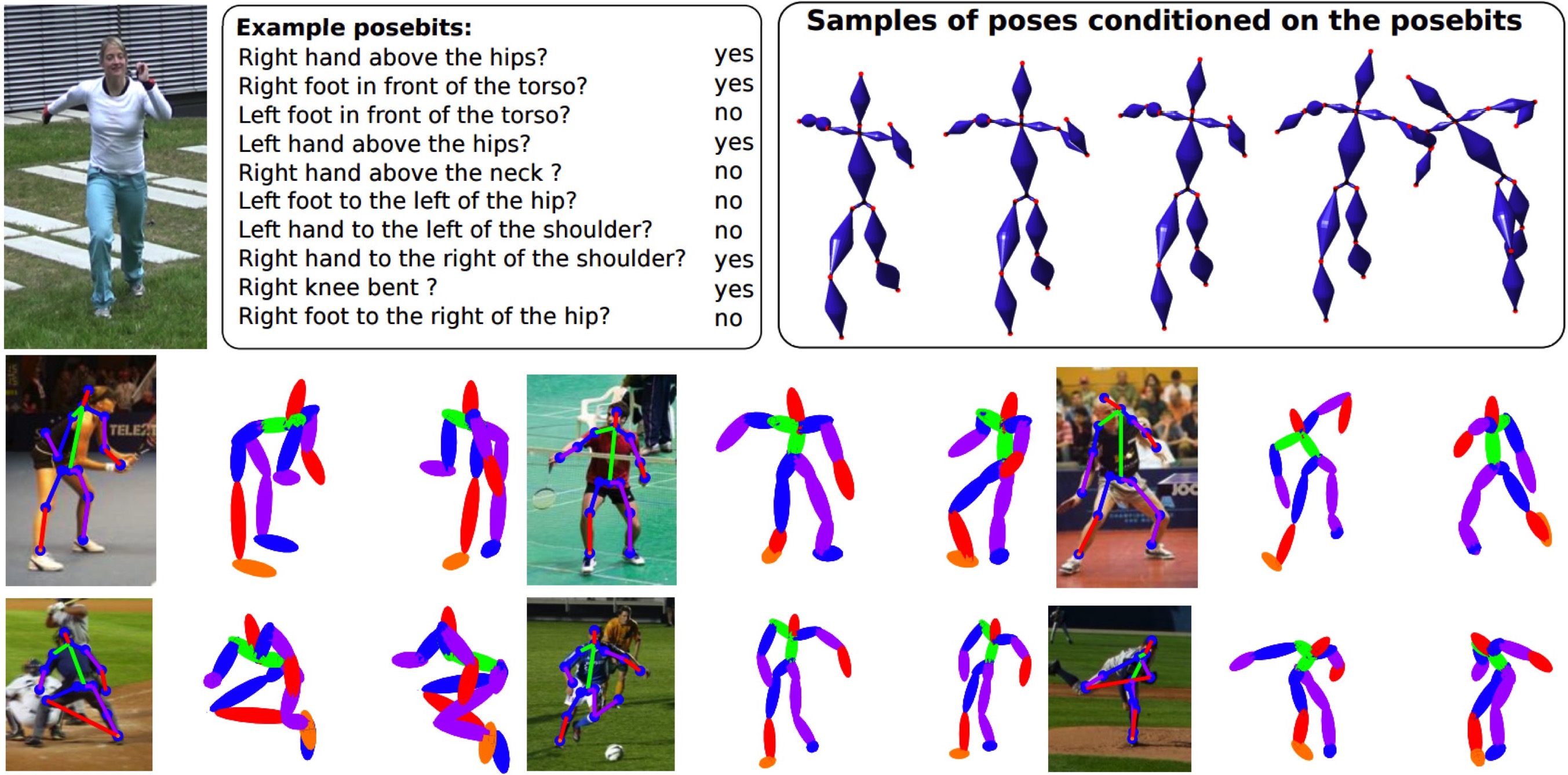

In [] we advocate the inference of qualitative information about 3D human pose, called posebits, from images. Posebits are relationships between body parts (e.g. left leg in front of right leg, or hands close to each other). Posebits have three advantages as a mid-level representation: 1) for many tasks of interest, such as semantic image retrieval, this qualitative pose information may be sufficient; 2) it is relatively easy to annotate large image corpora with posebits, since annotation only requires answers to yes/no questions; and 3) posebits help resolve challenging pose ambiguities and therefore facilitate the difficult task of image-based 3D pose estimation. We introduce posebits, a posebit database, a method for selecting posebits that are useful for pose estimation, and a structural SVM model for posebit inference.
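To make the idea of a posebit concrete, the following minimal sketch derives two yes/no relationships from a known 3D pose, which is roughly how such labels could be generated from motion capture data. It is an illustration only, not the method from the work above: the joint names, the use of feet as a stand-in for legs, the camera-facing z axis, and the 0.25 m distance threshold are all assumptions made for this example.

```python
import numpy as np

# Hypothetical joint names and row indices, chosen only for this illustration.
JOINTS = {"left_hand": 0, "right_hand": 1, "left_foot": 2, "right_foot": 3}


def posebits(pose, near_threshold=0.25):
    """Return a dict of boolean posebits for one 3D pose.

    pose: (num_joints, 3) array of joint positions in metres. The z axis is
    assumed to point away from the camera, so a smaller z is "in front".
    near_threshold: assumed distance (metres) below which two joints count
    as "close to each other".
    """
    p = {name: pose[i] for name, i in JOINTS.items()}
    hand_distance = np.linalg.norm(p["left_hand"] - p["right_hand"])
    return {
        # Feet are used here as a simple proxy for the legs.
        "left_foot_in_front_of_right_foot": bool(
            p["left_foot"][2] < p["right_foot"][2]
        ),
        "hands_close": bool(hand_distance < near_threshold),
    }


# Example pose: hands 15 cm apart, left foot nearer to the camera.
pose = np.array([
    [0.10, 1.0, 0.0],    # left hand
    [-0.05, 1.0, 0.0],   # right hand
    [0.10, 0.0, -0.3],   # left foot
    [-0.10, 0.0, 0.2],   # right foot
])
bits = posebits(pose)
```

Each entry of `bits` is the answer to one yes/no question, which is the same form of answer an annotator would give when labelling an image.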

In [] we make two key contributions to estimating 3D pose from 2D joint locations. First, we collect a dataset covering a wide range of poses designed to explore the limits of human pose. We observe that joint limits vary with pose, and we learn the first pose-dependent prior of joint limits. Second, we introduce a new method that infers 3D pose from 2D joint locations using the learned prior and an over-complete dictionary of poses. This method achieves state-of-the-art results on estimating 3D pose from 2D pose.
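As a rough illustration of how a dictionary of poses can lift 2D joints to 3D, the sketch below fits the 2D observations with a sparse combination of projected basis poses and then applies the same weights to the 3D basis poses. It is not the method described above. It assumes a known orthographic camera that simply drops the z coordinate, uses a plain greedy selection (orthogonal matching pursuit), and omits the pose-dependent joint-limit prior entirely. The function name `lift_to_3d` and its parameters are hypothetical.

```python
import numpy as np


def lift_to_3d(points_2d, dictionary, num_atoms=2):
    """Greedy sparse fit of 2D joints with a dictionary of 3D poses.

    points_2d:  (J, 2) observed 2D joint locations.
    dictionary: (K, J, 3) hypothetical 3D basis poses.
    num_atoms:  number of basis poses allowed in the combination.
    Assumes an orthographic camera that drops the z coordinate.
    """
    K = dictionary.shape[0]
    # Project every basis pose to 2D and flatten: one column per basis pose.
    A = dictionary[:, :, :2].reshape(K, -1).T          # (2J, K)
    y = np.asarray(points_2d, dtype=float).reshape(-1)  # (2J,)
    norms = np.linalg.norm(A, axis=0) + 1e-12

    support, coeffs = [], np.zeros(0)
    residual = y.copy()
    for _ in range(num_atoms):
        # Pick the basis pose most correlated with the current residual.
        scores = np.abs(A.T @ residual) / norms
        if support:
            scores[support] = -np.inf  # never pick the same pose twice
        support.append(int(np.argmax(scores)))
        # Refit all selected weights jointly by least squares.
        coeffs, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coeffs

    weights = np.zeros(K)
    weights[support] = coeffs
    # The weights fitted in 2D are reused to combine the 3D basis poses.
    pose_3d = np.tensordot(weights, dictionary, axes=1)  # (J, 3)
    return pose_3d, weights
```

Because depth is never observed, many 3D poses explain the same 2D joints equally well. In this sketch only the sparsity constraint limits the ambiguity, which is where a prior on valid poses, such as the learned joint-limit prior, would come in.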
