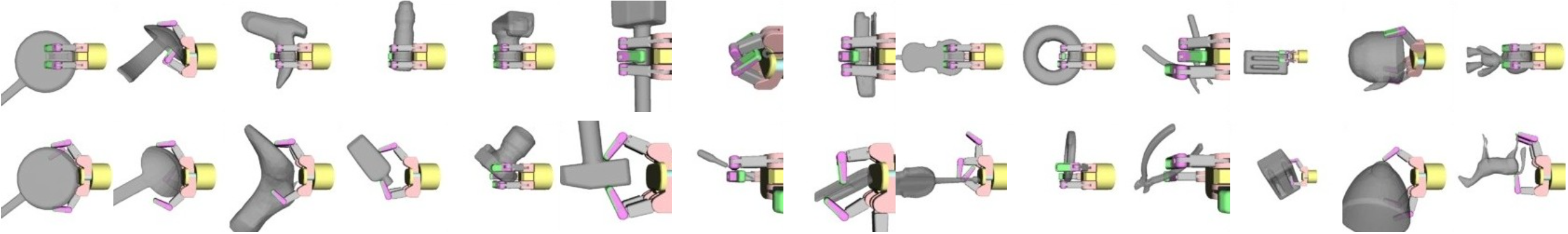

Learning to Grasp from Big Data

Data-driven approaches to grasping address the challenges of grasp synthesis that arise in the real world, such as noisy sensors and incomplete information about the objects and the environment. They focus on finding a representation of the perceptual data from which one can predict whether a given grasp will succeed.

Recent advances in many research areas, e.g. computer vision and speech recognition, are based on supervised data-driven methods and are due to the increase in computational capacity and available data. This work provides the first labeled dataset for grasp prediction, making it possible to apply state-of-the-art data-driven learning techniques, such as deep learning and random forests, to grasp prediction for known and unknown objects.

Ideally, labeled datasets for grasp prediction should be obtained from robot experiments. This procedure requires supervision and is mostly bound by the run-time of the robot experiments, rendering it infeasible for obtaining large datasets. We instead generate feasible and infeasible grasps in simulation, using physics simulation to narrow the simulation-reality gap. Using crowd-sourcing, we empirically verify that the proposed grasp quality metric is consistent with human belief.
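One simple way to quantify such consistency is the fraction of grasps on which a thresholded quality score agrees with the majority of human votes. The sketch below only illustrates that idea and is not the evaluation protocol used in this work: the function names, the threshold of 0.5, and all scores and votes are made up for the example.

```python
from collections import Counter


def majority_vote(votes):
    """Return the most common label among crowd-sourced votes.

    True means the annotator judged the grasp to be stable.
    """
    return Counter(votes).most_common(1)[0][0]


def agreement(metric_scores, crowd_votes, threshold=0.5):
    """Fraction of grasps where the thresholded metric matches the crowd majority.

    The threshold is a hypothetical choice, not a value from the dataset.
    """
    matches = 0
    for score, votes in zip(metric_scores, crowd_votes):
        predicted_stable = score >= threshold
        if predicted_stable == majority_vote(votes):
            matches += 1
    return matches / len(metric_scores)


# Made-up example: four simulated grasps, three annotators each.
scores = [0.9, 0.2, 0.7, 0.4]
votes = [
    [True, True, False],
    [False, False, True],
    [True, False, False],
    [False, False, False],
]
rate = agreement(scores, votes)
print(f"agreement with crowd majority: {rate:.2f}")
```

An odd number of annotators per grasp avoids ties in the majority vote; with an even number, a tie-breaking rule would have to be chosen.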

This dataset can be used to bootstrap data-driven techniques such that refinement for real-world grasping requires only a small number of robot experiments. First empirical results demonstrate that learning techniques with less capacity (linear methods) cannot cope with the variety of objects and thus cannot be used for general-purpose grasp prediction.
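The capacity argument can be illustrated on a toy problem that has nothing to do with the actual dataset. In the sketch below, a hypothetical two-dimensional "grasp feature" is labeled stable when both components share a sign, which is an XOR-like pattern that no linear decision boundary can separate. A perceptron stands in for the linear methods and a nearest-neighbor classifier for a higher-capacity model; both choices, and the data itself, are assumptions made for the example.

```python
def make_grid(offset):
    """Toy 2-D features on a regular grid; label +1 if both components share a sign."""
    coords = [(2 * i - 9 + offset) / 10 for i in range(10)]
    return [((x, y), 1 if x * y > 0 else -1) for x in coords for y in coords]


def train_perceptron(data, epochs=50):
    """Classic perceptron updates; returns weights (w0, w1) and bias b."""
    w0, w1, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x, y), label in data:
            if label * (w0 * x + w1 * y + b) <= 0:  # misclassified point
                w0 += label * x
                w1 += label * y
                b += label
    return w0, w1, b


def predict_linear(model, point):
    w0, w1, b = model
    return 1 if w0 * point[0] + w1 * point[1] + b > 0 else -1


def predict_nearest(train, point):
    """1-nearest-neighbor: copy the label of the closest training point."""
    def sq_dist(item):
        (x, y), _ = item
        return (x - point[0]) ** 2 + (y - point[1]) ** 2
    return min(train, key=sq_dist)[1]


def accuracy(predict, data):
    return sum(predict(p) == label for p, label in data) / len(data)


train = make_grid(0.0)   # training grid
test = make_grid(0.5)    # shifted grid, so no test point is in the training set

linear_model = train_perceptron(train)
lin_acc = accuracy(lambda p: predict_linear(linear_model, p), test)
nn_acc = accuracy(lambda p: predict_nearest(train, p), test)
print(f"linear: {lin_acc:.2f}, nearest neighbor: {nn_acc:.2f}")
```

On this toy data the nearest-neighbor model classifies every test point correctly, while a linear boundary necessarily misclassifies some of them, whatever its weights. Real grasp data is far higher-dimensional, so this shows only the qualitative effect.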

In future work, we want to analyze and empirically evaluate the data generation process itself, further closing the simulation-reality gap. The database will be extended with more objects and real robot experiments, including further data cues (tactile information), so that predictive models for the actual grasp execution can be learned as well.
