4D Shape

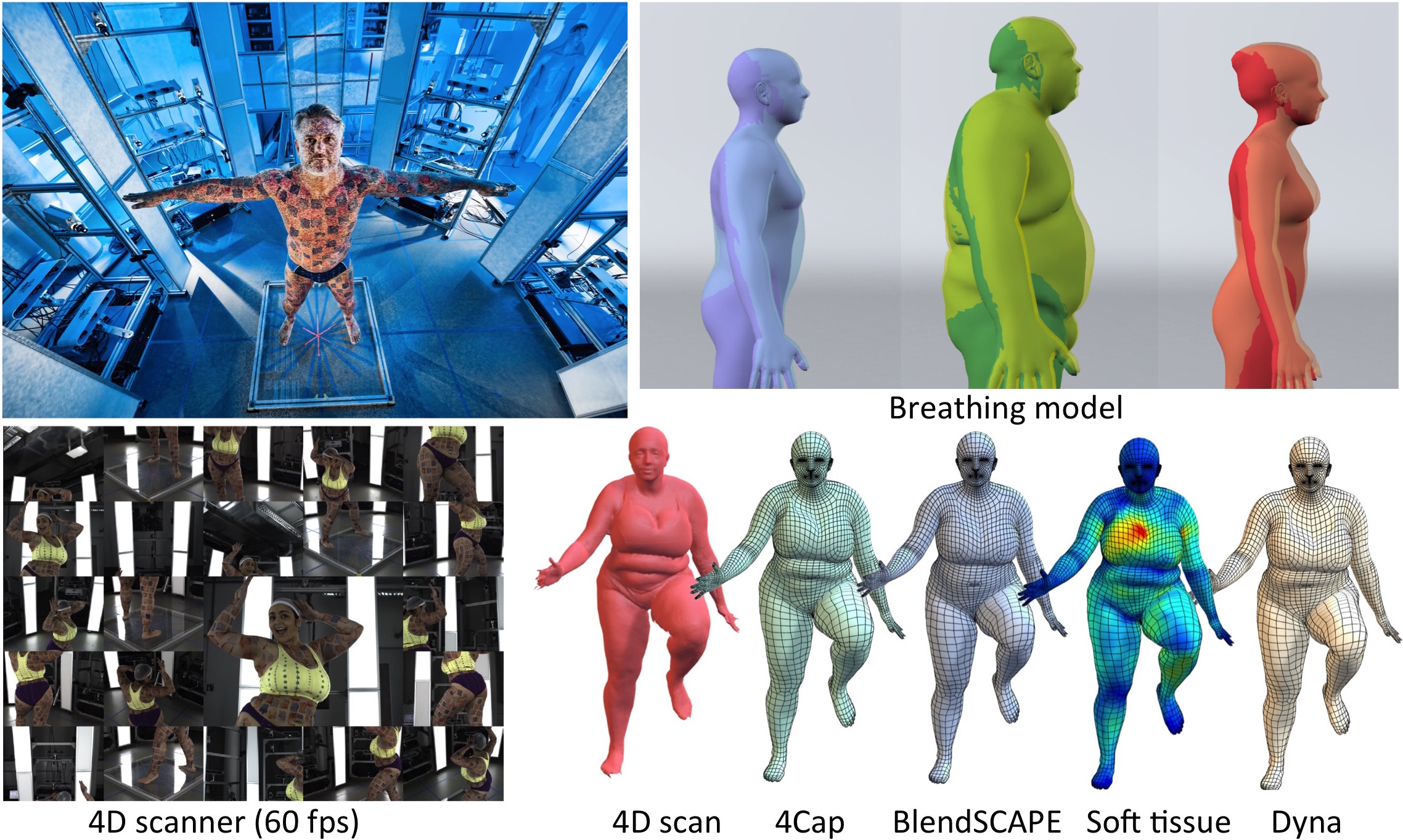

Human bodies are dynamic; they deform as they move, jiggle due to soft-tissue dynamics, and change shape with respiration. In [] we learn a model of body shape deformations due to breathing for different breathing types and provide simple animation controls to render lifelike breathing regardless of body shape. Using 3D scans of 58 human subjects, we augment a SCAPE model to include breathing shape change for different genders, body shapes, and breathing types.
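As an illustration of what "simple animation controls" for breathing can look like, here is a minimal sketch. It assumes that a breathing deformation is stored as per-vertex displacements for two hypothetical breathing types (chest and stomach), and that an animator controls only a breathing rate, a depth, and a chest/stomach mix. The function names, parameters, and the cosine-shaped signal are illustrative assumptions. They are not the learned SCAPE-based model described above.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def breathing_amplitude(t: float, rate_bpm: float, depth: float) -> float:
    """Smooth periodic breathing signal in [0, depth] (hypothetical control).

    0 means fully exhaled, `depth` means fully inhaled;
    `rate_bpm` is the breathing rate in breaths per minute.
    """
    phase = 2.0 * math.pi * (rate_bpm / 60.0) * t
    return depth * 0.5 * (1.0 - math.cos(phase))


def breathe(
    base: Sequence[Vec3],
    chest_offsets: Sequence[Vec3],
    stomach_offsets: Sequence[Vec3],
    chest_weight: float,
    t: float,
    rate_bpm: float = 12.0,
    depth: float = 1.0,
) -> List[Vec3]:
    """Return vertex positions at time t.

    Each vertex moves along a blend of hypothetical chest-breathing and
    stomach-breathing displacements, scaled by the current amplitude.
    chest_weight = 1.0 gives pure chest breathing, 0.0 pure stomach breathing.
    """
    a = breathing_amplitude(t, rate_bpm, depth)
    w = chest_weight
    posed: List[Vec3] = []
    for v, c, s in zip(base, chest_offsets, stomach_offsets):
        posed.append(tuple(v[i] + a * (w * c[i] + (1.0 - w) * s[i]) for i in range(3)))
    return posed


# Usage: one vertex, an even chest/stomach mix, 12 breaths per minute.
base = [(0.0, 0.0, 0.0)]
chest = [(0.0, 0.0, 1.0)]
stomach = [(0.0, 1.0, 0.0)]
print(breathe(base, chest, stomach, 0.5, t=0.0))  # fully exhaled: unchanged
print(breathe(base, chest, stomach, 0.5, t=2.5))  # half a 5 s cycle: fully inhaled
```

Because the displacements are added to whatever base mesh is supplied, the same controls apply to any body shape that shares the mesh topology. This mirrors the "regardless of body shape" goal, though a learned model would also adapt the displacements themselves to the body.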

Current 3D scanners capture only static bodies at high spatial resolution, while mocap systems capture only a sparse set of 3D points at high temporal resolution. To better understand how people deform as they move, we need both high spatial and high temporal resolution. To that end, we commissioned the world's first 4D scanner, which captures detailed full-body shape at 60 frames per second. This 4D output, however, is simply a sequence of point clouds. To model the statistics of human shape in motion, we first register a common template mesh to each sequence in a process we call 4Cap [].

Using over 40,000 registered meshes of ten subjects, we learn how soft-tissue motion causes mesh triangles to deform relative to a base 3D body model []. The resulting Dyna model is a second-order auto-regressive model that predicts soft-tissue deformations from the previous deformations, the velocity and acceleration of the body, and the angular velocities and accelerations of the limbs. Dyna also models how deformations vary with a person’s body mass index (BMI), producing different deformations for people with different shapes. We provide tools for animators to modify the deformations and apply them to new stylized characters. We have also ported this model to our vertex-based SMPL model [].
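To make the phrase "second-order auto-regressive" concrete, here is a minimal sketch. It assumes that the soft-tissue deformation at each frame is summarized by a short coefficient vector and that the prediction is linear: the next deformation is a weighted sum of the two previous deformations plus a term driven by the current motion features (body velocity/acceleration, limb angular velocities/accelerations). The matrices `A1`, `A2`, `B` and the function names are hypothetical. A learned model would fit them to registered meshes and, as described above, make them depend on body shape (BMI), which this sketch omits.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def predict_deformation(
    d_prev: Sequence[float],   # deformation coefficients at frame t-1
    d_prev2: Sequence[float],  # deformation coefficients at frame t-2
    motion: Sequence[float],   # motion features at frame t
    A1: Matrix, A2: Matrix, B: Matrix,
) -> Vector:
    """One linear second-order AR step: d_t = A1 d_{t-1} + A2 d_{t-2} + B x_t."""
    a = matvec(A1, d_prev)
    b = matvec(A2, d_prev2)
    c = matvec(B, motion)
    return [x + y + z for x, y, z in zip(a, b, c)]


def rollout(
    d_prev: Vector, d_prev2: Vector, motions: Sequence[Sequence[float]],
    A1: Matrix, A2: Matrix, B: Matrix,
) -> List[Vector]:
    """Predict one deformation per motion frame, feeding predictions back in."""
    history = [d_prev2, d_prev]
    for x in motions:
        history.append(predict_deformation(history[-1], history[-2], x, A1, A2, B))
    return history[2:]


# Usage: a 1D deformation hit by a single motion impulse.
A1, A2, B = [[1.6]], [[-0.8]], [[0.5]]
motions = [[1.0]] + [[0.0]] * 7
out = rollout([0.0], [0.0], motions, A1, A2, B)
print([round(d[0], 4) for d in out])
```

The coefficients here are chosen by hand so that the dynamics are damped (the characteristic roots have magnitude about 0.89). A single impulse then produces a rise, an overshoot past zero, and a decaying oscillation, which is qualitatively the jiggle that a second-order model can capture and a model using only the current pose cannot.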
