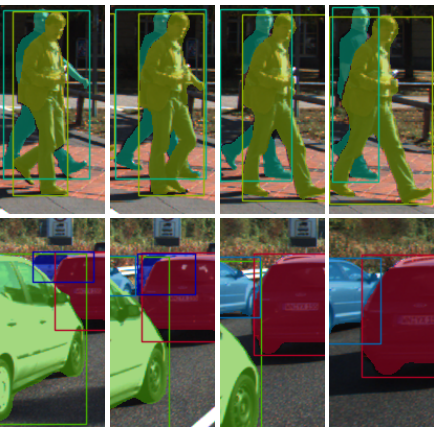

This paper extends the popular task of multi-object tracking to multi-object tracking and segmentation (MOTS). Towards this goal, we create dense pixel-level annotations for two existing tracking datasets using a semi-automatic annotation procedure. Our new annotations comprise 65,213 pixel masks for 977 distinct objects (cars and pedestrians) in 10,870 video frames. For evaluation, we extend existing multi-object tracking metrics to this new task. Moreover, we propose a new baseline method which jointly addresses detection, tracking, and segmentation with a single convolutional network. We demonstrate the value of our datasets by achieving improvements in performance when training on MOTS annotations. We believe that our datasets, metrics and baseline will become a valuable resource towards developing multi-object tracking approaches that go beyond 2D bounding boxes.

| Field | Value |
|---|---|
| Author(s) | Paul Voigtlaender and Michael Krause and Aljosa Osep and Jonathon Luiten and Berin Balachandar Gnana Sekar and Andreas Geiger and Bastian Leibe |
| Book Title | Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR) |
| Year | 2019 |
| Month | June |
| BibTeX Type | Conference Paper (inproceedings) |
| Event Name | IEEE International Conference on Computer Vision and Pattern Recognition (CVPR) 2019 |
| Event Place | Long Beach, USA |


BibTeX

    @inproceedings{Voigtlaender2019CVPR,
      title = {MOTS: Multi-Object Tracking and Segmentation},
      booktitle = {Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},
      abstract = {This paper extends the popular task of multi-object tracking to multi-object tracking and segmentation (MOTS). Towards this goal, we create dense pixel-level annotations for two existing tracking datasets using a semi-automatic annotation procedure. Our new annotations comprise 65,213 pixel masks for 977 distinct objects (cars and pedestrians) in 10,870 video frames. For evaluation, we extend existing multi-object tracking metrics to this new task. Moreover, we propose a new baseline method which jointly addresses detection, tracking, and segmentation with a single convolutional network. We demonstrate the value of our datasets by achieving improvements in performance when training on MOTS annotations. We believe that our datasets, metrics and baseline will become a valuable resource towards developing multi-object tracking approaches that go beyond 2D bounding boxes.},
      month = jun,
      year = {2019},
      slug = {voigtlaender2019cvpr},
      author = {Voigtlaender, Paul and Krause, Michael and Osep, Aljosa and Luiten, Jonathon and Sekar, Berin Balachandar Gnana and Geiger, Andreas and Leibe, Bastian},
      month_numeric = {6}
    }