ARCTIC: A Dataset for Dexterous Bimanual Hand-Object Manipulation

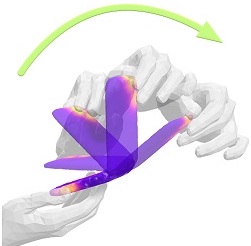

Humans intuitively understand that inanimate objects do not move by themselves, but that state changes are typically caused by human manipulation (e.g., the opening of a book). This is not yet the case for machines. In part this is because there exist no datasets with ground-truth 3D annotations for the study of physically consistent and synchronised motion of hands and articulated objects. To this end, we introduce ARCTIC -- a dataset of two hands that dexterously manipulate objects, containing 2.1M video frames paired with accurate 3D hand and object meshes and detailed, dynamic contact information. It contains bimanual articulation of objects such as scissors or laptops, where hand poses and object states evolve jointly in time. We propose two novel articulated hand-object interaction tasks: (1) Consistent motion reconstruction: Given a monocular video, the goal is to reconstruct two hands and articulated objects in 3D, so that their motions are spatio-temporally consistent. (2) Interaction field estimation: Dense relative hand-object distances must be estimated from images. We introduce two baselines, ArcticNet and InterField, respectively, and evaluate them qualitatively and quantitatively on ARCTIC.
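To make the second task more concrete, one plausible reading of "dense relative hand-object distances" is a per-vertex field: for every vertex of one mesh, the distance to the nearest vertex of the other mesh. The sketch below illustrates that reading only. The function name `interaction_field`, the brute-force search, and the toy coordinates are assumptions made for illustration; they are not taken from the ARCTIC code or from the InterField baseline, which estimates such distances from images rather than computing them from known meshes.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def interaction_field(source: Sequence[Point], target: Sequence[Point]) -> List[float]:
    """For each source vertex, return the distance to its nearest target vertex.

    Hypothetical helper: brute-force O(len(source) * len(target)) search,
    fine for a toy example but too slow for full-resolution meshes.
    """
    if not target:
        raise ValueError("target must contain at least one vertex")
    return [min(math.dist(p, q) for q in target) for p in source]


# Toy stand-ins for mesh vertices (made-up coordinates, in metres).
hand_vertices = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.1)]
object_vertices = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.0, 0.4, 0.1)]

# The field is directional: one value per hand vertex, and one per object vertex.
hand_to_object = interaction_field(hand_vertices, object_vertices)
object_to_hand = interaction_field(object_vertices, hand_vertices)

print(hand_to_object)
print(object_to_hand)
```

Values near zero mark vertices that are close to the other mesh, so thresholding such a field would give a simple binary contact estimate. A spatial index such as a k-d tree would be the usual replacement for the brute-force search at realistic mesh sizes.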

| Field | Value |
| --- | --- |
| Author(s) | Fan, Zicong and Taheri, Omid and Tzionas, Dimitrios and Kocabas, Muhammed and Kaufmann, Manuel and Black, Michael J and Hilliges, Otmar |
| Book Title | IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR) |
| Pages | 12943--12954 |
| Year | 2023 |
| Month | June |
| BibTeX Type | Conference Paper (inproceedings) |
| DOI | 10.1109/CVPR52729.2023.01244 |
| Event Name | CVPR 2023 |
| Event Place | Vancouver |
| State | Published |
| URL | https://arctic.is.tue.mpg.de |
| Electronic Archiving | grant_archive |

BibTeX

@inproceedings{fan2023arctic,
  title = {{ARCTIC}: A Dataset for Dexterous Bimanual Hand-Object Manipulation},
  booktitle = {IEEE/CVF Conf.~on Computer Vision and Pattern Recognition (CVPR)},
  abstract = {Humans intuitively understand that inanimate objects do not move by themselves, but that state changes are typically caused by human manipulation (e.g., the opening of a book). This is not yet the case for machines. In part this is because there exist no datasets with ground-truth 3D annotations for the study of physically consistent and synchronised motion of hands and articulated objects. To this end, we introduce ARCTIC -- a dataset of two hands that dexterously manipulate objects, containing 2.1M video frames paired with accurate 3D hand and object meshes and detailed, dynamic contact information. It contains bi-manual articulation of objects such as scissors or laptops, where hand poses and object states evolve jointly in time. We propose two novel articulated hand-object interaction tasks: (1) Consistent motion reconstruction: Given a monocular video, the goal is to reconstruct two hands and articulated objects in 3D, so that their motions are spatio-temporally consistent. (2) Interaction field estimation: Dense relative hand-object distances must be estimated from images. We introduce two baselines ArcticNet and InterField, respectively and evaluate them qualitatively and quantitatively on ARCTIC.},
  pages = {12943--12954},
  month = jun,
  year = {2023},
  slug = {arctic},
  author = {Fan, Zicong and Taheri, Omid and Tzionas, Dimitrios and Kocabas, Muhammed and Kaufmann, Manuel and Black, Michael J and Hilliges, Otmar},
  url = {https://arctic.is.tue.mpg.de},
  month_numeric = {6}
}