Motion Optimization

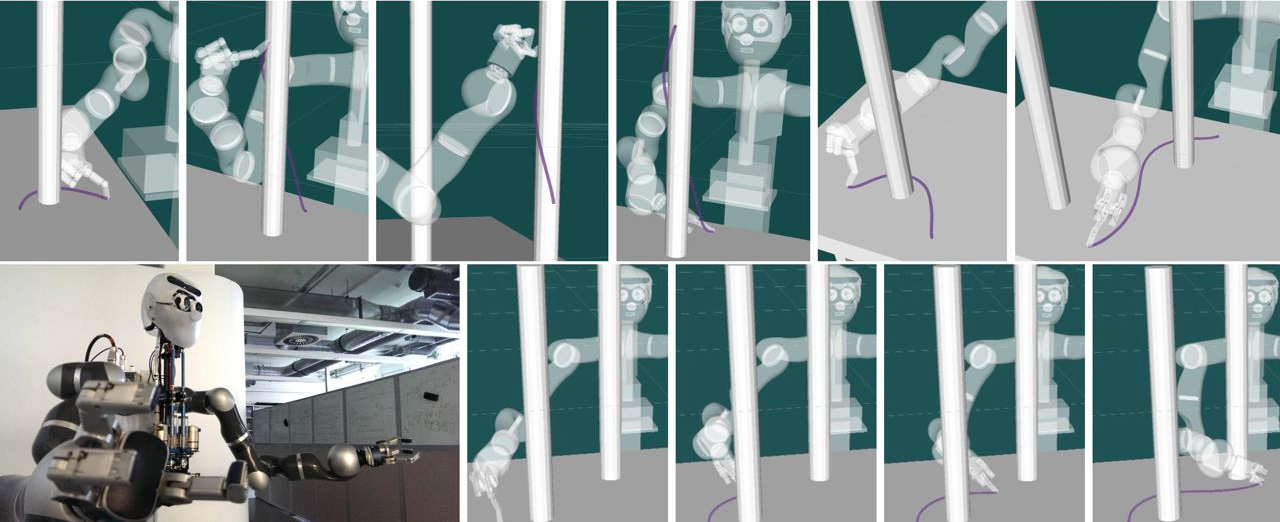

Motion generation is increasingly formalized as a large-scale optimization over the future outcomes of actions. For high-dimensional manipulation platforms, this optimization is so computationally difficult that traditional approaches long focused on the feasibility of a solution rather than even its local optimality. Recent efforts to understand the problem holistically and to exploit its structure for computational efficiency are starting to pay off, however. We now have very fast constrained optimizers that use second-order information to speed convergence and that exploit problem geometry much more effectively than traditional planners. At the Autonomous Motion Department, we are exploring this space from many directions.

One of our core efforts centers on formalizing the role of Riemannian geometry in representing how obstacles shape the geometry of their surroundings and how those models integrate efficiently into the optimization. Our optimizers account for environmental constraints, behavioral terms, and geometric models of the robot's surroundings while maintaining the computational efficiency needed to animate fast, responsive, and reactive behaviors on Apollo.
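To make the idea of obstacles shaping the geometry of their surroundings concrete, here is a minimal sketch. It is not the department's model: it assumes a hypothetical isotropic metric A(x) = s(x) I whose scale s(x) grows near a point obstacle, and the names `metric_scale`, `riemannian_length`, `alpha`, and `sigma` are illustrative only. Under such a metric, a path that cuts through the obstacle region becomes longer than a detour, even though it is shorter in the Euclidean sense.

```python
import math

def metric_scale(point, obstacle, alpha=10.0, sigma=0.5):
    """Scale s(x) of an assumed isotropic metric A(x) = s(x) * I.

    s(x) is 1 far from the obstacle and rises to 1 + alpha at its center.
    """
    dist_sq = (point[0] - obstacle[0]) ** 2 + (point[1] - obstacle[1]) ** 2
    return 1.0 + alpha * math.exp(-dist_sq / (2.0 * sigma ** 2))

def riemannian_length(path, obstacle):
    """Approximate path length under the metric with a midpoint rule."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        mid = ((x0 + x1) / 2.0, (y0 + y1) / 2.0)
        euclidean = math.hypot(x1 - x0, y1 - y0)
        # For A = s * I, sqrt(dx^T A dx) = sqrt(s) * |dx|.
        total += math.sqrt(metric_scale(mid, obstacle)) * euclidean
    return total

def euclidean_length(path):
    """Ordinary path length, for comparison."""
    return sum(math.hypot(x1 - x0, y1 - y0)
               for (x0, y0), (x1, y1) in zip(path, path[1:]))

def sample_line(start, end, n=50):
    """Return n + 1 evenly spaced points from start to end."""
    return [(start[0] + (end[0] - start[0]) * i / n,
             start[1] + (end[1] - start[1]) * i / n) for i in range(n + 1)]

obstacle = (0.0, 0.0)
# Straight path passes directly through the obstacle center.
straight = sample_line((-2.0, 0.0), (2.0, 0.0))
# Detour bends around the obstacle via the waypoint (0, 1.5).
detour = sample_line((-2.0, 0.0), (0.0, 1.5)) + sample_line((0.0, 1.5), (2.0, 0.0))[1:]

straight_cost = riemannian_length(straight, obstacle)
detour_cost = riemannian_length(detour, obstacle)
```

An optimizer that minimizes this kind of length is pushed away from the obstacle by the geometry itself rather than by a separate collision penalty, which is the intuition behind treating obstacles as part of the metric.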

Additionally, since robots can only gather information about the environment through limited sensors whose data must be processed online, no internal model of the environment will ever be precise enough to act blindly. We study how our motion system can leverage the intrinsic robustness of contact controllers by explicitly optimizing a profile of desired contacts designed to reduce uncertainty. In the same way that we might reach out to touch a table before picking up a glass in the dark, Apollo can localize himself efficiently through planned contact by reasoning about the trade-off between uncertainty reduction and task success.
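The trade-off between uncertainty reduction and task success can be illustrated with a one-dimensional toy problem. This is a sketch under stated assumptions, not the method used on Apollo: the table height is assumed to have a Gaussian belief, a contact is assumed to act as a noisy Gaussian measurement, and the grasp is assumed to succeed when the height error stays within a tolerance. All names and numbers below are hypothetical.

```python
import math

def success_probability(sigma, tolerance):
    """P(|error| <= tolerance) for a zero-mean Gaussian error with std sigma."""
    return math.erf(tolerance / (sigma * math.sqrt(2.0)))

def variance_after_contact(prior_var, contact_noise_var):
    """Posterior variance after fusing one Gaussian contact measurement."""
    return 1.0 / (1.0 / prior_var + 1.0 / contact_noise_var)

def expected_utility(sigma, tolerance, reward, cost):
    """Expected task reward minus the cost of any extra contact motion."""
    return reward * success_probability(sigma, tolerance) - cost

def best_plan(prior_sigma, contact_sigma, tolerance, reward, contact_cost):
    """Compare grasping directly with touching the table first."""
    direct = expected_utility(prior_sigma, tolerance, reward, 0.0)
    post_var = variance_after_contact(prior_sigma ** 2, contact_sigma ** 2)
    touch = expected_utility(math.sqrt(post_var), tolerance, reward, contact_cost)
    return ("touch_first", touch) if touch > direct else ("direct", direct)

# Assumed values in meters: a 3 cm prior uncertainty, a 5 mm contact sensing
# noise, and a 1 cm grasp tolerance.
uncertain_case = best_plan(prior_sigma=0.03, contact_sigma=0.005,
                           tolerance=0.01, reward=1.0, contact_cost=0.1)
# With a 2 mm prior the extra contact no longer pays for itself.
confident_case = best_plan(prior_sigma=0.002, contact_sigma=0.005,
                           tolerance=0.01, reward=1.0, contact_cost=0.1)
```

In this toy setting the planned contact is chosen only when the gain in success probability outweighs its cost, which mirrors touching the table first in the dark but not in a well-lit room.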

Our ongoing efforts are shaping Apollo into a friendly manipulation platform with natural, unimposing motion and efficient reactive behavior.
