Scene Models for Optical Flow

Historically, optical flow methods have made generic, spatially homogeneous assumptions about the structure of 2D image motion. In reality, optical flow varies across an image depending on object class. Simply put, different objects move differently. For rigid objects, the image motion is determined by the 3D object shape and the relative motion between object and camera. For articulated and non-rigid objects, the motion may be highly stereotyped. Consequently, we should be able to leverage knowledge about the objects in the scene, their semantic category, and their geometry to better estimate optical flow.

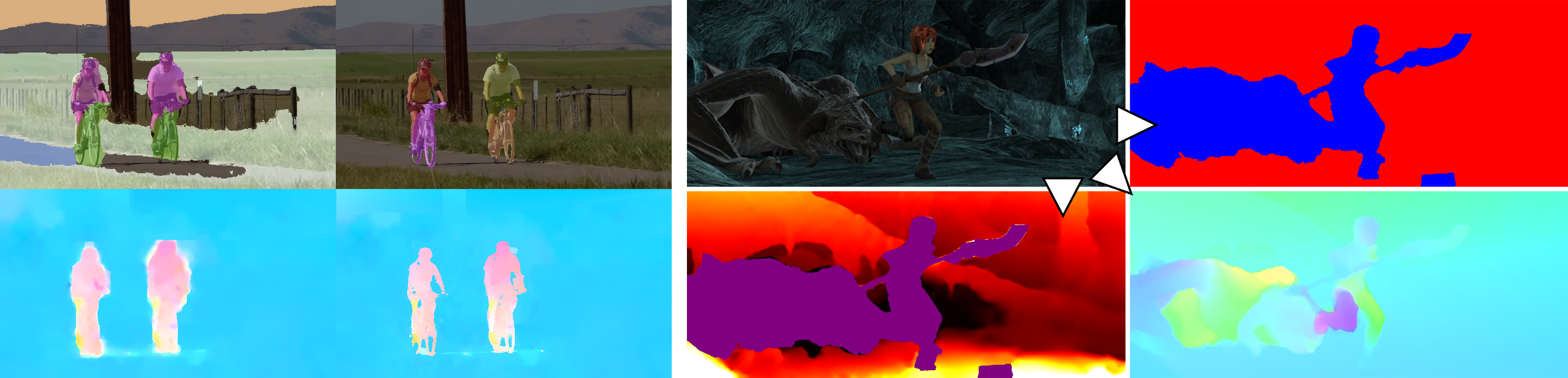

We proposed a method for semantic optical flow (SOF) [] estimation that exploits recent advances in static semantic scene segmentation to segment the image into objects of different types. We define different models of image motion in these regions depending on the type of object. For example, we model the motion of roads with homographies, vegetation with spatially smooth flow, and independently moving objects like cars and planes with affine motion plus deviations. We then pose the flow estimation problem using a novel formulation of localized layers, which addresses limitations of traditional layered models in dealing with complex scene motion. At the time of publication, SOF achieved the lowest error of any monocular method on the KITTI-2015 flow benchmark and produced qualitatively better flow and segmentation than recent top methods on a wide range of natural videos.
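To make the idea of a per-region motion model concrete, the following is a minimal sketch of fitting an affine motion model to the flow inside one segmented region by least squares. It is an illustration only, not the SOF implementation: the function names `fit_affine_flow` and `affine_flow`, the `(H, W, 2)` flow layout, and the boolean region mask are all assumptions made for this example.

```python
import numpy as np

def fit_affine_flow(flow, mask):
    """Least-squares fit of an affine motion model inside one region.

    Assumed (hypothetical) conventions: `flow` has shape (H, W, 2) holding
    (u, v) per pixel, and `mask` is a boolean (H, W) array for the region.
    The model is u = a0 + a1*x + a2*y and v = b0 + b1*x + b2*y.
    """
    ys, xs = np.nonzero(mask)
    # Design matrix with one row [1, x, y] per pixel in the region.
    A = np.column_stack([np.ones(len(xs)), xs, ys])
    params_u, *_ = np.linalg.lstsq(A, flow[ys, xs, 0], rcond=None)
    params_v, *_ = np.linalg.lstsq(A, flow[ys, xs, 1], rcond=None)
    return params_u, params_v

def affine_flow(params_u, params_v, shape):
    """Evaluate the fitted affine model at every pixel of an image of `shape`."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    u = params_u[0] + params_u[1] * xs + params_u[2] * ys
    v = params_v[0] + params_v[1] * xs + params_v[2] * ys
    return np.stack([u, v], axis=-1)
```

Under these assumptions, the "deviations" from the affine model in a region are simply the residual `flow - affine_flow(params_u, params_v, flow.shape[:2])` restricted to the mask; a road region would use a homography in place of the affine model.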

Furthermore, the optical flow of natural scenes is a combination of the motion of the observer and the independent motion of objects. Existing algorithms typically focus either on recovering motion and structure under the assumption of a purely static world or on estimating optical flow for general, unconstrained scenes. We combine these approaches in an optical flow algorithm that estimates an explicit segmentation of moving objects using appearance and physical constraints. In static regions, we take advantage of strong constraints to jointly estimate the camera motion and the 3D structure of the scene over multiple frames. This also allows us to regularize the structure rather than the motion. Our formulation uses a Plane+Parallax framework, which works even under small baselines and reduces the motion estimation to a one-dimensional search problem, resulting in more accurate estimation. In moving regions, the flow is treated as unconstrained and computed with an existing optical flow method. The resulting Mostly-Rigid Flow (MR-Flow) method [] achieved state-of-the-art results on both the MPI-Sintel and KITTI-2015 benchmarks.
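The one-dimensional search can be illustrated with a small sketch. In a Plane+Parallax setting, once a pixel has been mapped by the homography of a reference plane, the residual motion of a static point lies on the line through the epipole, so only a scalar magnitude along that line remains unknown. The code below is a hedged illustration of that idea, not the MR-Flow implementation: the function names, the candidate grid `magnitudes`, and the user-supplied `cost_fn` (standing in for a photometric matching cost) are assumptions introduced for this example.

```python
import numpy as np

def apply_homography(H, pts):
    """Map (N, 2) pixel coordinates through a 3x3 homography."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = pts_h @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

def parallax_candidates(H, epipole, pts, magnitudes):
    """Candidate matches for each point, shape (N, K, 2).

    Each candidate is the plane-induced position plus a shift of one of the
    K scalar `magnitudes` along the unit direction away from the epipole.
    """
    warped = apply_homography(H, pts)
    direction = warped - epipole
    norm = np.linalg.norm(direction, axis=1, keepdims=True)
    direction = direction / np.maximum(norm, 1e-12)
    return warped[:, None, :] + magnitudes[None, :, None] * direction[:, None, :]

def search_1d(H, epipole, pts, magnitudes, cost_fn):
    """Pick, per point, the magnitude with the lowest matching cost.

    `cost_fn` is a hypothetical callable mapping (N, K, 2) candidate
    positions to (N, K) costs. Returns the chosen magnitudes and the
    resulting flow vectors.
    """
    cands = parallax_candidates(H, epipole, pts, magnitudes)
    best = np.argmin(cost_fn(cands), axis=1)
    rows = np.arange(len(pts))
    return magnitudes[best], cands[rows, best] - pts
```

Because the search runs over a single scalar per pixel instead of a two-dimensional displacement, the candidate set stays small and every candidate is consistent with a static scene by construction.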

Both of these methods are optimization-based and tend to be slow. Furthermore, they rely on manually defined constraints, which are often strong simplifications of the real world. To overcome these limitations, we present the Collaborative Competition framework [], which reasons about the whole scene in a joint, data-driven fashion. It learns to compute the segmentation and geometry of the scene, as well as the motion of the objects and the background, without explicit supervision.
