Causality in Trustworthy Machine Learning: Fairness, Robustness, Recourse, and Distributional Stability

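Causality is fundamental to fairness in machine learning because it helps separate biases that arise from spurious correlations from legitimate predictive relationships. Conventional fairness strategies, which are typically defined on statistical associations, often fail to distinguish causal from non-causal pathways and can therefore produce unjust decisions. Causal techniques, by contrast, model explicitly how sensitive attributes influence model outputs. This makes it possible to define counterfactual fairness: a prediction is counterfactually fair if it would stay the same had the individual's sensitive attribute been different, with the individual's background (exogenous) factors held fixed. The goal is that individuals are evaluated on their intrinsic qualities rather than on factors beyond their control. Embedding causal models into fairness constraints helps manage both observed and hidden biases, strengthening the ethical reliability of AI systems.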
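To make the counterfactual criterion concrete, the sketch below assumes a hypothetical linear structural causal model (SCM) in which a sensitive attribute `A` shifts an observed feature `X`. It checks each predictor with the abduction, action, prediction recipe: recover the background factor `U`, flip `A`, and recompute `X`. The SCM, the coefficient `BETA`, and all function names are illustrative assumptions rather than a method from the text. In real applications, the structural equations must be posited or estimated.

```python
import numpy as np

# Hypothetical linear SCM (illustrative assumption):
#   A ~ Bernoulli(0.5)   sensitive attribute
#   U ~ N(0, 1)          exogenous background factor ("intrinsic quality")
#   X = U + BETA * A     observed feature partly caused by A
BETA = 2.0

def sample(n, seed=0):
    rng = np.random.default_rng(seed)
    a = rng.integers(0, 2, size=n)
    u = rng.normal(size=n)
    return a, u + BETA * a

def counterfactual_x(a, x, a_new):
    u = x - BETA * a          # abduction: recover U from the observation
    return u + BETA * a_new   # action do(A = a_new), then prediction of X

def naive_predictor(a, x):
    return 0.5 * x            # uses X directly, so it inherits A's effect

def fair_predictor(a, x):
    return 0.5 * (x - BETA * a)  # depends only on the recovered U

def max_counterfactual_gap(predictor, a, x):
    """Largest change in prediction when A is flipped counterfactually."""
    a_cf = 1 - a
    x_cf = counterfactual_x(a, x, a_cf)
    return float(np.max(np.abs(predictor(a, x) - predictor(a_cf, x_cf))))

a, x = sample(1000)
print("naive gap:", max_counterfactual_gap(naive_predictor, a, x))
print("fair gap: ", max_counterfactual_gap(fair_predictor, a, x))
```

Under this SCM, the naive predictor's largest counterfactual gap is 0.5 * BETA = 1.0. The fair predictor's gap is zero up to floating-point rounding, because it depends only on `U`, which an intervention on `A` leaves unchanged.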

Robustness in machine learning, both to adversarial perturbations and to distributional shifts, also depends on causal insight. Models trained only on observational data often exploit associations that hold in the training distribution but break under unanticipated conditions. Causal modeling alleviates this weakness by disentangling stable relationships from incidental associations, which reduces vulnerability to adversarial manipulation. Causal adversarial training builds on this idea, aiming to keep performance consistent across varied data distributions while preserving fairness.
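The distinction between stable relationships and incidental associations can be illustrated with a hypothetical two-environment simulation. A causal feature `x_c` drives `y` through the same mechanism everywhere, while a spurious feature `x_s` is an effect of `y` whose strength flips sign between environments. This is not causal adversarial training. It only shows why a predictor built on the causal feature transfers across environments while one built on the spurious feature does not. The data-generating process, the environment strengths, and the helper names are assumptions made for illustration.

```python
import numpy as np

def make_env(n, spurious_strength, seed):
    """Hypothetical environment: x_c causes y; x_s is an effect of y whose
    strength (and sign) differs across environments."""
    rng = np.random.default_rng(seed)
    x_c = rng.normal(size=n)
    y = x_c + 0.5 * rng.normal(size=n)                 # stable mechanism
    x_s = spurious_strength * y + rng.normal(size=n)   # unstable association
    return x_c, x_s, y

def slope(x, y):
    """Least-squares slope of y regressed on x (with intercept)."""
    return float(np.polyfit(x, y, deg=1)[0])

train = make_env(10_000, spurious_strength=2.0, seed=1)
shifted = make_env(10_000, spurious_strength=-2.0, seed=2)

for name, (x_c, x_s, y) in [("train", train), ("shifted", shifted)]:
    print(f"{name:8s} causal slope={slope(x_c, y):+.3f}  "
          f"spurious slope={slope(x_s, y):+.3f}")
```

The causal slope stays near 1 in both environments. The spurious slope is positive in training and negative after the shift, so a model that leaned on `x_s` would degrade sharply under this distributional change.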

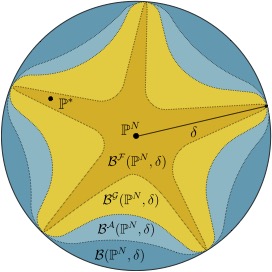

Distributionally robust optimization (DRO), which minimizes the worst-case expected loss over an ambiguity set of plausible distributions, benefits from a causal perspective because the ambiguity set can be shaped to respect structural dependencies in the data. Traditional DRO methods make broad assumptions about how distributions may shift, which often yields overly conservative models. Causal DRO refines this by restricting the ambiguity set to shifts consistent with the underlying causal structure. The aim is to improve accuracy and interpretability while still mitigating risk from uncertain environments.
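One way to see why causal structure makes DRO less conservative is to use a KL-divergence ball as the ambiguity set. Suppose the set may only shift the marginal of a cause `Z` while holding the mechanism P(Y | Z) fixed. By the chain rule for KL divergence, this set is a subset of the unconstrained KL ball, so its worst-case risk can only be lower. The sketch below evaluates both using the standard convex dual of KL-constrained DRO. The toy losses, the grouping variable `z`, the radius `rho`, and the function name `kl_dro_value` are hypothetical. This is not the causal DRO formulation of any specific paper.

```python
import numpy as np

def kl_dro_value(losses, probs, rho, lambdas=None):
    """Approximate sup_{Q: KL(Q||P) <= rho} E_Q[loss] via the convex dual
    min_{lam > 0} lam * rho + lam * log E_P[exp(loss / lam)],
    minimized over a log-spaced grid (every lam gives a valid upper bound)."""
    losses = np.asarray(losses, dtype=float)
    probs = np.asarray(probs, dtype=float)
    if lambdas is None:
        lambdas = np.logspace(-3, 3, 2000)
    m = losses.max()
    # Max-shift trick: exponents are <= 0, so exp never overflows.
    scaled = np.exp((losses[None, :] - m) / lambdas[:, None])
    log_mgf = m / lambdas + np.log(scaled @ probs)
    return float(np.min(lambdas * rho + lambdas * log_mgf))

# Hypothetical toy data: per-sample losses and a discrete cause Z.
z = np.array([0, 0, 0, 0, 1, 1, 1, 1])
losses = np.array([0.1, 0.9, 0.2, 0.8, 0.3, 1.5, 0.4, 1.4])
p = np.full(losses.size, 1 / losses.size)
rho = 0.1

# Unconstrained ball: Q may reweight every sample freely.
unconstrained = kl_dro_value(losses, p, rho)

# Causally constrained ball: Q may shift P(Z) but keeps P(Y | Z), hence the
# within-group loss distribution, fixed; only group-mean losses matter.
groups = np.unique(z)
group_p = np.array([p[z == g].sum() for g in groups])
group_loss = np.array([np.average(losses[z == g], weights=p[z == g])
                       for g in groups])
constrained = kl_dro_value(group_loss, group_p, rho)

print(f"nominal={losses @ p:.3f}  causal={constrained:.3f}  "
      f"unconstrained={unconstrained:.3f}")
```

Both worst-case values exceed the nominal risk. However, the causally constrained value is strictly smaller than the unconstrained one. By Jensen's inequality, the dual objective over group means is no larger than the dual objective over individual samples for every `lam`, which is the sense in which respecting causal structure tempers DRO's conservatism here.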