Challenging the Validity of Personality Tests for Large Language Models

Recent advances in large language models (LLMs) have made these models' responses increasingly human-like and have led to an unprecedented number of interactions between humans and language models. This has sparked interest in whether psychological traits, such as psychopathy, and personality characteristics, such as extraversion, might emerge in LLMs. Because the idea seems plausible at first glance, psychological tests are now being used to assess and quantify (potential) personality traits of LLMs. However, it is not clear a priori whether the validity of a psychological test transfers from one population (e.g., humans) to another (e.g., LLMs). Transferring measurement tools to LLMs without thorough examination can render certain inferences, such as those about personality traits, invalid.

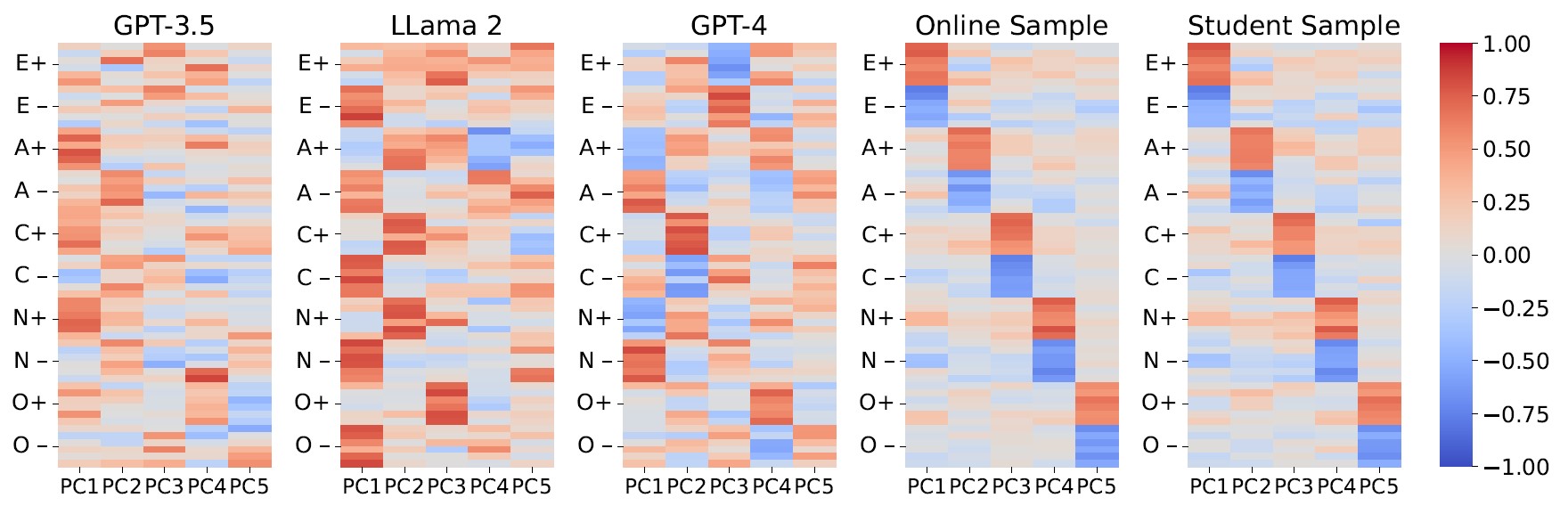

In this work, we investigate the measurement invariance of personality inventories between humans and LLMs. Specifically, we use exploratory factor analysis (EFA), confirmatory factor analysis (CFA), and reliability analysis to support our conclusions. This approach reflects a consensus of methods developed in psychometrics over the last decades and constitutes a standard procedure for developing, updating, or transferring psychometric instruments. Furthermore, we find significant inconsistencies in LLM responses in the form of an agree bias.
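Reliability analysis typically reports an internal-consistency coefficient for each scale. One widely used coefficient is Cronbach's alpha, $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_X^2}\right)$, where $k$ is the number of items, $\sigma_i^2$ the variance of item $i$, and $\sigma_X^2$ the variance of the total score. The following is a minimal standard-library sketch of that formula; the function name `cronbach_alpha` and the toy data are illustrative assumptions, not code or data from this work.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of (already reverse-scored) responses."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in responses]) for j in range(n_items)]
    # Sample variance of each respondent's total scale score.
    total_var = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Toy data (hypothetical): 4 respondents answering 3 items of one scale on a 1-5 Likert scale.
toy = [[4, 5, 4], [2, 2, 3], [3, 3, 3], [5, 4, 5]]
print(round(cronbach_alpha(toy), 3))  # 0.916
```

A high alpha alone does not establish that the same factor structure holds in a new population; that is what EFA and CFA address.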

We summarize the contributions of this work as follows:

- Agree-bias: We show that LLM responses to the well-known 50-item IPIP Big-Five Markers exhibit patterns that are highly unusual for humans. Concretely, LLMs often answer both items of a reverse-coded pair (e.g., “I am introverted” vs. “I am extraverted”) affirmatively.

- Psychometric validity analysis tools: We present the methods psychometricians use to rigorously investigate measurement invariance, namely EFA, CFA, and reliability analysis. By introducing these methods to the research community, we provide a novel framework for evaluating the application of human psychometrics to LLMs.

- Fundamental limitations of personality assessments in LLMs: Our findings reveal that LLMs fail to replicate the five-factor structure found in samples of human responses. This implies that measurement models valid for humans do not fit LLMs, and that currently applied procedures for administering questionnaires to LLMs do not allow inferences about personality.
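The agree bias can be made concrete with a simple check on reverse-coded item pairs. The sketch below is a hypothetical illustration, not the procedure used in this work: the item IDs, the 1-5 Likert scale, and the rule that answers of 4 or 5 count as agreement are all assumptions. It flags pairs in which both the positively and the negatively keyed item are endorsed, and shows why conventional reverse scoring can hide such contradictions.

```python
# Hypothetical item IDs: each pair holds a positively keyed and a negatively
# keyed item for the same trait (e.g. "I am extraverted" / "I am introverted").
REVERSED_PAIRS = [("E_pos", "E_neg"), ("A_pos", "A_neg")]
SCALE_MIN, SCALE_MAX = 1, 5  # assumed 1-5 Likert scale
AGREE = 4                    # assumed: an answer of 4 or 5 counts as agreeing


def reverse_score(x: int) -> int:
    """Map a negatively keyed answer onto the positive direction (1<->5, 2<->4)."""
    return SCALE_MIN + SCALE_MAX - x


def agree_bias_rate(answers: dict[str, int]) -> float:
    """Fraction of reverse-coded pairs in which BOTH items were endorsed."""
    both_agreed = [
        (pos, neg) for pos, neg in REVERSED_PAIRS
        if answers[pos] >= AGREE and answers[neg] >= AGREE
    ]
    return len(both_agreed) / len(REVERSED_PAIRS)


def trait_score(answers: dict[str, int], pos: str, neg: str) -> float:
    """Conventional trait score: mean of the positive item and the reverse-scored negative item."""
    return (answers[pos] + reverse_score(answers[neg])) / 2


# Hypothetical response patterns, not data from this work.
human_like = {"E_pos": 5, "E_neg": 1, "A_pos": 4, "A_neg": 2}
llm_like = {"E_pos": 5, "E_neg": 4, "A_pos": 4, "A_neg": 5}

print(agree_bias_rate(human_like))  # 0.0
print(agree_bias_rate(llm_like))    # 1.0
# The contradictory answers still average to a plausible mid-range score:
print(trait_score(llm_like, "E_pos", "E_neg"))  # 3.5
```

Averaging a positively keyed item with a reverse-scored negatively keyed one turns "agree with both" into an unremarkable middle value, so inspecting the raw pairs surfaces inconsistencies that aggregate trait scores can mask.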

We hope that this work will guide the analysis and adaptation of other psychometric and educational tests for LLMs.