Sample efficient learning of predictors that complement humans

In this project, we explore how AI systems and human decision-makers can be combined to reduce errors while minimizing the burden on humans. One effective approach is to use a "rejector" that dynamically assigns each prediction to either the AI or the human expert based on their respective strengths. This allows the AI to handle cases where it outperforms the human while deferring to the human on cases where the human is more likely to be correct. By jointly optimizing both the AI and the rejector, we can train the AI to complement human weaknesses and to defer when necessary. Prior empirical studies have shown that this approach can outperform either the AI or the human alone, as humans and AI systems tend to make different types of errors. Humans may be biased toward certain features, while AI models may be constrained by limited training data or limited expressive power. At the same time, humans can leverage contextual information unavailable to the AI, such as privacy-protected data.
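To make the role of the rejector concrete, here is a minimal sketch of how the error of a combined AI-human system could be computed. The function name `system_error`, the toy data, and the threshold rules are all hypothetical and chosen only for illustration; they are not taken from the project.

```python
from typing import Callable, Sequence, Tuple


def system_error(
    xs: Sequence[float],
    ys: Sequence[int],
    human_preds: Sequence[int],
    classifier: Callable[[float], int],
    rejector: Callable[[float], bool],
) -> Tuple[float, float]:
    """Return (error rate of the combined system, fraction of cases deferred).

    rejector(x) == True means the case is sent to the human expert;
    otherwise the classifier's prediction is used.
    """
    errors = 0
    deferred = 0
    for x, y, m in zip(xs, ys, human_preds):
        if rejector(x):
            deferred += 1
            prediction = m
        else:
            prediction = classifier(x)
        errors += int(prediction != y)
    n = len(xs)
    return errors / n, deferred / n


# Toy data (assumed): the AI errs on x = 0.6, the human errs on x = 0.1.
xs = [0.1, 0.2, 0.6, 0.8]
ys = [0, 0, 1, 1]
human_preds = [1, 0, 1, 1]


def classifier(x: float) -> int:
    return 1 if x > 0.7 else 0


def rejector(x: float) -> bool:
    # Defer only in the band where this toy classifier is unreliable.
    return 0.5 <= x <= 0.7


combined = system_error(xs, ys, human_preds, classifier, rejector)
ai_only = system_error(xs, ys, human_preds, classifier, lambda x: False)
human_only = system_error(xs, ys, human_preds, classifier, lambda x: True)
```

On this toy data the AI alone and the human alone each misclassify one of the four cases, while the combined system makes no errors and defers only a quarter of the cases, because the two make their mistakes on different inputs.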

Despite the potential of expert deferral, many existing deployments fail to fully integrate AI and human decision-making. Traditional approaches often train AI models independently and rely on post-hoc deferral mechanisms, such as confidence-based thresholds, to decide when to involve a human expert. This "staged learning" method overlooks the possibility of training AI models while accounting for human strengths and weaknesses, leading to suboptimal decision-making. Recent research has introduced joint training strategies that optimize both the AI classifier and the rejector simultaneously, but we still lack a theoretical understanding of why joint learning performs better than staged learning. In this project, we address three key theoretical challenges in expert deferral: how to allocate model capacity effectively, how to handle the scarcity of human prediction data, and how to optimize AI-human collaboration using surrogate loss functions.
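As an illustration of the post-hoc deferral rule used in staged learning, the sketch below defers whenever the classifier's top class probability falls below a threshold. The function name `confidence_rejector`, the threshold value, and the probabilities are assumptions made for this example.

```python
from typing import List, Sequence


def confidence_rejector(probs: Sequence[float], threshold: float) -> bool:
    """Defer to the human (True) when the top class probability is below threshold."""
    return max(probs) < threshold


# Hypothetical class-probability outputs of an already-trained classifier.
probs_batch: List[List[float]] = [
    [0.90, 0.10],
    [0.55, 0.45],
    [0.30, 0.70],
]

defer_flags = [confidence_rejector(p, threshold=0.6) for p in probs_batch]
```

Note that this rule only looks at the classifier's own confidence. It never consults how accurate the human is on a given input, which is the limitation that joint training of the classifier and rejector is meant to address.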

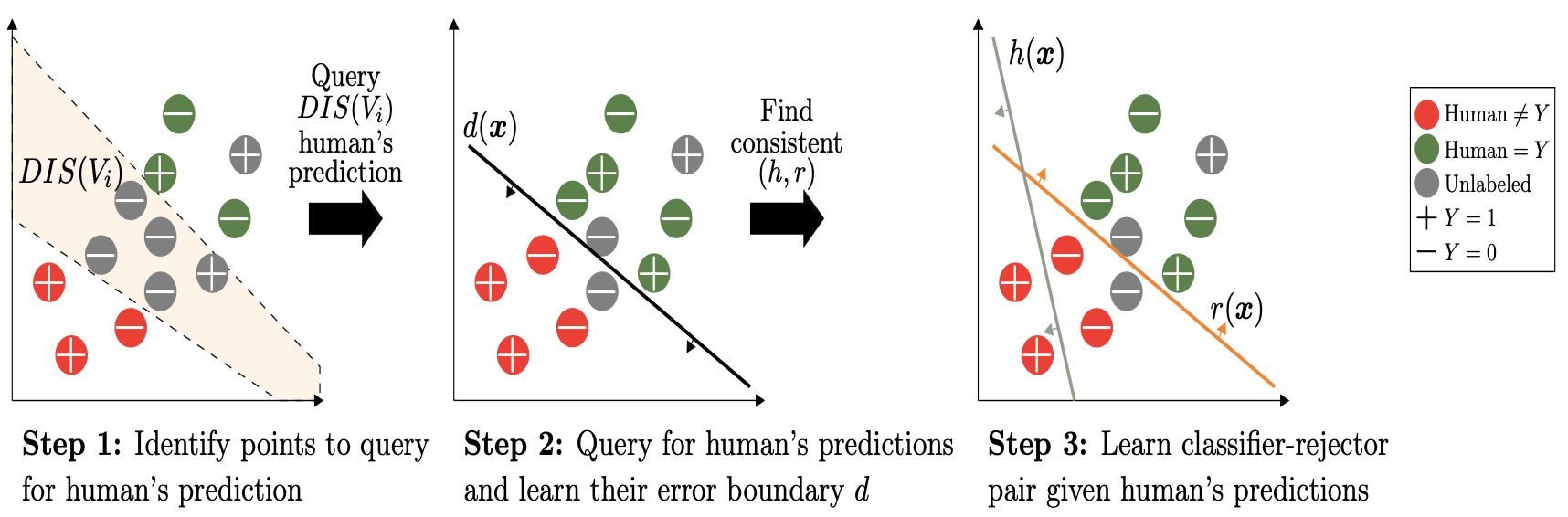

To address these challenges, we propose a theoretical framework that provides insights into joint learning for expert deferral. We first establish bounds on the performance gap between a jointly trained AI-human system and one that trains the AI independently. Next, we introduce a generalized family of surrogate loss functions that extend prior work, providing theoretical guarantees on their performance. Finally, we tackle the challenge of limited human prediction data by designing an active learning scheme that efficiently identifies the decision boundaries of human experts. This approach allows us to develop AI systems that adapt to human strengths and weaknesses with minimal data collection. Our findings contribute to a deeper understanding of AI-human collaboration and provide a foundation for improving expert deferral systems in real-world applications.
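The intuition for why actively chosen queries can reduce the amount of human prediction data needed can be sketched with a toy example. This is not the scheme developed in the project: it assumes a one-dimensional feature, a noiseless human, and a single threshold above which the human is always correct. The names `find_boundary` and `human_is_correct` are hypothetical.

```python
from typing import Callable, Sequence, Tuple


def find_boundary(
    pool: Sequence[int], query: Callable[[int], bool]
) -> Tuple[int, int]:
    """Locate the first pool index where the human is correct, by binary search.

    Assumes pool is sorted and query(x) is False for every x below some
    unknown threshold and True from that threshold on.
    Returns (boundary index, number of human queries used).
    """
    lo, hi = 0, len(pool)
    queries = 0
    while lo < hi:
        mid = (lo + hi) // 2
        queries += 1  # each call to query costs one human prediction
        if query(pool[mid]):
            hi = mid
        else:
            lo = mid + 1
    return lo, queries


# Toy setup (assumed): 100 unlabeled points, human is correct iff x >= 37.
pool = list(range(100))


def human_is_correct(x: int) -> bool:
    return x >= 37


boundary_index, num_queries = find_boundary(pool, human_is_correct)
```

Under these assumptions the boundary is found with a number of human queries that grows logarithmically in the pool size, instead of asking the human about all 100 points. Real experts are noisy and their regions of competence are not simple thresholds, so this should be read only as motivation for query-efficient data collection.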