Causal Representation Learning

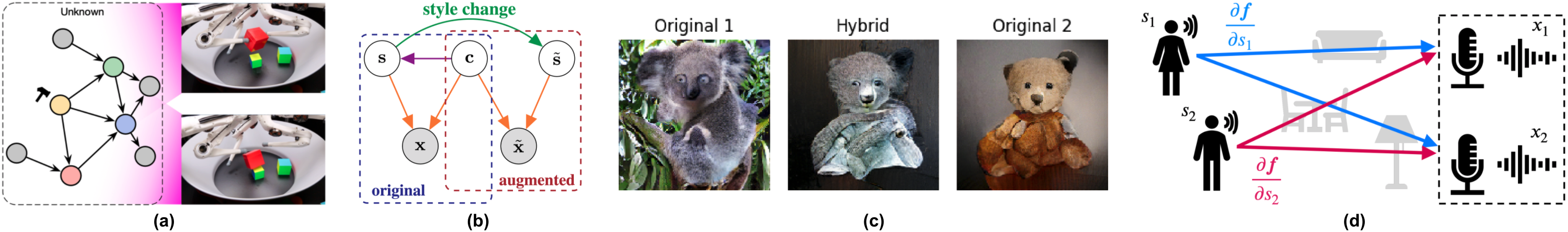

Causal representation learning aims to move beyond purely statistical representations towards causal world models that support intervention and planning; see Fig. (a) [].

Coarse-grained causal models. Defining objects that are related by causal models typically amounts to appropriate coarse-graining of more detailed models of the world (e.g., physical models). Subject to appropriate conditions, causal models can arise, e.g., from coarse-graining of microscopic structural equation models [], ordinary differential equations [], temporally aggregated time series [], or temporal abstractions of recurrent dynamical models []. Although models in economics, medicine, or psychology typically involve variables that are abstractions of more elementary concepts, it is unclear when such coarse-grained variables admit causal models with well-defined interventions; [] provides some sufficient conditions.

Disentanglement. A special case of causal representation learning is disentanglement, or nonlinear ICA, where the latent variables are assumed to be statistically independent. Through theoretical and large-scale empirical study, we have shown that disentanglement is not possible in a purely unsupervised setting [] (ICML'19 best paper). Follow-up works considered a semi-supervised setting [], and showed that disentanglement methods learn dependent latents when trained on correlated data [].
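The impossibility of purely unsupervised disentanglement can be illustrated with a well-known special case. The sketch below assumes independent standard Gaussian latents and an arbitrary, hypothetical decoder (neither is taken from the cited work): rotating the latents yields components that are again independent standard Gaussians, and a decoder that undoes the rotation produces exactly the same observations, so the data alone cannot tell the two latent representations apart.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground-truth latents: independent standard Gaussians.
z = rng.standard_normal((100_000, 2))

# A 45-degree rotation mixes (entangles) the two latent coordinates.
theta = np.pi / 4
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
z_rot = z @ R.T  # each row becomes R @ z_i

# A rotated standard Gaussian is again a standard Gaussian, so the
# entangled coordinates are still independent with unit variance.
cov_rot = np.cov(z_rot, rowvar=False)

def decoder(latents):
    # Arbitrary nonlinear mixing, chosen only for illustration.
    W = np.array([[1.0, 0.5], [-0.3, 2.0]])
    return np.tanh(latents @ W)

# Model A: decoder applied to z.
# Model B: decoder that first undoes the rotation, applied to z_rot.
x_model_a = decoder(z)
x_model_b = decoder(z_rot @ R)  # z_rot @ R recovers z because R is orthogonal

same_observations = np.allclose(x_model_a, x_model_b)
# Each entangled coordinate still depends on both original latents.
corr_with_z0 = np.corrcoef(z_rot[:, 0], z[:, 0])[0, 1]
```

Both models explain the observations equally well, yet `z_rot` is an entangled version of `z`. Additional supervision or assumptions are therefore needed to single out one of them.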

Multi-view learning. Learning with multiple views of the data allows for overcoming the impossibility of purely unsupervised representation learning, as demonstrated through identifiability results for multi-view nonlinear ICA [] and weakly-supervised disentanglement []. This idea also helps explain the impressive empirical success of self-supervised learning with data augmentations: we prove that the latter isolates the invariant part of the representation that is shared across views under arbitrary latent dependence, see Fig. (b) [].
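As a rough illustration of why training on augmented views favours the shared part of the representation, the sketch below uses an InfoNCE-style contrastive loss, a common objective in self-supervised learning. The text above does not name a specific objective, so this choice is an assumption, as are the function name `info_nce`, the temperature, and the toy "content" and "style" variables.

```python
import numpy as np

def info_nce(z1, z2, temperature=0.1):
    """InfoNCE-style loss; row i of z1 and row i of z2 embed two views of sample i."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature                      # pairwise cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))                    # matching pairs lie on the diagonal

rng = np.random.default_rng(0)
n, d = 256, 4
content = rng.standard_normal((n, d))   # hypothetical latent shared by both views
style_a = rng.standard_normal((n, d))   # hypothetical latent changed by the augmentation
style_b = rng.standard_normal((n, d))

# An encoder that outputs only the shared content aligns the two views ...
loss_content = info_nce(content, content)
# ... while an encoder that outputs only the view-specific style does not.
loss_style = info_nce(style_a, style_b)
```

In this toy setting `loss_content` is lower than `loss_style`, which matches the intuition that the objective rewards the invariant, shared part of the representation.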

Learning independent mechanisms. For image recognition, we showed (by competitive training of expert modules) that independent mechanisms can transfer information across different datasets []. In an extension to dynamic systems, learning sparsely communicating, recurrent independent mechanisms (RIMs) led to improved generalization and strong performance on RL tasks []. Similar ideas have been useful for learning object-centric representations and causal generative scene models [].

Extracting causal structure from deep generative models. We have devised methods for analysing deep generative models through a causal lens, e.g., for better extrapolation [] or creating hybridized counterfactual images, see Fig. (c) []. Causal ideas have also led to a new structured decoder architecture [] and new forms of gradient combination to avoid learning spurious correlations [].
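The text does not spell out how gradients are combined. One possible rule, shown here purely as an assumption, is a sign-agreement mask: per-environment gradients are averaged, but components whose signs disagree across environments are set to zero, so that only directions supported by every environment are followed. The function name `and_mask_gradient` and the `agreement` threshold are hypothetical.

```python
import numpy as np

def and_mask_gradient(env_grads, agreement=1.0):
    """Average per-environment gradients, zeroing components with disagreeing signs.

    env_grads: array of shape (n_envs, n_params), one gradient per environment.
    agreement: required |mean sign| in [0, 1]; 1.0 demands unanimous signs.
    """
    env_grads = np.asarray(env_grads, dtype=float)
    sign_agreement = np.abs(np.sign(env_grads).mean(axis=0))
    mask = sign_agreement >= agreement
    return mask * env_grads.mean(axis=0)

# Two environments, three parameters: the second component has opposite signs.
grads = np.array([[1.0, -2.0, 0.5],
                  [0.5,  2.0, 1.5]])
combined = and_mask_gradient(grads)   # -> [0.75, 0.0, 1.0]
```

A plain average would also yield zero for the second component here, but only by coincidence of magnitudes; the mask removes it whenever the environments disagree on the direction.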

New notions of non-statistical independence. To use the principle of independent causal mechanisms as a learning signal, we have proposed two new notions of non-statistical independence: a general group-invariance framework that unifies several previous approaches [], and an orthogonality condition between partial derivatives tailored specifically for unsupervised representation learning, see Fig. (d) [].
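One way to make an orthogonality condition between partial derivatives concrete is to check whether the columns of the Jacobian of a mixing function are mutually orthogonal. The sketch below assumes a square, invertible Jacobian and measures the gap in Hadamard's inequality (the sum of log column norms minus the log absolute determinant), which is zero exactly when the columns are orthogonal. The helper names and the two example mixings are illustrative and not taken from the cited work.

```python
import numpy as np

def jacobian(f, s, eps=1e-6):
    """Central finite-difference Jacobian of f at s; column i is df/ds_i."""
    s = np.asarray(s, dtype=float)
    cols = []
    for i in range(s.size):
        step = np.zeros_like(s)
        step[i] = eps
        cols.append((f(s + step) - f(s - step)) / (2 * eps))
    return np.stack(cols, axis=1)

def orthogonality_gap(f, s):
    """Sum of log column norms minus log|det J|; non-negative, zero iff columns are orthogonal."""
    J = jacobian(f, s)
    _, logabsdet = np.linalg.slogdet(J)
    return np.sum(np.log(np.linalg.norm(J, axis=0))) - logabsdet

def polar(s):
    # Radial and angular partial derivatives are orthogonal everywhere (for r > 0).
    r, phi = s
    return np.array([r * np.cos(phi), r * np.sin(phi)])

def shear(s):
    # A linear shear: the two partial derivatives are not orthogonal.
    return np.array([s[0] + s[1], s[1]])

s0 = np.array([1.5, 0.7])
gap_polar = orthogonality_gap(polar, s0)   # approximately 0
gap_shear = orthogonality_gap(shear, s0)   # approximately 0.5 * log(2)
```

Averaging such a gap over samples of the latents gives a scalar that could serve as a regulariser or diagnostic; whether it matches the exact criterion used in the cited work is not confirmed by the text above.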

Members

Publications