MotionFix: Text-Driven 3D Human Motion Editing

2024

Conference Paper


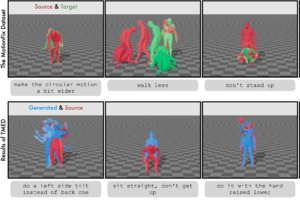

The focus of this paper is 3D motion editing. Given a 3D human motion and a textual description of the desired modification, our goal is to generate an edited motion as described by the text. The challenges include the lack of training data and the design of a model that faithfully edits the source motion. In this paper, we address both of these challenges. We build a methodology to semi-automatically collect a dataset of triplets in the form of (i) a source motion, (ii) a target motion, and (iii) an edit text, and create the new MotionFix dataset. Having access to such data allows us to train a conditional diffusion model that takes both the source motion and the edit text as input. We further build various baselines trained only on datasets of text-motion pairs and show superior performance of our model trained on triplets. We introduce new retrieval-based metrics for motion editing and establish a new benchmark on the evaluation set of MotionFix. Our results are encouraging, paving the way for further research on fine-grained motion generation. Code and models will be made publicly available.
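The triplet format and the idea of a retrieval-based evaluation mentioned in the abstract can be illustrated with a small sketch. Everything below is an assumption made for illustration and is not the authors' code: the `EditTriplet` class and its field layout, the use of cosine similarity over precomputed motion embeddings, and recall@k as the retrieval score are hypothetical stand-ins for whatever the paper actually defines.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class EditTriplet:
    """One example: (source motion, target motion, edit text).

    Motions are stored as frames x features lists (hypothetical layout).
    """
    source: List[List[float]]
    target: List[List[float]]
    edit_text: str


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recall_at_k(generated: Sequence[Sequence[float]],
                gallery: Sequence[Sequence[float]],
                k: int) -> float:
    """Fraction of generated embeddings whose matching gallery item
    (same index) ranks among the k most similar gallery items.

    Embeddings are assumed to come from some motion encoder that is
    not shown here.
    """
    hits = 0
    for i, query in enumerate(generated):
        sims = [cosine(query, item) for item in gallery]
        # Rank of the true match = number of items strictly more similar.
        rank = sum(s > sims[i] for s in sims)
        if rank < k:
            hits += 1
    return hits / len(generated)
```

In this sketch, a higher recall@k means the edited motions land closer to their intended targets than to other motions in the gallery. Ties are resolved in favor of the true match, which is a simplification.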

| Field | Value |
| --- | --- |
| Author(s) | Nikos Athanasiou, Alpár Cseke, Markos Diomataris, Michael J. Black, and Gül Varol |
| Book Title | SIGGRAPH Asia 2024 Conference Proceedings |
| Year | 2024 |
| Month | December |
| Publisher | ACM |
| Department(s) | Perceiving Systems |
| Research Project(s) | Language and Movement; Modeling Human Movement |
| BibTeX Type | Conference Paper (inproceedings) |
| Paper Type | Conference |
| Event Place | Tokyo, Japan |
| State | To be published |
| URL | https://motionfix.is.tue.mpg.de/ |

BibTeX:

    @inproceedings{MotionFix,
      title = {MotionFix: Text-Driven 3D Human Motion Editing},
      author = {Athanasiou, Nikos and Cseke, Alpár and Diomataris, Markos and Black, Michael J. and Varol, G{\"u}l},
      booktitle = {SIGGRAPH Asia 2024 Conference Proceedings},
      publisher = {ACM},
      month = dec,
      year = {2024},
      doi = {},
      url = {https://motionfix.is.tue.mpg.de/},
      month_numeric = {12}
    }
