2024

ei

Rahaman, N., Weiss, M., Wüthrich, M., Bengio, Y., Li, E., Pal, C., Schölkopf, B.

Language Models Can Reduce Asymmetry in Information Markets

arXiv:2403.14443, March 2024, Published as: Redesigning Information Markets in the Era of Language Models, Conference on Language Modeling (COLM) (techreport)

ev

Achterhold, J., Guttikonda, S., Kreber, J. U., Li, H., Stueckler, J.

Learning a Terrain- and Robot-Aware Dynamics Model for Autonomous Mobile Robot Navigation

CoRR abs/2409.11452, 2024, Preprint submitted to Robotics and Autonomous Systems Journal. https://arxiv.org/abs/2409.11452 (techreport) Submitted

lds

Eberhard, O., Vernade, C., Muehlebach, M.

A Pontryagin Perspective on Reinforcement Learning

Max Planck Institute for Intelligent Systems, 2024 (techreport)

lds

Er, D., Trimpe, S., Muehlebach, M.

Distributed Event-Based Learning via ADMM

Max Planck Institute for Intelligent Systems, 2024 (techreport)

ev

Baumeister, F., Mack, L., Stueckler, J.

Incremental Few-Shot Adaptation for Non-Prehensile Object Manipulation using Parallelizable Physics Simulators

CoRR abs/2409.13228, 2024, Submitted to IEEE International Conference on Robotics and Automation (ICRA) 2025 (techreport) Submitted

2023

ei

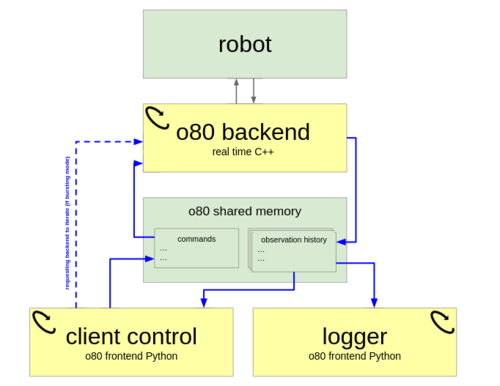

Berenz, V., Widmaier, F., Guist, S., Schölkopf, B., Büchler, D.

Synchronizing Machine Learning Algorithms, Realtime Robotic Control and Simulated Environment with o80

Robot Software Architectures Workshop (RSA) 2023, ICRA, 2023 (techreport)

2022

dlg

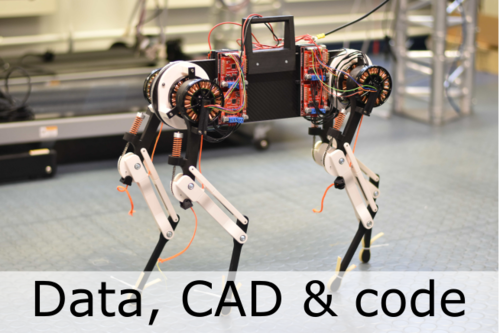

Ruppert, F., Badri-Spröwitz, A.

Learning Plastic Matching of Robot Dynamics in Closed-Loop Central Pattern Generators: Data

Edmond, May 2022 (techreport)

dlg

pi

Badri-Spröwitz, A., Sarvestani, A. A., Sitti, M., Daley, M. A.

Data for BirdBot Achieves Energy-Efficient Gait with Minimal Control Using Avian-Inspired Leg Clutching

Edmond, March 2022 (techreport)

ev

Li, H., Stueckler, J.

Observability Analysis of Visual-Inertial Odometry with Online Calibration of Velocity-Control Based Kinematic Motion Models

CoRR abs/2204.06651, 2022 (techreport)

2021

re

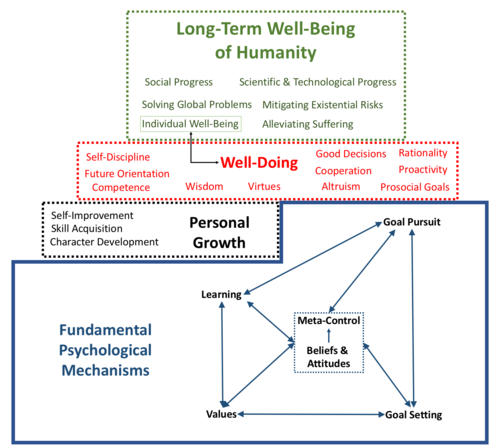

Lieder, F., Prentice, M., Corwin-Renner, E.

Toward a Science of Effective Well-Doing

May 2021 (techreport)

2020

re

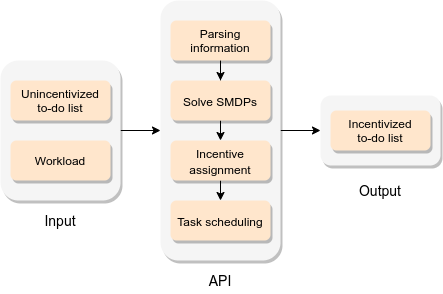

Stojcheski, J., Felso, V., Lieder, F.

Optimal To-Do List Gamification

ArXiv Preprint, 2020 (techreport)

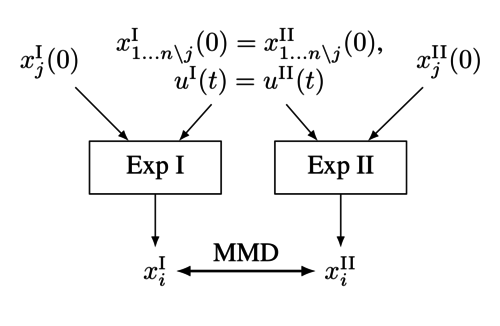

ics

Baumann, D., Solowjow, F., Johansson, K. H., Trimpe, S.

Identifying Causal Structure in Dynamical Systems

2020 (techreport)

2018

ev

Ma, L., Stueckler, J., Wu, T., Cremers, D.

Detailed Dense Inference with Convolutional Neural Networks via Discrete Wavelet Transform

arXiv:1808.01834, 2018 (techreport)

slt

Keriven, N., Garreau, D., Poli, I.

NEWMA: a new method for scalable model-free online change-point detection

2018 (techreport)

2016

am

ics

Ebner, S., Trimpe, S.

Supplemental material for ‘Communication Rate Analysis for Event-based State Estimation’

Max Planck Institute for Intelligent Systems, January 2016 (techreport)

2015

am

ics

Trimpe, S.

Distributed Event-based State Estimation

Max Planck Institute for Intelligent Systems, November 2015 (techreport)

ei

Abbott, T., Abdalla, F. B., Allam, S., Amara, A., Annis, J., Armstrong, R., Bacon, D., Banerji, M., Bauer, A. H., Baxter, E., et al.

Cosmology from Cosmic Shear with DES Science Verification Data

arXiv preprint arXiv:1507.05552, 2015 (techreport)

ei

Jarvis, M., Sheldon, E., Zuntz, J., Kacprzak, T., Bridle, S. L., Amara, A., Armstrong, R., Becker, M. R., Bernstein, G. M., Bonnett, C., et al.

The DES Science Verification Weak Lensing Shear Catalogs

arXiv preprint arXiv:1507.05603, 2015 (techreport)

2014

ps

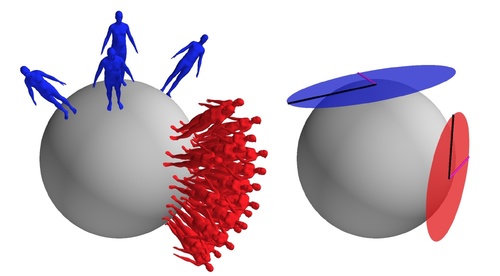

Freifeld, O., Hauberg, S., Black, M. J.

Model transport: towards scalable transfer learning on manifolds - supplemental material

(9), April 2014 (techreport)

2013

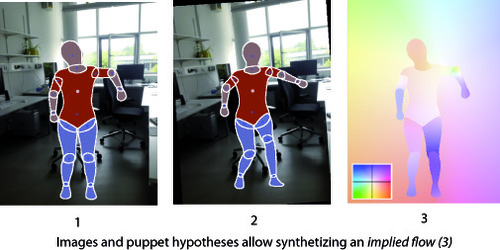

ps

Zuffi, S., Black, M. J.

Puppet Flow

(7), Max Planck Institute for Intelligent Systems, October 2013 (techreport)

am

Sankaran, B., Ghazvininejad, M., He, X., Kale, D., Cohen, L.

Learning and Optimization with Submodular Functions

ArXiv, May 2013 (techreport)

ps

Sun, D., Roth, S., Black, M. J.

A Quantitative Analysis of Current Practices in Optical Flow Estimation and the Principles Behind Them

(CS-10-03), Brown University, Department of Computer Science, January 2013 (techreport)

ei

pn

Hennig, P.

Animating Samples from Gaussian Distributions

(8), Max Planck Institute for Intelligent Systems, Tübingen, Germany, 2013 (techreport)

ei

Hogg, D. W., Angus, R., Barclay, T., Dawson, R., Fergus, R., Foreman-Mackey, D., Harmeling, S., Hirsch, M., Lang, D., Montet, B. T., Schiminovich, D., Schölkopf, B.

Maximizing Kepler science return per telemetered pixel: Detailed models of the focal plane in the two-wheel era

arXiv:1309.0653, 2013 (techreport)

ei

Montet, B. T., Angus, R., Barclay, T., Dawson, R., Fergus, R., Foreman-Mackey, D., Harmeling, S., Hirsch, M., Hogg, D. W., Lang, D., Schiminovich, D., Schölkopf, B.

Maximizing Kepler science return per telemetered pixel: Searching the habitable zones of the brightest stars

arXiv:1309.0654, 2013 (techreport)

2012

ps

Hirshberg, D., Loper, M., Rachlin, E., Black, M. J.

Coregistration: Supplemental Material

(No. 4), Max Planck Institute for Intelligent Systems, October 2012 (techreport)

ps

Freifeld, O., Black, M. J.

Lie Bodies: A Manifold Representation of 3D Human Shape. Supplemental Material

(No. 5), Max Planck Institute for Intelligent Systems, October 2012 (techreport)

ps

Butler, D. J., Wulff, J., Stanley, G. B., Black, M. J.

MPI-Sintel Optical Flow Benchmark: Supplemental Material

(No. 6), Max Planck Institute for Intelligent Systems, October 2012 (techreport)

ei

Grosse-Wentrup, M., Schölkopf, B.

High Gamma-Power Predicts Performance in Brain-Computer Interfacing

(3), Max-Planck-Institut für Intelligente Systeme, Tübingen, February 2012 (techreport)

2011

ei

Seldin, Y., Laviolette, F., Shawe-Taylor, J., Peters, J., Auer, P.

PAC-Bayesian Analysis of Martingales and Multiarmed Bandits

Max Planck Institute for Biological Cybernetics, Tübingen, Germany, May 2011 (techreport)

ei

Schuler, C., Hirsch, M., Harmeling, S., Schölkopf, B.

Non-stationary Correction of Optical Aberrations

(1), Max Planck Institute for Intelligent Systems, Tübingen, Germany, May 2011 (techreport)

ei

Nickisch, H., Seeger, M.

Multiple Kernel Learning: A Unifying Probabilistic Viewpoint

Max Planck Institute for Biological Cybernetics, March 2011 (techreport)

ei

Langovoy, M., Wittich, O.

Multiple testing, uncertainty and realistic pictures

(2011-004), EURANDOM, Technische Universiteit Eindhoven, January 2011 (techreport)

ei

Sra, S.

Nonconvex proximal splitting: batch and incremental algorithms

(2), Max Planck Institute for Intelligent Systems, Tübingen, Germany, 2011 (techreport)

2010

ei

Langovoy, M., Wittich, O.

Computationally efficient algorithms for statistical image processing: Implementation in R

(2010-053), EURANDOM, Technische Universiteit Eindhoven, December 2010 (techreport)

ei

Seeger, M., Nickisch, H.

Fast Convergent Algorithms for Expectation Propagation Approximate Bayesian Inference

Max Planck Institute for Biological Cybernetics, December 2010 (techreport)

ei

Seldin, Y.

A PAC-Bayesian Analysis of Graph Clustering and Pairwise Clustering

Max Planck Institute for Biological Cybernetics, Tübingen, Germany, September 2010 (techreport)

ei

Tandon, R., Sra, S.

Sparse nonnegative matrix approximation: new formulations and algorithms

(193), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, September 2010 (techreport)

ei

Langovoy, M., Wittich, O.

Robust nonparametric detection of objects in noisy images

(2010-049), EURANDOM, Technische Universiteit Eindhoven, September 2010 (techreport)

ei

Seeger, M., Nickisch, H.

Large Scale Variational Inference and Experimental Design for Sparse Generalized Linear Models

Max Planck Institute for Biological Cybernetics, August 2010 (techreport)

ei

Jegelka, S., Bilmes, J.

Cooperative Cuts for Image Segmentation

(UWEETR-1020-0003), University of Washington, Seattle, WA, USA, August 2010 (techreport)

ei

Barbero, A., Sra, S.

Fast algorithms for total-variation based optimization

(194), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, August 2010 (techreport)

ei

Nickisch, H., Rasmussen, C.

Gaussian Mixture Modeling with Gaussian Process Latent Variable Models

Max Planck Institute for Biological Cybernetics, June 2010 (techreport)

ei

Sra, S.

Generalized Proximity and Projection with Norms and Mixed-norms

(192), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, May 2010 (techreport)

ei

Jegelka, S., Bilmes, J.

Cooperative Cuts: Graph Cuts with Submodular Edge Weights

(189), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, March 2010 (techreport)

ei

Steudel, B., Ay, N.

Information-theoretic inference of common ancestors

Computing Research Repository (CoRR), abs/1010.5720, pages: 18, 2010 (techreport)

2009

ei

Nickisch, H., Kohli, P., Rother, C.

Learning an Interactive Segmentation System

Max Planck Institute for Biological Cybernetics, December 2009 (techreport)

ei

Harmeling, S., Sra, S., Hirsch, M., Schölkopf, B.

An Incremental GEM Framework for Multiframe Blind Deconvolution, Super-Resolution, and Saturation Correction

(187), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, November 2009 (techreport)

ei

Hirsch, M., Sra, S., Schölkopf, B., Harmeling, S.

Efficient Filter Flow for Space-Variant Multiframe Blind Deconvolution

(188), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, November 2009 (techreport)

ei

Gretton, A., Györfi, L.

Consistent Nonparametric Tests of Independence

(172), Max Planck Institute for Biological Cybernetics, Tübingen, Germany, July 2009 (techreport)