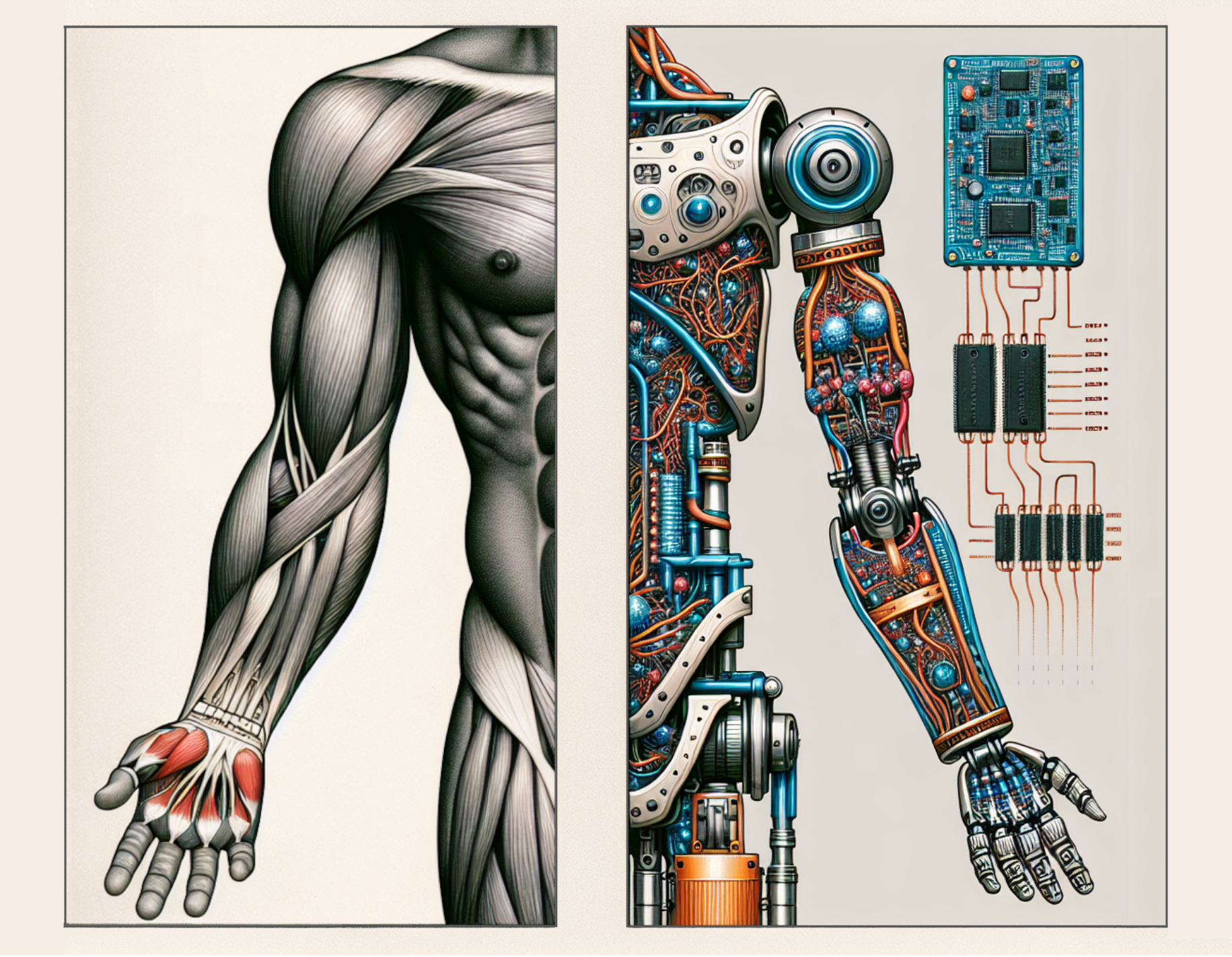

Learning with Muscles

Biological systems rely on muscles for actuation. Do muscles offer advantages over electric motors when it comes to learning and closed-loop control of behavior? We explored this question and found that muscles can improve both learning speed and robustness []. These results point to the significant potential of muscle-like actuator morphology for natural and robust motion, although so far they have been validated only in simulation.

How can these findings be transferred to real robots? Physical artificial muscles are not yet mature, nor are they easy to integrate into robotic systems. In this study, we therefore emulated muscle actuator properties in real time using affordable modern electric motors, which allowed us to run a simplified muscle model on a real robot controlled by a learned policy. Our results suggest that artificial muscles could be highly advantageous actuators for future generations of robust legged robots. We are continuing to investigate which muscle properties matter most for improving learning speed and behavioral robustness.
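As a rough illustration of what emulating a simplified muscle model on an electric motor can look like, here is a minimal sketch assuming a generic Hill-type formulation: first-order activation dynamics, a Gaussian force-length curve, a hyperbolic force-velocity curve, and an antagonistic muscle pair acting on one joint through a constant moment arm. The names `MuscleParams`, `AntagonisticMuscleJoint`, `force_length` and `force_velocity`, as well as all numeric values, are hypothetical and are not the model or parameters used in the study. The policy would output muscle excitations rather than torques, and the returned value would be sent to the motor as a torque command.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class MuscleParams:
    # Illustrative values only (assumption); not taken from the study above.
    f_max: float = 50.0       # peak isometric force [N]
    l_opt: float = 0.10       # optimal muscle length [m]
    v_max: float = 1.0        # maximum shortening velocity [m/s]
    fl_width: float = 0.5     # width of the Gaussian force-length curve
    moment_arm: float = 0.02  # constant moment arm about the joint [m]
    tau_act: float = 0.02     # activation time constant [s]
    max_torque: float = 2.0   # motor torque limit [N m]


def force_length(l_norm: float, width: float) -> float:
    """Active force-length factor: 1.0 at optimal length, decaying on both sides."""
    return math.exp(-(((l_norm - 1.0) / width) ** 2))


def force_velocity(v_norm: float) -> float:
    """Hill-style factor; v_norm < 0 is shortening, v_norm > 0 is lengthening."""
    if v_norm <= -1.0:
        return 0.0  # shortening at or above v_max yields no active force
    if v_norm < 0.0:
        return (1.0 + v_norm) / (1.0 - 4.0 * v_norm)  # hyperbolic drop while shortening
    return 1.0 + 0.5 * v_norm / (v_norm + 0.25)  # rises toward 1.5 while lengthening


class AntagonisticMuscleJoint:
    """Hypothetical emulator: two muscle excitations in, one motor torque out."""

    def __init__(self, params: Optional[MuscleParams] = None):
        self.p = params or MuscleParams()
        self.act = [0.0, 0.0]  # activations of [flexor, extensor]

    def _muscle_force(self, act: float, length: float, velocity: float) -> float:
        # Active force only; passive elastic forces are omitted for brevity.
        fl = force_length(length / self.p.l_opt, self.p.fl_width)
        fv = force_velocity(velocity / self.p.v_max)
        return self.p.f_max * act * fl * fv

    def step(self, excitations: Sequence[float], q: float, qdot: float, dt: float) -> float:
        r = self.p.moment_arm
        # First-order activation dynamics with excitations clipped to [0, 1].
        for i, u in enumerate(excitations):
            u = min(max(u, 0.0), 1.0)
            self.act[i] += dt / self.p.tau_act * (u - self.act[i])
        # The flexor shortens as the joint angle q increases; the extensor lengthens.
        f_flex = self._muscle_force(self.act[0], self.p.l_opt - r * q, -r * qdot)
        f_ext = self._muscle_force(self.act[1], self.p.l_opt + r * q, r * qdot)
        torque = r * (f_flex - f_ext)
        # Respect the motor's torque limit.
        return min(max(torque, -self.p.max_torque), self.p.max_torque)
```

In a control loop, `step` would be called once per tick with the latest joint angle and velocity. One design consequence of this formulation is that the force-velocity curve makes the emulated muscle weaker while it shortens quickly, which acts as built-in velocity-dependent damping; whether this is among the properties that matter most is exactly the kind of question the text says is still under investigation.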

Understanding muscle control is critical not only for robotics but also for advancing our understanding of human motor control, with promising implications for the development of assistive devices and improved therapies for patients. So far, learning to control systems with as many degrees of freedom as biological ones has relied primarily on imitation learning, that is, on mimicking recorded biological data.

A major challenge we addressed was developing exploration methods and reinforcement learning (RL) algorithms that scale to simulated systems with many muscles. We first applied our method to an ostrich model and a simple humanoid model []. The effort paid off: we can now achieve human-like walking in several high-dimensional muscle-driven models using only generic reward terms []. This paves the way for analyzing muscle patterns in patients with motor deficits and for jointly optimizing assistive devices such as exoskeletons.