Model-based Reinforcement Learning and Planning

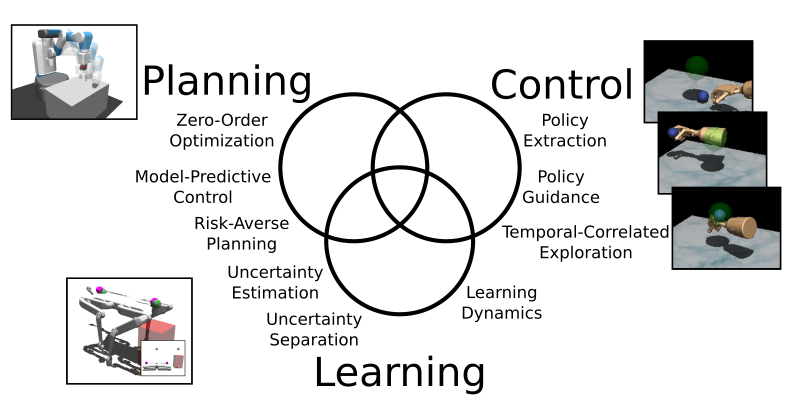

The goal of this project is to bring efficient learning-based control methods to real robots. A promising route to learning from only a few trials is to split the problem in two: first train a model of the robot's interactions with the environment, then use model-based reinforcement learning or model-based planning to control the robot. In a first work, we used our equation learning framework as the forward model and obtained unparalleled performance on a cart-pendulum system []. However, we found that this approach does not yet scale to relevant robotic systems.
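The two-stage idea (first learn a forward model, then use it for control) can be illustrated with a minimal sketch. This is not the equation learning framework: as a stand-in, it assumes linear dynamics `x' = A x + B u` fitted by least squares, and the names `fit_linear_model`, `rollout`, `A_true` and `B_true` are hypothetical.

```python
import numpy as np

def fit_linear_model(states, actions, next_states):
    """Fit x' = A x + B u by least squares (a stand-in for a learned forward model)."""
    inputs = np.hstack([states, actions])            # rows are [x, u]
    theta, *_ = np.linalg.lstsq(inputs, next_states, rcond=None)
    n = states.shape[1]
    return theta[:n].T, theta[n:].T                  # A, B

def rollout(A, B, x0, action_seq):
    """Predict the state trajectory that the fitted model assigns to an action sequence."""
    xs = [x0]
    for u in action_seq:
        xs.append(A @ xs[-1] + B @ u)
    return np.array(xs)

# Toy data from a hypothetical, noise-free double-integrator-like system.
rng = np.random.default_rng(0)
A_true = np.array([[1.0, 0.1], [0.0, 1.0]])
B_true = np.array([[0.0], [0.1]])
states = rng.standard_normal((50, 2))
actions = rng.standard_normal((50, 1))
next_states = states @ A_true.T + actions @ B_true.T

A_hat, B_hat = fit_linear_model(states, actions, next_states)
trajectory = rollout(A_hat, B_hat, np.zeros(2), np.ones((5, 1)))
```

A planner or policy learner would then query `rollout` instead of the real system, which is what makes learning from a few real trials plausible.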

A widely used method in model-based reinforcement learning is the Cross-Entropy Method (CEM), a zero-order optimization scheme for computing good action sequences. In [] we improved this method by replacing the uncorrelated sampling of actions with temporally correlated sampling (colored noise). As a result, we reduced the computational cost of CEM by a factor of 3-22 while increasing performance by up to 10 times on a variety of challenging robotic tasks (see figure).
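The following is a minimal sketch of CEM with colored-noise action sampling, not the published implementation. It assumes actions bounded in [-1, 1], a power-law spectrum `1/f**beta` generated via the FFT, and a toy tracking cost; the function names, the `beta` parameter, and all hyperparameters are illustrative assumptions.

```python
import numpy as np

def colored_noise(beta, shape, rng):
    """Noise whose power spectrum falls off as 1/f**beta along the last axis.

    beta = 0 gives (approximately) white noise; larger beta gives smoother,
    more temporally correlated sequences. Requires shape[-1] >= 2.
    """
    horizon = shape[-1]
    freqs = np.fft.rfftfreq(horizon)
    freqs[0] = freqs[1]                              # avoid division by zero at DC
    scale = freqs ** (-beta / 2.0)
    spec_shape = tuple(shape[:-1]) + (len(freqs),)
    spectrum = scale * (rng.standard_normal(spec_shape)
                        + 1j * rng.standard_normal(spec_shape))
    noise = np.fft.irfft(spectrum, n=horizon, axis=-1)
    return noise / (noise.std(axis=-1, keepdims=True) + 1e-8)

def cem_plan(cost_fn, horizon, action_dim, rng, beta=2.0,
             iterations=15, population=256, elite_frac=0.1):
    """Zero-order search for an action sequence of shape (action_dim, horizon)."""
    mean = np.zeros((action_dim, horizon))
    std = np.ones((action_dim, horizon))
    n_elite = max(1, int(population * elite_frac))
    for _ in range(iterations):
        eps = colored_noise(beta, (population, action_dim, horizon), rng)
        candidates = np.clip(mean + std * eps, -1.0, 1.0)
        costs = np.array([cost_fn(a) for a in candidates])
        elites = candidates[np.argsort(costs)[:n_elite]]
        # Refit the sampling distribution to the lowest-cost candidates.
        mean, std = elites.mean(axis=0), elites.std(axis=0) + 1e-6
    return mean

# Toy problem (hypothetical): track a smooth target action profile.
rng = np.random.default_rng(0)
target = 0.5 * np.sin(np.linspace(0.0, 2.0 * np.pi, 20))
cost = lambda a: float(np.sum((a - target) ** 2))
plan = cem_plan(cost, horizon=20, action_dim=1, rng=rng)
```

In a model-based setting, `cost_fn` would roll each candidate sequence through the learned dynamics model and sum the predicted costs. Setting `beta=0` recovers the usual uncorrelated sampling for comparison.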

Even with our improvements, it remains challenging to run such a model-based planning method in real time on a robot with a high update frequency. We therefore set out to extract neural network policies from the data generated by a model-based planning algorithm. This turns out to be a challenging task, since naive policy learning methods fail to learn from such a stochastic planning algorithm. We have proposed an adaptive guided policy search method [] that is able to distill strong policies for challenging simulated robotics tasks.

The next step toward running these algorithms on a real robot is to make them risk-aware. We extend the learned dynamics models with the ability to estimate their own prediction uncertainty. Specifically, the models distinguish between uncertainty due to lack of data and uncertainty due to inherent unpredictability (noise). We demonstrate on several continuous control tasks how this enables active learning and risk-averse planning that avoids dangerous situations []. This is an important step towards safe reinforcement learning on real hardware.
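One common way to separate the two kinds of uncertainty, shown here as a sketch and not necessarily the construction used in the paper, is an ensemble of probabilistic models: the average predicted variance captures noise, while disagreement between the members' means captures lack of data. The function names and the weights `w_noise` and `w_data` below are hypothetical.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split ensemble predictions of shape (n_models, ...) into two variance terms.

    By the law of total variance for a uniform mixture of the members,
    total variance = mean of member variances + variance of member means.
    """
    noise_var = variances.mean(axis=0)   # inherent unpredictability (noise)
    data_var = means.var(axis=0)         # disagreement due to lack of data
    return noise_var, data_var

def risk_adjusted_cost(expected_cost, noise_var, data_var, w_noise=1.0, w_data=1.0):
    """Hypothetical planning cost: positive weights penalize uncertain outcomes.

    A negative w_data would instead reward poorly explored regions,
    which is one way to phrase active learning.
    """
    return expected_cost + w_noise * np.sqrt(noise_var) + w_data * np.sqrt(data_var)

# Two ensemble members predicting two quantities.
means = np.array([[1.0, 2.0], [1.0, 4.0]])
variances = np.array([[0.1, 0.1], [0.3, 0.1]])
noise_var, data_var = decompose_uncertainty(means, variances)
adjusted = risk_adjusted_cost(np.zeros(2), noise_var, data_var)
```

In this example the members agree on the first quantity, so its uncertainty is attributed entirely to noise, while their disagreement on the second quantity dominates its total variance.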

[] Pinneri, C., Sawant, S., Blaes, S., Achterhold, J., Stueckler, J., Rolinek, M., Martius, G. Sample-efficient Cross-Entropy Method for Real-time Planning. In Conference on Robot Learning 2020, 2020.

[] Pinneri*, C., Sawant*, S., Blaes, S., Martius, G. Extracting Strong Policies for Robotics Tasks from Zero-order Trajectory Optimizers. In 9th International Conference on Learning Representations (ICLR 2021), May 2021.

[] Vlastelica*, M., Blaes*, S., Pinneri, C., Martius, G. Risk-Averse Zero-Order Trajectory Optimization. In 5th Annual Conference on Robot Learning, November 2021.

Members

Publications