Kernel Methods and Probabilistic Modeling

Neural networks have become the default machine learning models in many domains. Although it is no longer our main focus, the study of kernel methods is still thriving. In addition to directly representing functions of interest, they are used, for instance, in a supporting role in training algorithms for neural networks. Due to their sample-efficiency, closed-form solutions and desirable theoretical properties, kernel methods have become powerful tools in this domain to understand and design learning algorithms beyond empirical risk minimization.

Empirical likelihood estimators

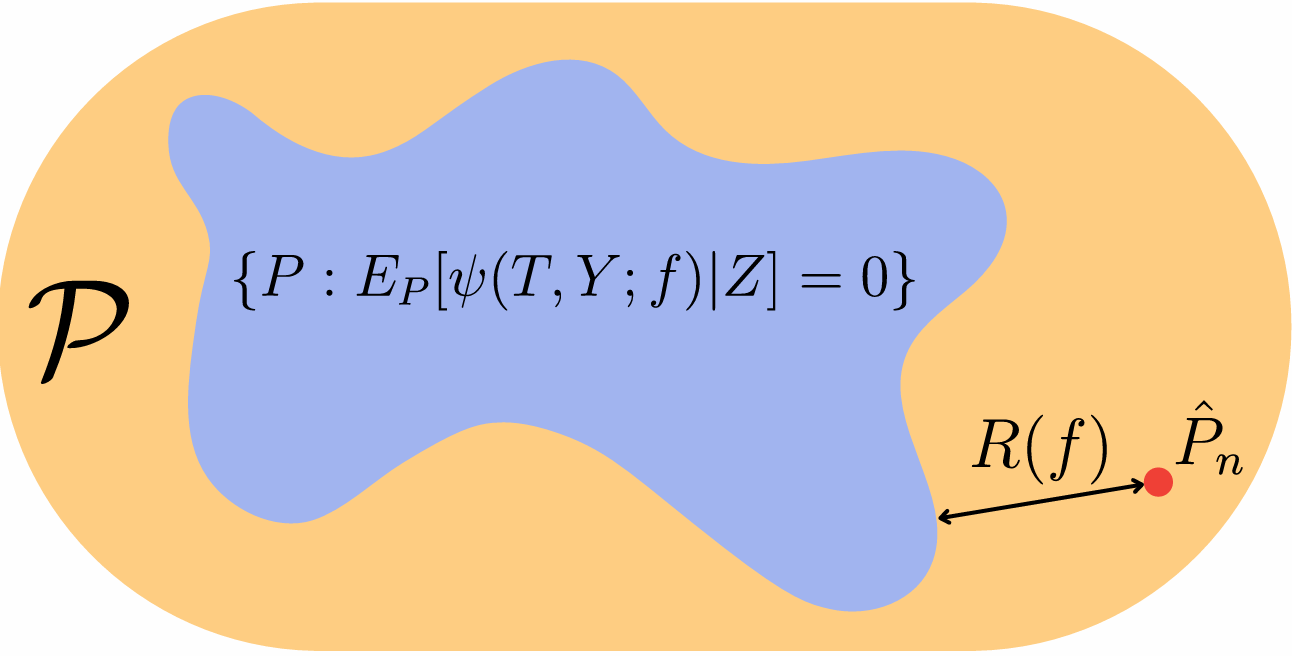

We developed a series of estimators for robust and causal learning based on the empirical likelihood (EL) framework. This method learns a model along with a non-parametric approximation of the data distribution, by minimizing a distributional distance metric under task-specific constraints. These constraints may be infinite-dimensional yet allow a reformulation using our methods of embedding probability distributions in reproducing kernel Hilbert spaces.

The framework subsumes empirical risk minimization while also allowing robustification against distribution shifts and incorporation of causal information, e.g., via instrumental variable regression. We developed such estimators in the machine learning context, based on f-divergences [], maximum mean discrepancy (MMD) [

] and optimal transport [

].

Distributional robust machine learning and optimization

Dual to empirical likelihood estimation is the problem of distributionally robust optimization (DRO), which addresses distribution shifts between train and test datasets by optimizing the model performance with respect to the worst-case distribution in a so-called ambiguity set around the training data. Using kernel-based distance metrics, we developed principled DRO approaches for robust learning under distribution shifts [] and chance-constrained stochastic optimization [

].

Kernel methods

Our kernel-based independence tests were applied to functional data in the context of causal structure learning []. We also continued the theoretical study of kernel distribution discrepancies, characterizing maximum mean discrepancies (MMD) that metrize the weak convergence of probability measures [

]. Finally, we studied kernel interpolation and excluded benign overfitting in fixed dimension for a class of kernels whose RKHS is a Sobolev space [

].

Scalable Model Selection for Deep Learning

The empirical Bayesian approach to model selection relies on the marginal likelihood and balances data fit and model complexity. While this approach is standard for small probabilistic models like Gaussian processes, it had not been successfully used for deep neural networks. Its advantage, however, is that it allows gradient-based optimization of hyperparameters. In [], we show that this is possible during training of a deep neural network using scalable Laplace and Hessian approximations. Using a kernel view of these approximations, we further significantly reduce their cost by deriving lower bounds that enable model selection using efficient stochastic gradient descent [

].

Advanced Applications of Bayesian Model Selection

We validate the proposed model selection methods on different problems and find that optimizing tens to millions of hyperparameters using marginal likelihood gradients can help improve models and enable new capabilities. For example, in [], we show how these methods can be used to learn invariances from data without supervision, akin to automatic data augmentation, improving over previous methods. In [

], we use a combination of gradient-based and discrete model selection to probe representations for linguistic properties and thereby overcome limitations of prior probing approaches, such as the dependence on the selected probing model. The ability to optimize millions of regularization hyperparameters further enables learning sparse models by Automatic Relevance Determination (ARD). We operationalize ARD to learn sparse factor models that integrate and balance data from different biomedical modalities, leading to improved data efficiency [

]. We successfully use the same mechanism in deep learning to select features [

], equivariances [

], and promote sparsity [

].