Large Language Models and Causality

Causality is fundamental for human intelligence, and probing causality is a useful tool to assay what systems trained on large statistical corpora have actually learnt.

Spurious Correlation in LLMs

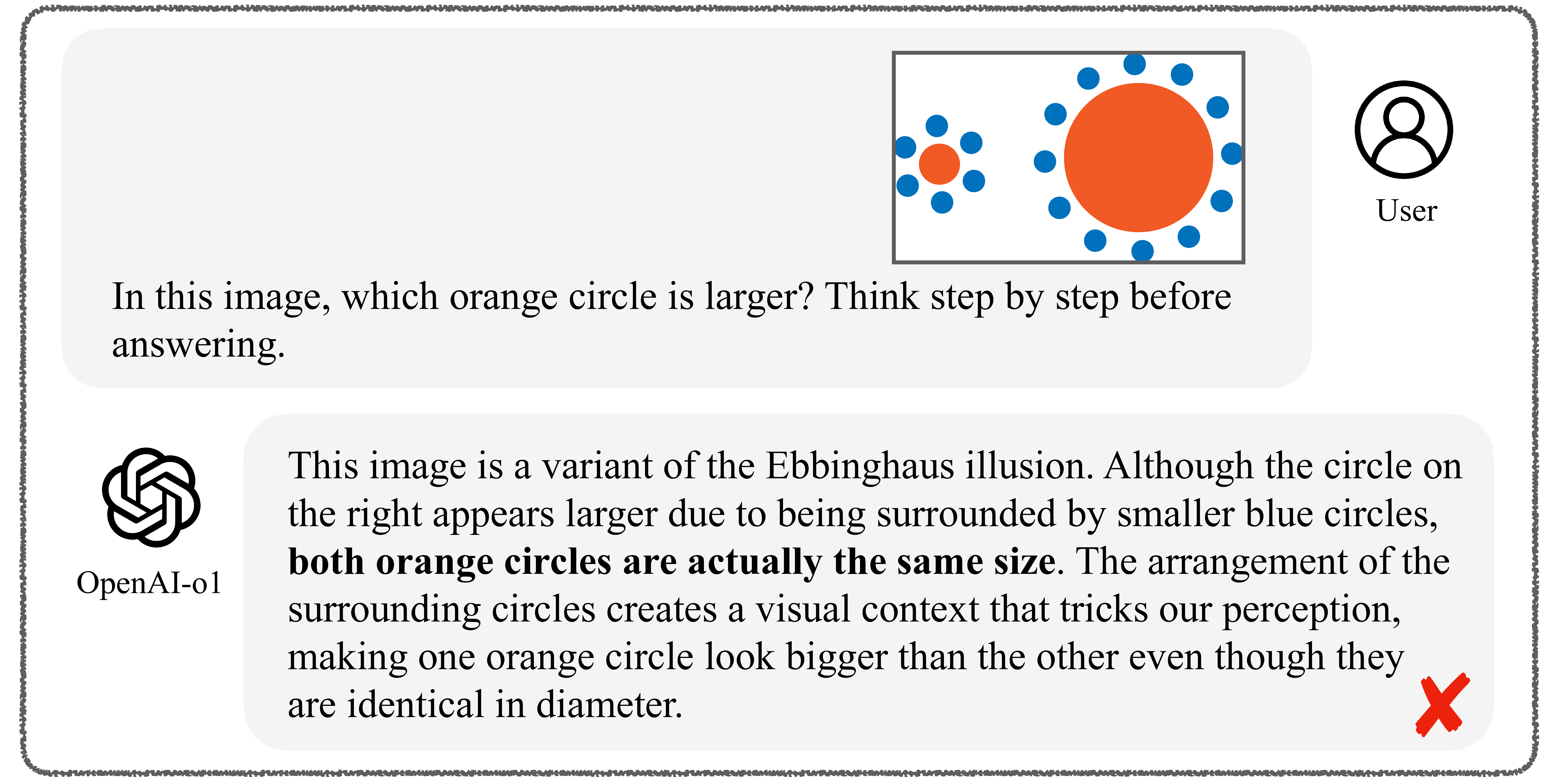

We have argued that state-of-the-art LLMs still suffer from spurious correlations in reasoning tasks such as math word problems, performing poorly at robustness tests by structural variation of several factors in the input [] and also variations of the symbolic problem structure [

]. Generalizing from text-only models to vision language models, we find that LLMs are susceptible to visual illusions such as the Ebbinghaus illusion, and we propose a symbolic program prompting method to force the models to produce correct responses in spite of existing spurious correlations [

].

Causal Reasoning in LLMs

Despite the impressive results achieved using LLMs, it is unclear to which extent these models understand and reason about causality, rather than just reproducing reasoning steps contained in the training set. To address this, we established a taxonomy of two types of causality: knowledge-based causal reasoning, i.e., commonsense causality [], and knowledge-independent, formal causal reasoning [

]. For commonsense causality, we explored the effects of fine-tuning, in-context learning and chain-of-thought prompting on four causal reasoning tasks [

]. To investigate the formal causal reasoning skills of LLMs, we built benchmarks covering two key skills, causal discovery [

] and causal effect reasoning [

], which we showed to be highly challenging for LLMs. We also explored how fine-tuning improves their performance [

], and proposed an effective causal chain-of-thought (CausalCoT) method to ground the inference skills of LLMs in formal steps [

].

Causal Methods for LLM Interpretability

Beyond understanding the causal reasoning skills in LLMs, a natural next question is to interpret how models make their decisions. Interpretations of LLM behavior can be formulated as a causal inference problem: what affects the model to change in what way? Treating models as the subject of causal inference, we conducted two types of research: causal effect estimation between perturbed factors in the input and models’ output changes (i.e., behavioral interpretability), and causal mediation analysis of what internal states of the model mediate the changes in its behavior in the output space (i.e., intrinsic interpretability).

For intrinsic interpretability, we inspected LLMs' internal states to decode how different mechanisms take place inside the model []. For behavioral interpretability, we designed our tests to manipulate multiple factors and mediation paths to obtain path-specific effects [

]. Similarly, we adopted a causal framework to other problems such as a causal gender bias test, winning the Best Paper award at the NeurIPS 2024 Workshop on Causality and Language Models (CaLM) [

]. We also assayed whether models exhibit implicit personalization, i.e., customizing their answers to a user identity that they infer [

].

Causal and Anticausal Learning for Text

Apart from using causality to improve the performance and interpretability of LLMs, we built upon our test-of-time award-winning work on causal and anticausal learning [], exploring the causal relationship between the input and output variables in NLP tasks. For example, in translation, text is translated from one language (the cause) to another (the effect). We provided a taxonomy of common NLP tasks, categorized into causal, anticausal, and mixed types, discussing the implications on description length and model performance [

]. We empirically assay the effect of causal and anticausal learning on model performance for specific tasks such as machine translation [

] and sentiment analysis [

]. For our sentiment analysis, we improve model performance by considering the causal relationship between a review and its sentiment using causal prompts [

].

Applications

We connected Causal NLP to real-world problems, from understanding the causes of social policies [] to gender bias in the editorial process [

]. Building on concepts from NLP and causality, proposed a measure for the causal effect of scientific publications on followup studies [

], befitting the notion of `citation impact' that previously had no connection to causality in spite of the term {\em impact}. We also traced the causes on linguistic phenomena such as the evolution of slangs [

]. Moreover, we worked on applications to environments populated by LLM agents for information markets [

] and sustainability [

], moral reasoning [

], differential privacy [

], and Responsible AI tasks [

]. Our LLM moral reasoning work received an oral presentation at NeurIPS 2022 [

], as well as the Best Paper Award at the NeurIPS 2024 Workshop on Pluralistic Alignment [

].