Robotics

In robotics, autonomous human-level decision-making in complex environments remains a crucial unresolved challenge. Although learning methods for AI have advanced considerably in recent years, robots have yet to achieve the performance observed in computer vision and natural language processing tasks. This is due to a number of factors, including the cost of acquiring large training sets, the complexity of dynamic interaction with the real world, and the effort involved in designing and maintaining hardware and creating reproducible setups. Our department pursues several key directions to bridge this gap. While we focus on algorithmic developments to refine and accelerate skill acquisition, we also develop hardware that broadens robotic capabilities beyond those of commercially available systems. This reflects the reality that, when interacting with the real world, pre-recorded datasets go only part of the way towards solving the problem. We tackle manipulation, dynamic tasks, and locomotion, and demonstrate our approaches in physical scenarios to ensure rigorous validation.

Algorithms and Benchmarks

Algorithmic insights are essential to learning from limited physical interactions, and reducing the dimensionality of reinforcement learning (RL) problems is often crucial. We have shown that a stable policy gradient subspace, encompassing only a fraction of the original dimensions, can be identified. This insight was already well established in deep supervised learning, and we have now shown that it also holds for RL []. Another important aspect of efficient learning is the ability to leverage preexisting data sets. In [], we described the first real-robot benchmark for this setting and highlighted important differences between simulated and real data by testing various offline RL approaches on our "cluster" of TriFinger robots, which we developed and built over the past several years.
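To make the idea of a low-dimensional gradient subspace concrete, the following is a minimal sketch and not the procedure from the cited work. It assumes gradient estimates are available as rows of a matrix, uses synthetic gradients in place of real policy gradients, and the names `gradient_subspace` and `captured_fraction` are hypothetical. It extracts the dominant directions with a singular value decomposition and checks how much of a fresh gradient falls inside them.

```python
import numpy as np

def gradient_subspace(grads, energy=0.99):
    """Orthonormal basis (columns) for the top singular directions of sampled gradients."""
    # grads has shape (num_samples, num_params); each row is one gradient estimate.
    _, s, vt = np.linalg.svd(grads, full_matrices=False)
    explained = np.cumsum(s**2) / np.sum(s**2)
    # Smallest number of directions whose cumulative energy reaches the threshold.
    k = int(np.searchsorted(explained, energy)) + 1
    return vt[:k].T

def captured_fraction(grad, basis):
    """Fraction of the squared norm of `grad` that lies inside the subspace."""
    coords = basis.T @ grad
    return float(coords @ coords / (grad @ grad))

# Synthetic stand-in for policy gradients (an assumption for illustration):
# gradients live in a 5-dimensional subspace of a 200-parameter model, plus small noise.
rng = np.random.default_rng(0)
num_params, true_dim, num_samples = 200, 5, 64
directions, _ = np.linalg.qr(rng.standard_normal((num_params, true_dim)))
grads = rng.standard_normal((num_samples, true_dim)) @ directions.T
grads += 0.01 * rng.standard_normal((num_samples, num_params))

basis = gradient_subspace(grads)
new_grad = directions @ rng.standard_normal(true_dim)
print(basis.shape[1], "of", num_params, "dimensions kept")
print("fraction of a fresh gradient captured:", round(captured_fraction(new_grad, basis), 3))
```

In an actual RL setting, the rows of `grads` would be policy gradient estimates collected over training, and whether the subspace stays stable over time is an empirical question that this toy example does not address.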

Towards "Big Data" Robotics

To harness the predictive capabilities of diffusion-based methods for robotics, the generation of extensive data sets is indispensable. For the unresolved problem of robotic piano playing, we introduced an automatic fingering generation technique based on optimal transport []. Previously, fingerings had to be provided by humans or tediously distilled from internet video data. RL training for piano playing has now become substantially more efficient, allowing us to generate an unprecedented number of optimal piano-playing trajectories from the wealth of sheet music available online. This dataset enabled imitation learning based on diffusion models to reach a new state of the art in bi-manual robotic piano playing. Similarly, for the long-standing challenge of robot locomotion in highly constrained environments, we proposed combining efficient search via Monte Carlo tree search (MCTS) with imitation learning using diffusion policies [].
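As a rough illustration of how a fingering can be posed as a transport-style problem, the sketch below assigns fingers to the keys of a single chord by minimizing total finger displacement. This is a minimum-cost assignment, a simple discrete special case of optimal transport, and it is not the technique from the cited work. The one-dimensional positions, the cost function, and the name `assign_fingers` are assumptions made for illustration.

```python
from itertools import permutations

def assign_fingers(finger_pos, key_pos):
    """Assign a distinct finger to each key, minimizing total displacement.

    finger_pos and key_pos are positions along the keyboard (same units).
    Returns ({key_index: finger_index}, total_cost). Brute force, so only
    suitable for the handful of fingers on one hand.
    """
    best_cost, best = float("inf"), None
    # Try every ordered choice of len(key_pos) distinct fingers.
    for fingers in permutations(range(len(finger_pos)), len(key_pos)):
        cost = sum(abs(finger_pos[f] - key_pos[k]) for k, f in enumerate(fingers))
        if cost < best_cost:
            best_cost, best = cost, fingers
    return dict(enumerate(best)), best_cost

# Hypothetical example: five fingers resting 2 cm apart, two keys to press.
finger_pos = [0.0, 2.0, 4.0, 6.0, 8.0]
key_pos = [0.5, 6.5]
assignment, cost = assign_fingers(finger_pos, key_pos)
print(assignment, cost)
```

A fingering for a whole piece would additionally have to couple consecutive time steps, for example by starting each assignment from the finger positions left by the previous one; that coupling is omitted here.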

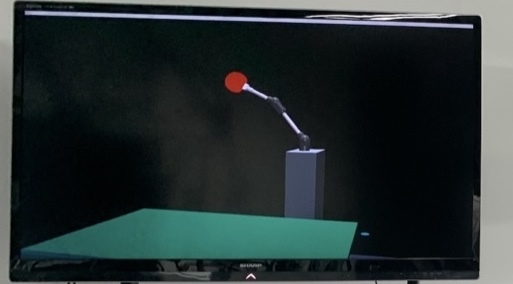

Hybrid Simulation and Real Training

Long training times and the exploration of potentially hazardous, high-speed maneuvers are practical impediments to acquiring robotic skills through learning. By employing our in-house developed robotic arm actuated by pneumatic muscles, and introducing a hybrid simulation and real training technique (HYSR), we successfully learned to return and smash table tennis balls from scratch using model-free RL []. HYSR facilitates training without resetting the real environment and incorporates recorded data that is replayed virtually in tandem with concurrent real robot motions. In this manner, we were able to learn robot table tennis while avoiding the practical challenge of handling physical balls during training. Recently, we demonstrated the efficiency of this approach even in sparse reward conditions, achieved by pairing multiple virtual entities with single real actions []. We also developed a largely 3D-printable version of our muscular robot [], enabling us to surpass the world record for the fastest table tennis ball returned by a robotic system (20 m/s).
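The hybrid training loop described above can be sketched in a few lines. This is a toy illustration under strong assumptions, not the HYSR implementation: `RealRobotStub` stands in for the physical arm, the recorded ball trajectory is a hand-written list of 2D points, and the reward (negative minimum distance between racket and virtual ball) is a hypothetical choice.

```python
import math

class RealRobotStub:
    """Stand-in for the physical arm: moves the racket part of the way to a target."""

    def __init__(self):
        self.racket = (0.0, 0.0)

    def step(self, target, gain=0.5):
        x, y = self.racket
        self.racket = (x + gain * (target[0] - x), y + gain * (target[1] - y))
        return self.racket

def hybrid_episode(robot, recorded_ball, policy):
    """Replay a recorded ball trajectory virtually while the robot moves for real.

    The robot is not reset; it continues from wherever the last episode left it.
    Returns the negative minimum racket-to-ball distance as the reward.
    """
    min_dist = math.inf
    for ball in recorded_ball:
        racket = robot.step(policy(ball, robot.racket))
        min_dist = min(min_dist, math.dist(racket, ball))
    return -min_dist

# Hypothetical recorded trajectory: a ball approaching at constant height.
recorded_ball = [(1.0 - 0.1 * t, 0.5) for t in range(10)]

def track(ball, racket):
    return ball      # command the racket towards the virtual ball

def stay(ball, racket):
    return racket    # command no motion

reward_track = hybrid_episode(RealRobotStub(), recorded_ball, track)
reward_stay = hybrid_episode(RealRobotStub(), recorded_ball, stay)
print(reward_track, reward_stay)
```

Because the ball exists only in the replayed data, the same recorded trajectory can be paired with any number of real robot motions, and no physical ball has to be launched or collected during training.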