Efficient volumetric inference with OctNet

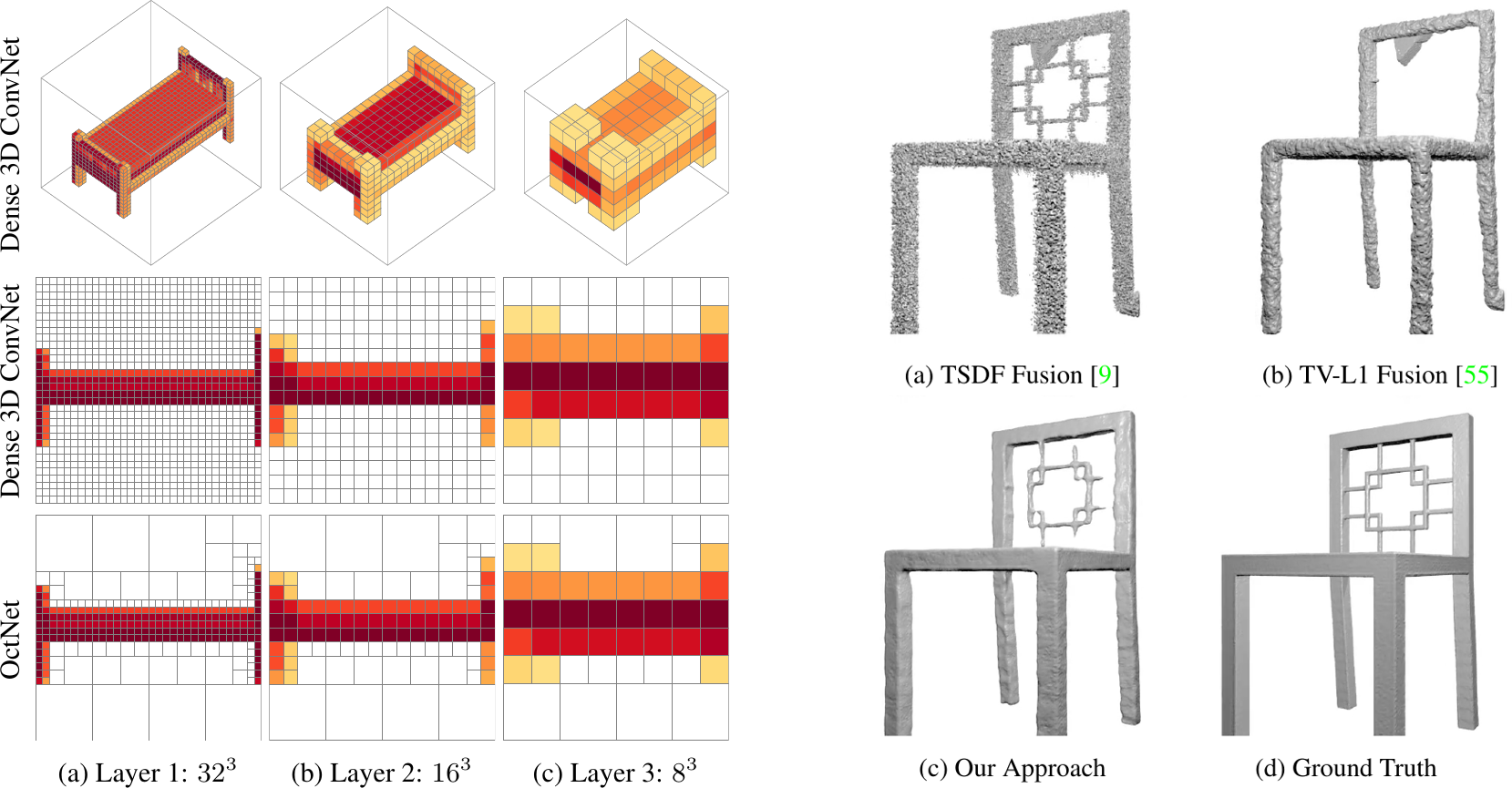

3D deep learning techniques are notoriously memory-hungry because their input and output spaces are high-dimensional: the memory needed for a dense voxel grid grows cubically with its resolution. For most applications, however, not all regions of space are equally informative or important. To let deep learning techniques scale to spatial resolutions of 256³ and beyond, we have developed the OctNet framework.
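To make the memory argument concrete, the following back-of-the-envelope sketch computes the size of one dense feature volume at several resolutions. The function name `dense_grid_bytes`, the single channel, and the 4-byte (float32) values are illustrative assumptions, not figures from the OctNet work.

```python
def dense_grid_bytes(resolution, channels=1, bytes_per_value=4):
    """Bytes needed for one dense volume of shape (channels, R, R, R).

    Assumption for illustration: values are stored as float32 (4 bytes).
    """
    return channels * resolution ** 3 * bytes_per_value


if __name__ == "__main__":
    for r in (64, 128, 256):
        mib = dense_grid_bytes(r) / 2 ** 20
        print(f"{r}^3 voxels, 1 channel, float32: {mib:.0f} MiB")
    # prints 1 MiB, 8 MiB and 64 MiB respectively
```

Doubling the resolution multiplies the footprint by eight, and a convolutional network keeps many such volumes (one per channel and layer) in memory at once, which is why dense grids become impractical at high resolution.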

In contrast to existing models, our representation enables 3D convolutional networks that are both deep and high-resolution. The data-adaptive representation, based on unbalanced octrees, lets us focus memory allocation and computation on the relevant dense regions.
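The idea of an unbalanced octree can be sketched in a few lines: a cubic block is split into eight children only where its contents are not uniform, so empty space collapses into a handful of large cells. This is a generic, minimal illustration and not the OctNet data structure itself; the helper names (`build_octree`, `count_leaves`, `make_toy_grid`) and the toy scene are hypothetical.

```python
def build_octree(grid, x, y, z, size):
    """Recursively subdivide a cubic block until it is uniform.

    Returns the block's value (0 or 1) for a leaf, or a list of 8 children.
    """
    first = grid[x][y][z]
    uniform = all(
        grid[i][j][k] == first
        for i in range(x, x + size)
        for j in range(y, y + size)
        for k in range(z, z + size)
    )
    if uniform or size == 1:
        return first
    half = size // 2
    return [
        build_octree(grid, x + dx, y + dy, z + dz, half)
        for dx in (0, half)
        for dy in (0, half)
        for dz in (0, half)
    ]


def count_leaves(node):
    """Number of leaf cells stored by the tree."""
    if isinstance(node, list):
        return sum(count_leaves(child) for child in node)
    return 1


def make_toy_grid(n):
    """Hypothetical toy scene: one occupied 2x2x2 block in a corner of an n^3 grid."""
    grid = [[[0] * n for _ in range(n)] for _ in range(n)]
    for i in range(2):
        for j in range(2):
            for k in range(2):
                grid[i][j][k] = 1
    return grid


if __name__ == "__main__":
    n = 16  # must be a power of two
    tree = build_octree(make_toy_grid(n), 0, 0, 0, n)
    print(f"dense voxels: {n ** 3}, octree leaves: {count_leaves(tree)}")
    # prints: dense voxels: 4096, octree leaves: 22
```

The tree is deep only around the occupied corner and shallow everywhere else, which is the sense in which memory and computation concentrate on the relevant regions.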

With OctNetFusion, we present a learning-based approach to depth fusion, i.e., dense 3D reconstruction from multiple depth images. Its novel 3D CNN architecture learns to predict an implicit surface representation from the input depth maps and can additionally infer the structure of the octrees representing the objects at inference time.
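For readers unfamiliar with implicit surface representations, the sketch below shows the classical, non-learned way of fusing depth measurements: each measurement is converted to a truncated signed distance per voxel, the values are averaged, and the surface is read off at the zero crossing. This is not the learned OctNetFusion method; it is a deliberately simplified one-dimensional example that assumes all views look along the same ray, and all names and numbers are hypothetical.

```python
def tsdf_along_ray(depth, voxel_depths, trunc):
    """Truncated signed distance of each voxel centre to the observed surface.

    Positive in front of the surface, negative behind it, clipped to [-1, 1].
    """
    return [max(-1.0, min(1.0, (depth - z) / trunc)) for z in voxel_depths]


def fuse(depth_measurements, voxel_depths, trunc):
    """Equal-weight running mean of the per-measurement TSDF values."""
    fused = [0.0] * len(voxel_depths)
    for n, depth in enumerate(depth_measurements, start=1):
        tsdf = tsdf_along_ray(depth, voxel_depths, trunc)
        fused = [f + (t - f) / n for f, t in zip(fused, tsdf)]
    return fused


def zero_crossing(values, voxel_depths):
    """Depth of the first positive-to-negative sign change, linearly interpolated."""
    for i in range(len(values) - 1):
        a, b = values[i], values[i + 1]
        if a > 0 >= b:
            t = a / (a - b)
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None


if __name__ == "__main__":
    voxel_depths = [0.5, 1.0, 1.5, 2.0, 2.5]  # voxel centres along the ray
    measurements = [1.2, 1.3, 1.1]            # noisy depth readings (made up)
    fused = fuse(measurements, voxel_depths, trunc=0.5)
    print("fused TSDF:", [round(v, 3) for v in fused])
    print("estimated surface depth:", round(zero_crossing(fused, voxel_depths), 3))
```

The surface is never stored explicitly; it is implied by where the fused values change sign, which is the kind of representation a learned fusion network can predict directly.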
