Human Pose, Shape and Action

3D Pose from Images

2D Pose from Images

Beyond Motion Capture

Action and Behavior

Body Perception

Body Applications

Pose and Motion Priors

Clothing Models (2011-2015)

Reflectance Filtering

Learning on Manifolds

Markerless Animal Motion Capture

Multi-Camera Capture

2D Pose from Optical Flow

Body Perception

Neural Prosthetics and Decoding

Part-based Body Models

Intrinsic Depth

Lie Bodies

Layers, Time and Segmentation

Understanding Action Recognition (JHMDB)

Intrinsic Video

Intrinsic Images

Action Recognition with Tracking

Neural Control of Grasping

Flowing Puppets

Faces

Deformable Structures

Model-based Anthropometry

Modeling 3D Human Breathing

Optical flow in the LGN

FlowCap

Smooth Loops from Unconstrained Video

PCA Flow

Efficient and Scalable Inference

Motion Blur in Layers

Facade Segmentation

Smooth Metric Learning

Robust PCA

3D Recognition

Object Detection

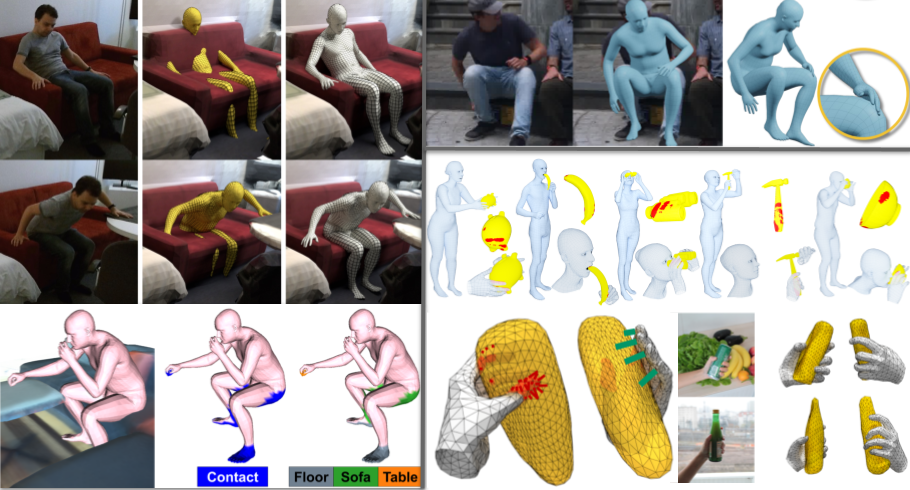

Capturing Contact

Understanding and modeling human behavior requires capturing humans moving in, and interacting with, the world. Standard 3D human body pose and shape (HPS) methods estimate bodies in isolation from the objects and people around them. The results are often physically implausible and lack key information. We view contact as central to understanding behavior and therefore essential in human motion capture. Our goal is to capture people in the context of the world where contact is as important as pose.

To study this, we captured the PROX dataset [] using 3D scene scans and an RGB-D sensor to obtain pseudo ground truth poses with physically meaningful contact. Knowing the 3D scene enables more accurate HPS estimation from monocular RGB images by exploiting contact and interpenetration contraints.

Using the body-scene contact data from PROX, POSA [] learns a generative model of contact for the vertices of a posed body. We use this body-centric prior in monocular pose estimation to encourage the estimated body to have physically and semantically meaningful scene contacts.

TUCH [] explores HPS estimation with self-contact. We create novel datasets of images with known 3D contact poses or contact labels. Using these, and a contact-aware version of SMPLify-X [

], we train a regression network using a modified version of SPIN [

]. Not only is TUCH more accurate for images with self-contact, but also for non-contact poses.

To learn to regress 3D hands and objects from an image, we created the ObMan dataset by extending a robotic grasp simulator to MANO [] and rendering images of hands grasping many objects. We trained a network to regress both object and hand shape, while encouraging contact and avoiding interpenetration.

To address detailed whole-body contact during object manipulation we used mocap to create the GRAB dataset []. GRAB goes beyond previous hand-centric datasets to capture actions like drinking where contact occurs between a cup and fingers as well as the lips.

Members

Publications