Robot Perception Group

Robot Perception Group GitHub Organization Page

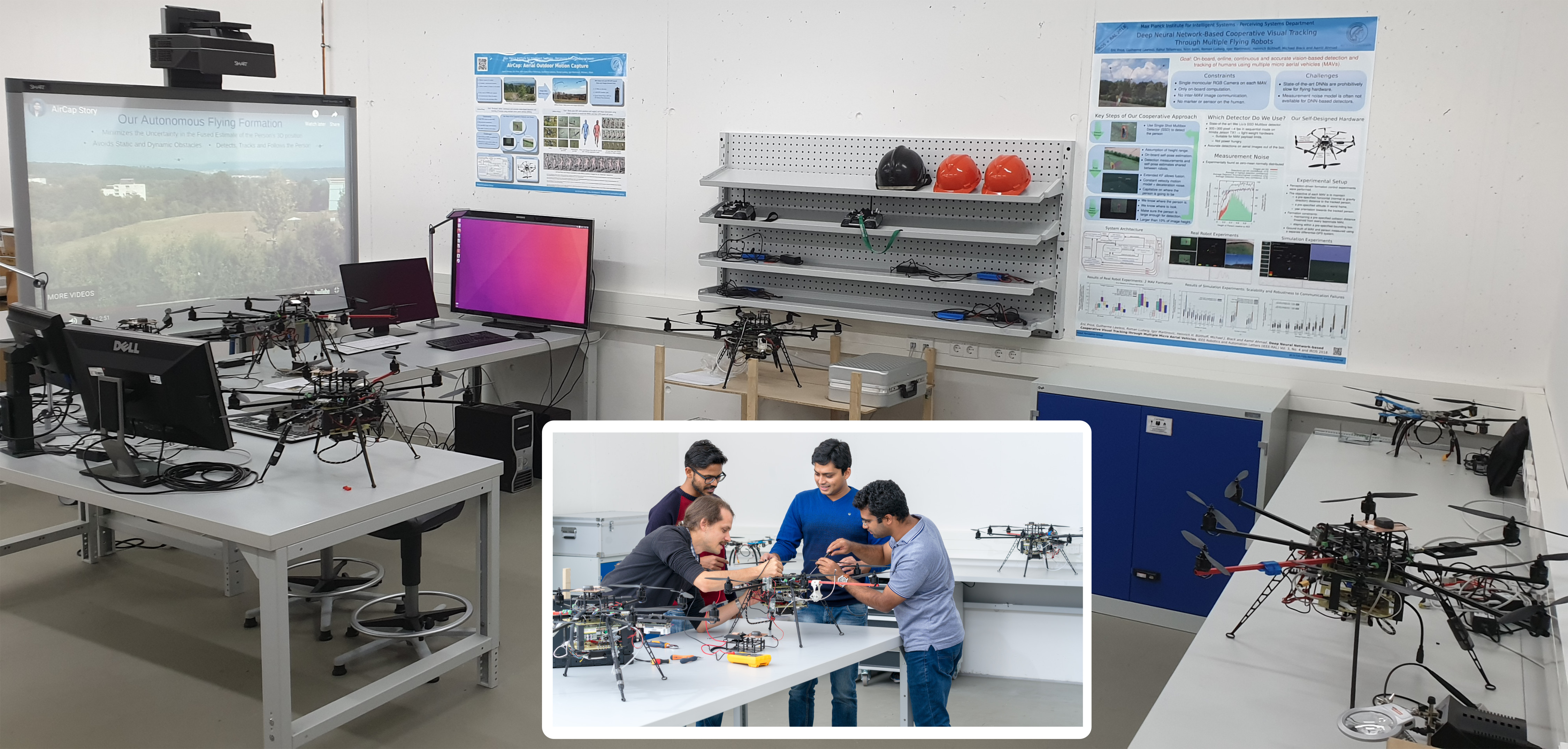

Our focus is on vision-based perception in multi-robot systems. Our goal is to understand how teams of robots, especially flying robots, can act (navigate, cooperate and communicate) in optimal ways using only raw sensor inputs, e.g., RGB images and IMU measurements. Thus, we are studying novel methods for active perception, sensor fusion and state estimation in multi-robot systems. To facilitate our research we also design and develop novel robot platforms.

Multi-robot Active Perception -- We investigate methods for multi-robot formation control based on cooperative target perception without relying on a pre-specified formation geometry. We have developed methods for teams of mobile aerial and ground robots, equipped with only RGB cameras, to maximize their joint perception of moving persons or objects in 3D space by actively steering formations that facilitate this joint perception. We have introduced and rigorously tested active perception methods using novel detection and tracking pipelines and a nonlinear model predictive control (MPC) based formation controller, which together form the front-end of our autonomous aerial motion capture (AirCap) system (AirCap Front-End).

Multi-view pose and shape estimation for Motion Capture (MoCap) -- For human MoCap, we develop methods to estimate 3D pose and shape using images from multiple, approximately calibrated cameras. Such image datasets are obtained using our AirCap system's front-end running an active perception method. We treat 2D joint detections as noisy sensor measurements and jointly optimize for human pose, shape and the extrinsics of the cameras (AirCap Back-End).
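To illustrate what treating 2D joint detections as noisy measurements can look like, here is a minimal sketch of a confidence-weighted reprojection cost for a single camera. It is not the AirCap back-end: the pinhole model, the function names `project` and `reprojection_cost`, and all numeric values are assumptions made for this example. A joint optimization would minimize the sum of such costs over all cameras with respect to the body parameters and each camera's rotation and translation.

```python
def project(point, rotation, translation, focal, center):
    """Pinhole projection of a 3D world point into pixel coordinates."""
    # Camera-frame coordinates: X_c = R * X_w + t (extrinsics R, t).
    cam = [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    if cam[2] <= 0:
        raise ValueError("point is behind the camera")
    return (focal * cam[0] / cam[2] + center[0],
            focal * cam[1] / cam[2] + center[1])


def reprojection_cost(joints_3d, detections, confidences,
                      rotation, translation, focal, center):
    """Sum of confidence-weighted squared pixel errors for one camera."""
    cost = 0.0
    for joint, det, conf in zip(joints_3d, detections, confidences):
        u, v = project(joint, rotation, translation, focal, center)
        cost += conf * ((u - det[0]) ** 2 + (v - det[1]) ** 2)
    return cost


# Hypothetical values: identity extrinsics, two joints 5 m in front of the camera.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
joints = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0)]
detections = [(320.0, 240.0), (423.0, 244.0)]  # second detection is off by (3, 4) px
confidences = [1.0, 0.5]                        # detector confidence per joint

cost = reprojection_cost(joints, detections, confidences,
                         identity, [0.0, 0.0, 0.0], 500.0, (320.0, 240.0))
```

Weighting each residual by the detector's confidence lets unreliable detections contribute less to the estimate, which is one common way to account for measurement noise in such a cost.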

Multi-robot Sensor Fusion -- We study and develop unified methods for sensor fusion that scale not only to large environments but also to large numbers of sensors and teams of robots. We have developed several methods for unified and integrated multi-robot cooperative localization and target tracking. Here "unified" means that every robot estimates the poses of all robots and targets, and "integrated" means that disagreement among sensors, inconsistent sensor measurements, occlusions and sensor failures are handled within a single Bayesian framework. The methods are either filter-based (filtering) or pose-graph optimization-based (smoothing). While each category has its own advantages with respect to the available computational resources and the level of estimation accuracy, we have also developed a novel moving-horizon technique for a hybrid method that combines the advantages of both kinds of approaches.
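As a toy illustration of Bayesian fusion across robots, the sketch below combines several independent Gaussian estimates of a target position by inverse-variance weighting and simply skips a robot whose sensor has failed. This is not the group's method: the function name `fuse_gaussian_estimates`, the diagonal-covariance assumption and the example numbers are all hypothetical, and the sketch ignores the cross-correlations between robots that real cooperative localization has to handle.

```python
from typing import Optional, Sequence, Tuple

# (mean per axis, variance per axis); diagonal covariance is an assumption.
Estimate = Tuple[Sequence[float], Sequence[float]]


def fuse_gaussian_estimates(estimates: Sequence[Optional[Estimate]]) -> Estimate:
    """Fuse independent Gaussian estimates; None marks a failed sensor."""
    valid = [e for e in estimates if e is not None]
    if not valid:
        raise ValueError("no valid estimates to fuse")
    dim = len(valid[0][0])
    info = [0.0] * dim       # summed inverse variances (information)
    info_mean = [0.0] * dim  # summed inverse-variance-weighted means
    for mean, var in valid:
        for k in range(dim):
            info[k] += 1.0 / var[k]
            info_mean[k] += mean[k] / var[k]
    fused_var = [1.0 / i for i in info]
    fused_mean = [m * v for m, v in zip(info_mean, fused_var)]
    return fused_mean, fused_var


# Hypothetical target estimates in metres from three robots.
robot_a = ([2.0, 1.0, 0.0], [0.04, 0.04, 1.0])  # uncertain along z
robot_b = None                                   # occluded or failed sensor
robot_c = ([2.2, 1.0, 0.2], [1.0, 0.04, 0.04])  # uncertain along x

fused_mean, fused_var = fuse_gaussian_estimates([robot_a, robot_b, robot_c])
```

Under these assumptions the fused variance on every axis is smaller than that of any single robot, and each axis is dominated by whichever robot observes it most precisely.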

New Robot Platforms -- To have extensive access to the hardware, we design and build most of our robotic platforms ourselves. Currently, our main flying platforms are 8-rotor octocopters. More details can be found at https://ps.is.tue.mpg.de/pages/outdoor-aerial-motion-capture-system.

There are currently two ongoing AirCap projects in our group: 3D Motion Capture and Perception-Based Control.