Probabilistic Algorithms

Probabilistic inference is often thought of as elaborate and intricate – or to put it less charitably: cumbersome and expensive. Work performed in the reporting period shows that probabilistic concepts can, instead, be a driver of computational efficiency: Used to quantify sources of error in composite computations, they can guide the automated design of algorithms, yielding robust outputs and predictions.

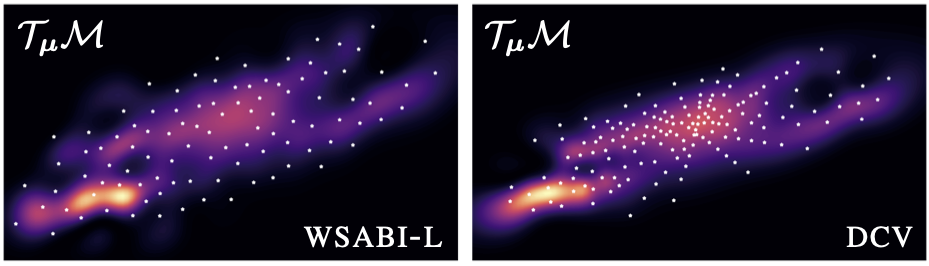

Controlling computations and their precision. When computations are expensive, it pays to make each one as informative as possible. Riemannian statistics is an example of the chained computations increasingly common in machine learning: tasks that are simple in Euclidean space, like Gaussian integrals, become challenging on manifolds, where every evaluation of the integrand involves a costly simulation to compute geodesics. An ICML paper [] showed that adaptive Bayesian quadrature, which casts integration as inference from observations of the integrand, can select informative evaluation points and thereby offer significant gains in wall-clock time over traditional Monte Carlo workhorses.
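To make the idea of integration as inference concrete, here is a minimal sketch of plain (non-adaptive) Bayesian quadrature in one dimension. It is an illustration under stated assumptions, not the method of the cited paper: the integrand gets a zero-mean Gaussian process prior with an RBF kernel, the domain is a Euclidean interval rather than a manifold, the nodes are fixed in advance, and all function names and the length scale `ell` are hypothetical choices made for this example.

```python
import math

def rbf(x, y, ell):
    """RBF kernel with unit amplitude and length scale ell (assumed prior)."""
    return math.exp(-0.5 * ((x - y) / ell) ** 2)

def kernel_mean(x, a, b, ell):
    """Closed-form integral of rbf(t, x) over t in [a, b]."""
    c = ell * math.sqrt(math.pi / 2.0)
    s = math.sqrt(2.0) * ell
    return c * (math.erf((b - x) / s) - math.erf((a - x) / s))

def kernel_double_integral(a, b, ell):
    """Closed-form integral of rbf(t, u) over [a, b] x [a, b] (prior variance)."""
    d = b - a
    c = ell * math.sqrt(math.pi / 2.0)
    return 2.0 * (d * c * math.erf(d / (math.sqrt(2.0) * ell))
                  - ell ** 2 * (1.0 - math.exp(-0.5 * (d / ell) ** 2)))

def cholesky_solve(A, b):
    """Solve A x = b for a symmetric positive definite matrix A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    y = [0.0] * n
    for i in range(n):  # forward substitution: L y = b
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution: L^T x = y
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def bayesian_quadrature(f, nodes, a, b, ell=0.2, jitter=1e-8):
    """Posterior mean and variance of the integral of f over [a, b]."""
    ys = [f(x) for x in nodes]  # the (expensive) integrand evaluations
    K = [[rbf(xi, xj, ell) + (jitter if i == j else 0.0)
          for j, xj in enumerate(nodes)] for i, xi in enumerate(nodes)]
    z = [kernel_mean(x, a, b, ell) for x in nodes]
    w = cholesky_solve(K, z)  # quadrature weights K^{-1} z
    mean = sum(wi * yi for wi, yi in zip(w, ys))
    var = kernel_double_integral(a, b, ell) - sum(wi * zi for wi, zi in zip(w, z))
    return mean, max(0.0, var)  # clamp tiny negative round-off

if __name__ == "__main__":
    nodes = [i / 7 for i in range(8)]
    mean, var = bayesian_quadrature(lambda x: math.sin(3.0 * x), nodes, 0.0, 1.0)
    print("estimate:", mean, "posterior variance:", var)
    print("exact:   ", (1.0 - math.cos(3.0)) / 3.0)
```

The posterior variance is what makes the computation controllable: it quantifies how much the integral is still uncertain after a given set of evaluations. An adaptive scheme, as described above, would use this kind of uncertainty together with the observed values to decide where to evaluate next; that selection step is omitted here.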

Fast post-hoc uncertainty in deep learning. Another recent line of work addresses the most popular application of uncertainty quantification: deep neural networks. Constructing approximate posteriors for deep learning is a long-standing problem. Most contemporary Bayesian deep learning methods aim for carefully crafted, high-quality output. As a result, they tend to be expensive and difficult to use. The classic Laplace approximation offers a more sober approach. It is essentially a second-order Taylor expansion of the empirical risk at the network's point estimate. While this puts some limits on fidelity, it has practical advantages: it leaves the point estimate in place, which practitioners spend considerable time tuning, and it can be constructed post-hoc, even for networks pre-trained by others. In a collaboration with partners in Tübingen, at ETH, in Cambridge and at DeepMind, we developed the Laplace Library as a practical tool that gives practitioners easy and computationally cheap access to uncertainty on a comprehensive class of deep neural architectures []. The library has already found numerous users, which points to a pressing need for uncertainty quantification in deep learning.
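The mechanics of a Laplace approximation can be sketched on a toy problem. The example below is an assumption-laden illustration and does not use the Laplace Library's API: the "network" is a single-weight logistic regression with a Gaussian prior, the point estimate is found by Newton's method, and the function names, data, and prior precision are hypothetical. The predictive uses the standard probit approximation to the logistic-Gaussian integral.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def grad_and_hess(w, xs, ys, prior_prec):
    """Gradient and Hessian of the regularized loss (negative log posterior)."""
    g = prior_prec * w
    h = prior_prec
    for x, y in zip(xs, ys):
        p = sigmoid(w * x)
        g += (p - y) * x
        h += p * (1.0 - p) * x * x
    return g, h

def laplace_fit(xs, ys, prior_prec=1.0, steps=50):
    """Return the point estimate and the Laplace posterior variance."""
    w = 0.0
    for _ in range(steps):  # Newton iterations towards the point estimate
        g, h = grad_and_hess(w, xs, ys, prior_prec)
        w -= g / h
    # Post-hoc step: the curvature at the point estimate defines the
    # Gaussian posterior N(w, 1 / h); the estimate itself is untouched.
    _, h = grad_and_hess(w, xs, ys, prior_prec)
    return w, 1.0 / h

def predictive(x, w_map, var):
    """Approximate P(y = 1 | x) under the Gaussian posterior (probit trick)."""
    mu = w_map * x
    s2 = var * x * x
    kappa = 1.0 / math.sqrt(1.0 + math.pi * s2 / 8.0)
    return sigmoid(kappa * mu)

if __name__ == "__main__":
    xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
    ys = [0, 0, 1, 0, 1, 1]
    w_map, var = laplace_fit(xs, ys)
    print("point estimate:", w_map, "posterior variance:", var)
    print("plug-in prediction at x=2:", sigmoid(w_map * 2.0))
    print("Laplace prediction at x=2:", predictive(2.0, w_map, var))
```

In this sketch the uncertainty-aware prediction is pulled towards 0.5 relative to the plug-in prediction, which is the typical qualitative effect of accounting for weight uncertainty. For real networks the Hessian is large, so practical tools rely on structured approximations of it; those details are outside the scope of this toy example.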

Finally, we have explored the use of Bayesian inference algorithms in scientific applications, e.g., to provide fast amortized inference of the parameters of gravitational wave models [] and in biomedical applications [].
