Causal Inference

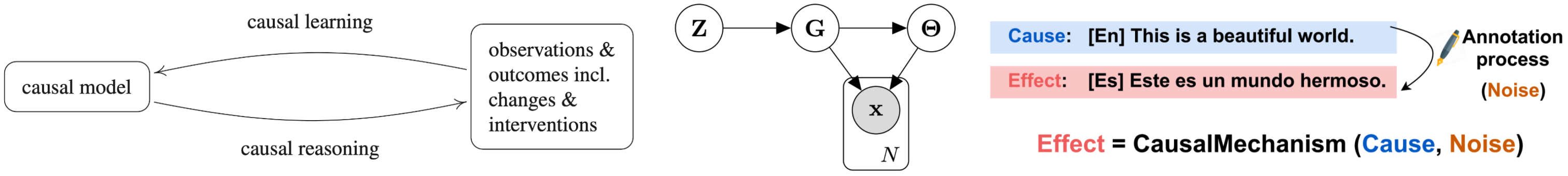

Both causal reasoning and causal learning crucially rely on assumptions about the statistical properties entailed by causal structures. During the past decade, various assumptions have been proposed and assayed that go beyond traditional ones like the causal Markov condition and faithfulness. This has implications for both scenarios: it improves the identifiability of causal structure, and it entails additional statistical predictions when the causal structure is known.

Causal reasoning. Under suitable model assumptions (here: additive independent noise), causal knowledge admits novel noise-removal techniques such as half-sibling regression, published in PNAS and applied to astronomical data from NASA's Kepler space telescope.
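The idea behind half-sibling regression can be illustrated with a minimal sketch. It assumes a target measurement `y = Q + f(N)` and a set of other measurements `X` that are affected by the same systematic noise `N` but are independent of the quantity of interest `Q`; subtracting an estimate of `E[y | X]` from `y` then removes much of the noise while leaving `Q` largely intact. The ridge regressor, the function name `half_sibling_regression`, and the synthetic data below are illustrative assumptions, not the published implementation.

```python
import numpy as np

def half_sibling_regression(y, X, ridge=1e-3):
    """Return centered y minus a ridge-regression estimate of E[y | X].

    Hypothetical helper: a linear ridge model stands in for whatever
    regressor is appropriate for the data at hand.
    """
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    # Closed-form ridge solution: (X^T X + ridge * I)^{-1} X^T y
    w = np.linalg.solve(Xc.T @ Xc + ridge * np.eye(X.shape[1]), Xc.T @ yc)
    return yc - Xc @ w

# Synthetic data (assumed for illustration only).
rng = np.random.default_rng(0)
n = 2000
noise = rng.normal(size=n)               # shared systematic noise N (unobserved)
signal = np.sin(np.linspace(0, 20, n))   # quantity of interest Q, independent of N
y = signal + 2.0 * noise                 # target corrupted by N
# "Half-siblings": other measurements driven by N but not by Q.
X = np.column_stack(
    [a * noise + 0.1 * rng.normal(size=n) for a in (1.0, -0.5, 1.5)]
)

q_hat = half_sibling_regression(y, X)

centered_signal = signal - signal.mean()
err_before = np.mean((y - y.mean() - centered_signal) ** 2)
err_after = np.mean((q_hat - centered_signal) ** 2)
print(f"MSE before: {err_before:.3f}, after: {err_after:.3f}")
```

Because `X` carries no information about `Q` in this setup, regressing `y` on `X` can only explain the noise component, which is why the residual is a sensible estimate of the centered signal.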

Apart from entailing statistical properties for a fixed distribution, causal models also suggest how distributions change across data sets. One may assume, for instance, that structural equations remain constant and only the noise distributions change; that some of the causal conditionals in a causal Bayesian network change while others remain constant; or that they change independently. These assumptions result in new approaches to domain adaptation.
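As a worked illustration of these assumptions, consider the standard causal factorization of a causal Bayesian network, written here with assumed notation ($X_i$ for the variables, $\mathrm{PA}_i$ for the parents of $X_i$, and a superscript $d$ indexing the data set or domain). The second line sketches the case where only the conditionals in a subset $S$ differ between domains:

```latex
\begin{align}
  p(x_1, \dots, x_n) &= \prod_{i=1}^{n} p\bigl(x_i \mid \mathrm{pa}_i\bigr), \\
  p^{(d)}(x_1, \dots, x_n) &= \prod_{i \in S} p^{(d)}\bigl(x_i \mid \mathrm{pa}_i\bigr)
      \prod_{i \notin S} p\bigl(x_i \mid \mathrm{pa}_i\bigr).
\end{align}
```

For a single cause $C$ and effect $E$, for example, $p(c, e) = p(c)\, p(e \mid c)$, and a shift that changes $p(c)$ while leaving the mechanism $p(e \mid c)$ untouched corresponds to $S = \{C\}$.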

Based on the idea of no shared information between causal mechanisms, we developed a new type of conditional semi-supervised learning. More recently, we showed that causal structure can explain a number of published NLP findings, and explored its use in reinforcement learning.

Causal learning/discovery. We have further explored the basic problem of telling cause from effect in bivariate distributions, which we had earlier shown to be insightful also for more general causal inference problems. A long JMLR paper extensively studies the performance of a variety of approaches, suggesting that distinguishing cause and effect is indeed possible above chance level.
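One family of approaches to the bivariate problem relies on additive noise models: fit a regression in each direction and prefer the direction in which the residual looks independent of the input. The sketch below is a simplified illustration under assumptions of our own choosing (polynomial regression, a biased empirical HSIC statistic with a median-heuristic Gaussian kernel, and no significance test); the function names are hypothetical and this is not the code evaluated in the paper.

```python
import numpy as np

def _gram(v):
    """Gaussian Gram matrix with a median-heuristic bandwidth."""
    d2 = (v[:, None] - v[None, :]) ** 2
    sigma2 = np.median(d2[d2 > 0])
    return np.exp(-d2 / sigma2)

def hsic(a, b):
    """Biased empirical HSIC statistic; larger values suggest dependence."""
    n = len(a)
    H = np.eye(n) - np.ones((n, n)) / n   # centering matrix
    return np.trace(_gram(a) @ H @ _gram(b) @ H) / n**2

def residual_dependence(cause, effect, degree=5):
    """Fit effect = f(cause) + residual and score the dependence of residual on cause."""
    coeffs = np.polyfit(cause, effect, degree)
    resid = effect - np.polyval(coeffs, cause)
    return hsic(cause, resid)

def infer_direction(x, y):
    """Prefer the direction whose residual is less dependent on the input."""
    x = (x - x.mean()) / x.std()
    y = (y - y.mean()) / y.std()
    forward = residual_dependence(x, y)
    backward = residual_dependence(y, x)
    return "x->y" if forward < backward else "y->x"

# Synthetic example (assumed): x causes y through a nonlinear function
# with additive noise that is independent of x.
rng = np.random.default_rng(1)
x = rng.uniform(-2, 2, size=500)
y = x**3 + rng.uniform(-1, 1, size=500)
direction = infer_direction(x, y)
print(direction)
```

Comparing the two scores directly keeps the sketch short; a more careful analysis would turn each score into a proper independence test and abstain when neither direction, or both, admits an additive noise model.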

For the multivariate setting, we have developed a fully differentiable Bayesian structure learning approach based on a latent probabilistic graph representation and efficient variational inference. Other new results deal with discovery from heterogeneous or nonstationary data, meta-learning, employing generalized score functions, and learning structural equation models in the presence of selection bias. Further work introduced a kernel-based statistical test for joint independence, a key component of multivariate additive-noise-based causal discovery.

Apart from such 'classical' problems, we have extended the domain of causal inference in new directions, e.g., to assay causal signals in images by inferring whether the presence of an object is the cause or the effect of the presence of another. In a study connecting causal principles and the foundations of physics, we relate asymmetries between cause and effect to those between past and future, deriving a version of the second law of thermodynamics (the thermodynamic 'arrow of time') from the assumption of (algorithmic) independence of causal mechanisms.

Within time series modeling, new causal inference methods reveal aspects of the arrow of time, or allow for principled causal feature selection in the presence of hidden confounding. We have applied some of these methods to analyse climate systems and Covid-19 spread.
