Efficient Large-Area Tactile Sensing for Robot Skin

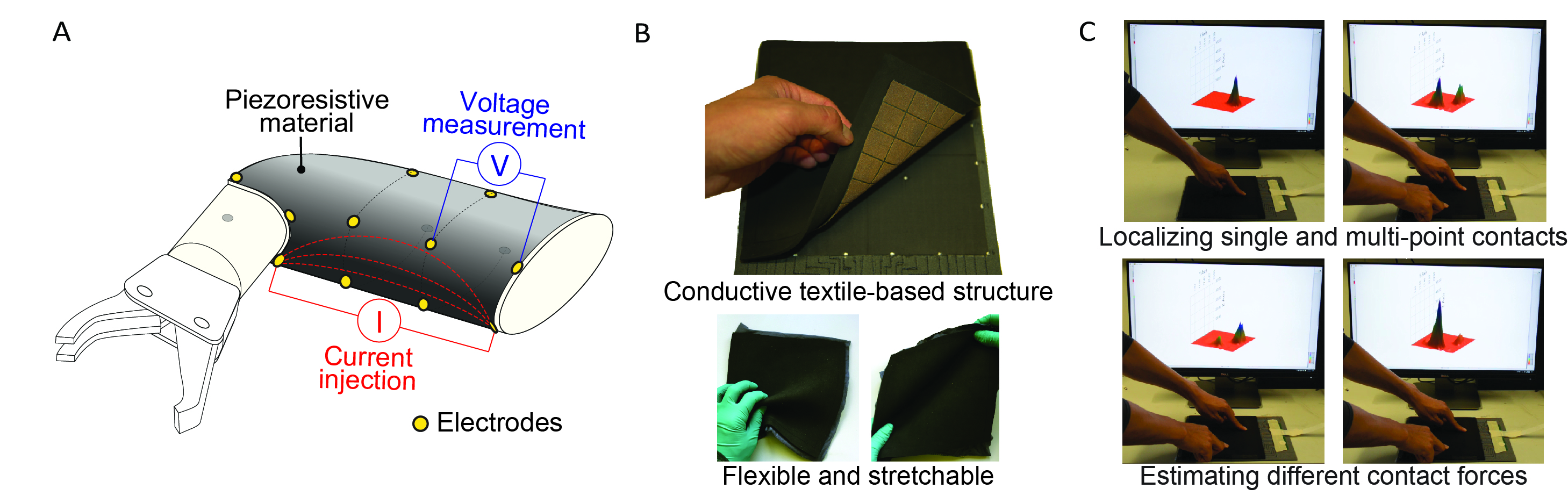

Being able to perceive physical contact is essential for intelligent robots to work well in cluttered everyday environments. Since physical contacts can occur at any location, a successful tactile sensing system should be able to cover all of a robot’s exposed surfaces. Most researchers have pursued the creation of large tactile skin by using many sensing elements that are each responsible for a region; however, deploying many sensing elements is not efficient considering manufacturability, cost-effectiveness, and durability.

This project aims to build a robot skin that combines a sensor design and a computational approach to achieve efficient large-area tactile sensing. The key principle is to use a piezoresistive material with a small number of distributed point electrodes; current is injected between successive pairs of electrodes, and the resulting voltage distribution is measured at the other electrodes. We used this approach with conductive textiles to create several flexible and stretchable tactile sensor prototypes. These prototypes successfully estimate contact location, contact shape, and normal force magnitude over a broad region.
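The injection-and-measurement scheme described above can be illustrated with a minimal sketch. It is not the project's implementation: the function name `injection_schedule`, the electrode count of 8, and the assumption that electrodes are indexed in a ring (so that the last electrode pairs with the first) are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

# One pattern = (source electrode, sink electrode, electrodes where voltage is read).
Pattern = Tuple[int, int, List[int]]


def injection_schedule(n_electrodes: int) -> List[Pattern]:
    """Build one scan of injection patterns over successive electrode pairs.

    Assumption (not from the source): electrodes are indexed 0..n-1 in a ring,
    so the pair after (n-2, n-1) is (n-1, 0).
    """
    if n_electrodes < 3:
        raise ValueError("need at least 3 electrodes to inject and measure")

    schedule: List[Pattern] = []
    for source in range(n_electrodes):
        sink = (source + 1) % n_electrodes  # successive pair, wrapping around
        # Voltages are read at every electrode that is not carrying current.
        measured = [e for e in range(n_electrodes) if e not in (source, sink)]
        schedule.append((source, sink, measured))
    return schedule


# Hypothetical example: a skin patch with 8 point electrodes.
schedule = injection_schedule(8)
n_readings = sum(len(measured) for _, _, measured in schedule)
```

Under these assumptions, one full scan of 8 electrodes uses 8 injection patterns with 6 voltage readings each. The number of readings grows roughly with the square of the electrode count, which is one reason the choice of injection and measurement patterns affects the achievable frame rate.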

This project led to two subprojects that enhance large-area tactile sensing performance. The first subproject optimized current injection and voltage measurement by exploiting the temporal locality of contacts across a large area. It achieved a tactile sensing frame rate above 400 Hz, which is five times faster than the conventional method. The second subproject improved contact estimation using sim-to-real transfer learning. It demonstrated that a multiphysics model of the sensor can substantially improve the estimation of contact information when combined with deep neural networks. In the future, we plan to apply this tactile sensing approach to a real robot surface to demonstrate a whole-body robot skin.
