Modeling Hand Deformations During Contact

Little is known about the shape and properties of the human finger during haptic interaction, because such situations are difficult to instrument. Yet these parameters are essential for designing and controlling wearable haptic finger devices and for delivering realistic tactile feedback with them. For example, there is currently no standard model of the fingertip’s shape and of how it varies across the population, and interaction-dependent deformations have not yet been modeled.

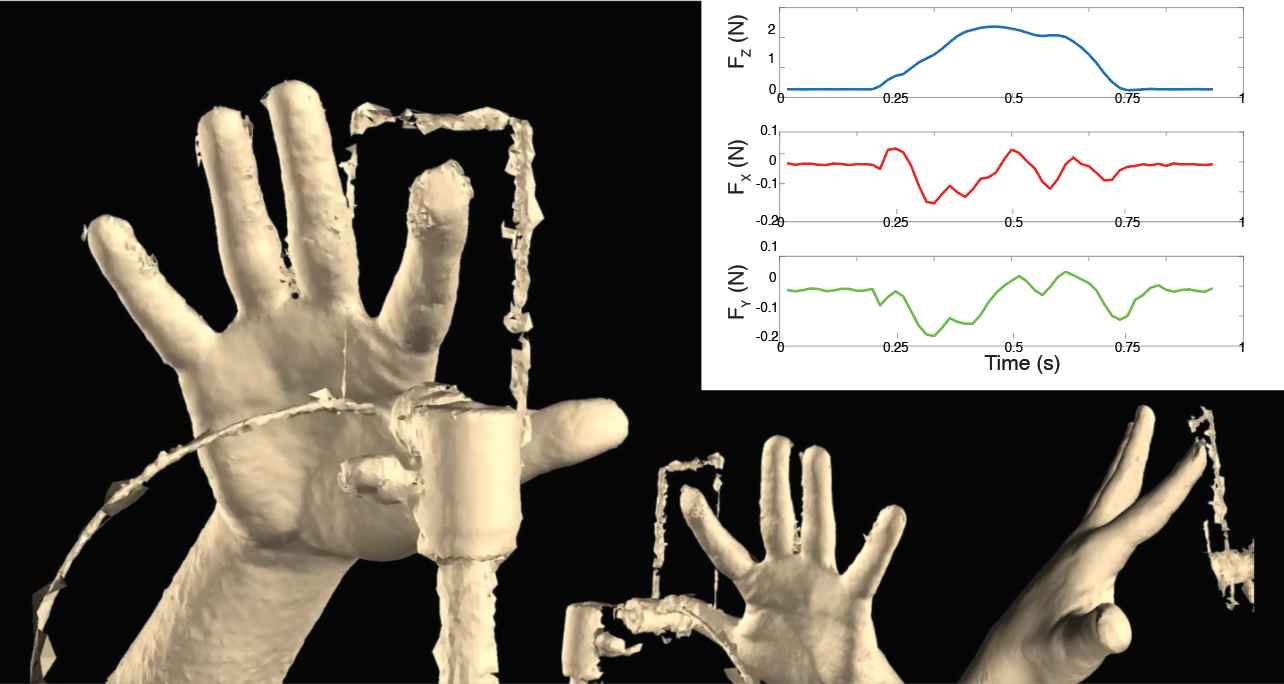

This project explores a framework for four-dimensional scanning (3D shape over time) and modeling of finger-surface interactions. The aim is to capture the motion and deformation of the entire finger at high resolution while simultaneously recording the interfacial forces at the contact. We are currently capturing fingertip deformations during active pressing on a rigid surface. This is a first step toward accurately characterizing the shape and deformations of the physically interacting human hand and fingers [].
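The sketch below illustrates what one sample of such 4D data might contain: each timestamped 3D scan is paired with the contact force measured at the same instant. The page does not describe the actual data format or synchronization scheme. The `ScanFrame` class, the function names, the force units, and the assumption that the force sensor samples faster than the scanner are all hypothetical. Because of that last assumption, force is linearly interpolated to each scan time.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class ScanFrame:
    """One frame of a hypothetical 4D capture: a 3D scan plus its timestamp."""
    t: float                                # capture time in seconds (assumed)
    vertices: list                          # [(x, y, z), ...] of the scanned mesh
    force: tuple = (0.0, 0.0, 0.0)          # contact force, assumed in newtons


def interpolate_force(times, forces, t):
    """Linearly interpolate a 3-axis force signal at time t.

    `times` must be sorted in ascending order; queries outside the
    recorded range are clamped to the first or last sample.
    """
    if t <= times[0]:
        return tuple(forces[0])
    if t >= times[-1]:
        return tuple(forces[-1])
    i = bisect_left(times, t)               # first sample with time >= t
    t0, t1 = times[i - 1], times[i]         # t0 < t <= t1, so t1 > t0
    w = (t - t0) / (t1 - t0)
    return tuple((1 - w) * a + w * b for a, b in zip(forces[i - 1], forces[i]))


def attach_forces(frames, times, forces):
    """Pair every scan frame with the force measured at its capture time."""
    for frame in frames:
        frame.force = interpolate_force(times, forces, frame.t)
    return frames
```

Linear interpolation is a simple choice when the force signal is sampled much faster than the scanner. A real capture pipeline would also have to handle clock offsets between the two devices.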

In the future, we would like to optimize the scanner configuration and capture a large number of finger-surface interactions from a pool of participants that is representative of the general population. We believe these data will enable the creation of a statistical model that simulates the natural behavior of the interacting finger.
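The page does not specify what form the statistical model will take. A common choice for body-shape data is a linear (PCA) shape model. Every scan is first registered to a shared template mesh, and each finger shape is then expressed as a mean shape plus a weighted sum of principal modes of variation. The minimal NumPy sketch below assumes such pre-registered meshes. `build_shape_model` and `synthesize` are hypothetical names, not part of the project's code.

```python
import numpy as np


def build_shape_model(meshes, n_components):
    """Build a linear (PCA) statistical shape model from registered meshes.

    Assumes every mesh is registered to a common template, so vertex i
    corresponds to the same anatomical point in every scan.
    meshes: array-like of shape (n_scans, n_vertices, 3).
    Returns the flattened mean shape, the principal modes (one per row),
    and the variance of the data along each mode.
    """
    meshes = np.asarray(meshes, dtype=float)
    n_scans = meshes.shape[0]
    X = meshes.reshape(n_scans, -1)              # one flattened shape per row
    mean = X.mean(axis=0)
    # The SVD of the centered data yields the principal modes of variation,
    # sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    components = vt[:n_components]               # (n_components, 3 * n_vertices)
    variances = s[:n_components] ** 2 / max(n_scans - 1, 1)
    return mean, components, variances


def synthesize(mean, components, coeffs):
    """Generate a shape (n_vertices, 3) from one coefficient per mode."""
    flat = mean + np.asarray(coeffs, dtype=float) @ components
    return flat.reshape(-1, 3)
```

Under the model's linear, Gaussian assumptions, you can generate plausible new finger shapes by drawing coefficient `i` from a zero-mean Gaussian with variance `variances[i]`.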

An accurate model of how finger properties vary across the human population could make it possible to infer a user’s fingertip properties from scarce data obtained by lower-resolution scanning. It may also help infer the physical properties of the underlying tissue from observed deformations of the surface mesh, as has been shown for body tissues. Further applications of this research include:

- simulating human grasping in virtual worlds in a way that is consistent with how we manipulate real objects;
- estimating the contact forces generated during tactile interaction from the 4D data alone.
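Inferring fingertip shape from scarce data can be made concrete with a linear PCA shape model (mean shape, orthonormal modes, and per-mode variances). Under that assumption, a full fingertip mesh can be estimated from only a few observed vertices by regularized least squares. The solver finds the coefficients that best explain the sparse observations, while a Gaussian prior keeps them close to the population mean. This is a maximum a posteriori estimate under Gaussian assumptions. The function name, the isotropic noise model, and the assumption that observed points are already matched to model vertices are all hypothetical, not the project's method.

```python
import numpy as np


def fit_sparse_observations(mean, components, variances, vertex_ids, observed,
                            noise_var=1e-4):
    """Estimate PCA coefficients and a full mesh from a few observed vertices.

    mean: (3V,) flattened mean shape; components: (K, 3V) orthonormal modes;
    variances: (K,) prior variance of each coefficient.
    vertex_ids: indices of the observed vertices (assumed already matched).
    observed: (M, 3) observed vertex positions.
    noise_var: assumed isotropic measurement-noise variance.
    """
    vertex_ids = np.asarray(vertex_ids)
    # Flattened column indices (x, y, z) of every observed vertex.
    cols = (3 * vertex_ids[:, None] + np.arange(3)).reshape(-1)
    A = components[:, cols].T                    # (3M, K): effect of each mode
    r = np.asarray(observed, dtype=float).reshape(-1) - mean[cols]
    # Normal equations of the Gaussian-prior (Tikhonov) least-squares problem:
    # (A^T A / noise_var + diag(1 / variances)) c = A^T r / noise_var
    lhs = A.T @ A / noise_var + np.diag(1.0 / np.asarray(variances, dtype=float))
    rhs = A.T @ r / noise_var
    coeffs = np.linalg.solve(lhs, rhs)
    full = (mean + coeffs @ components).reshape(-1, 3)
    return coeffs, full
```

The prior term matters most when observations are very sparse or noisy. It pulls poorly constrained modes toward the population mean instead of letting them overfit the few measured points.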
