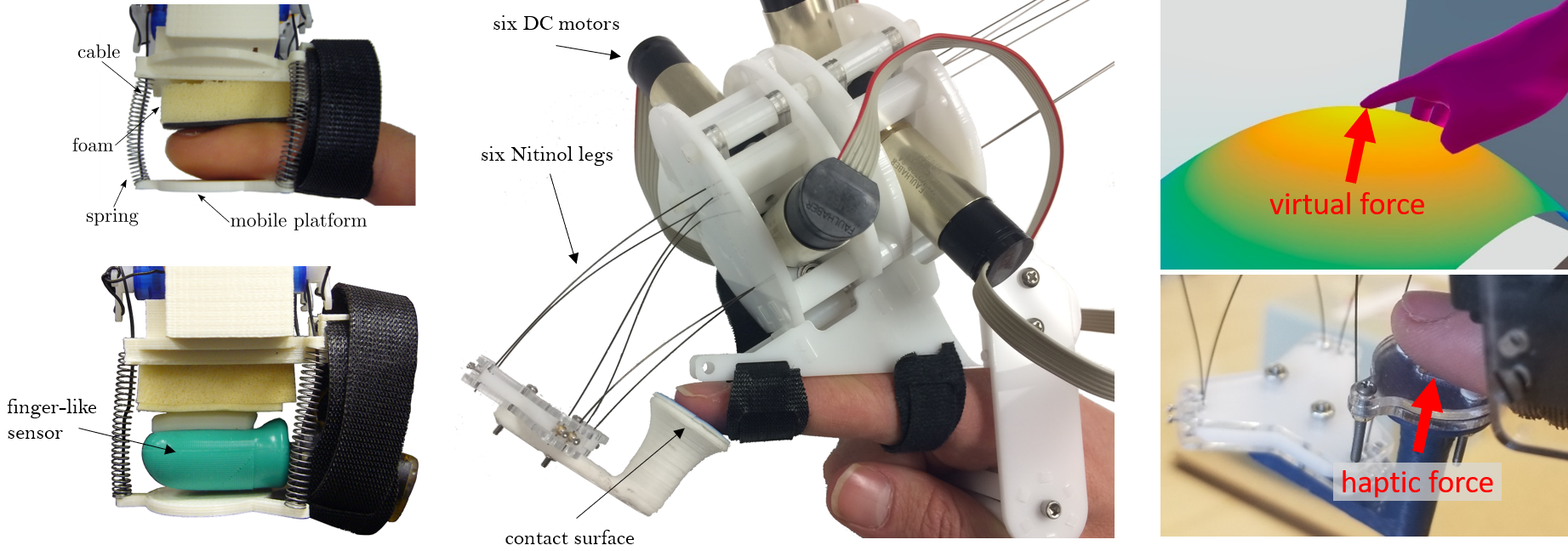

Novel Designs and Rendering Algorithms for Fingertip Haptic Devices

Wearable haptic devices have attracted growing interest in recent years, but providing realistic tactile feedback remains a challenge that is unlikely to be solved soon. Daily interactions with physical objects elicit complex sensations at the fingertips. Furthermore, human fingertips vary widely in physical dimensions and perceptual abilities, which further complicates the task of simulating haptic interactions in a compelling manner. Through this project (see [] for summary), we aim to provide hardware- and software-based solutions for rendering more expressive and personalized tactile cues to the fingertip.

We are the first to explore the idea of rendering 6-DOF tactile fingertip feedback via a wearable 6-DOF device, such that any fingertip interaction with a surface can be simulated. We demonstrated the potential of parallel continuum manipulators to meet the requirements of such a device [], and we presented a motorized version named the Fingertip Puppeteer, or Fuppeteer for short []. We then used this novel hardware to simulate different lower-dimensional devices and evaluate the effect of tactile dimensionality on virtual object interaction []. The results showed that higher-dimensional tactile feedback may indeed allow completion of a wider range of virtual tasks, but that, surprisingly, feedback dimensionality does not greatly affect the exploratory techniques employed by the user.

Wearable haptic interfaces must also be small and lightweight, so it is essential to examine how these requirements can be met. We used principal component analysis to find the minimum number of actuators that an existing device needs in order to render a given tactile sensation with minimal estimated haptic rendering error [].
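To make the role of principal component analysis more concrete, the following is a minimal sketch and not the method used in the cited work. It assumes a hypothetical matrix of recorded actuator commands (one row per time sample, one column per actuator) and asks how many principal components are needed before the relative squared reconstruction error drops below a chosen threshold. The function name `min_components_for_error`, the threshold `max_rel_error`, and the synthetic data are all illustrative assumptions. The result is an effective dimensionality of the command data, which only suggests how many independent actuation channels might suffice.

```python
import numpy as np


def min_components_for_error(commands, max_rel_error=0.05):
    """Smallest number of principal components whose reconstruction keeps
    the relative squared error at or below max_rel_error.

    commands: (n_samples, n_actuators) array of hypothetical actuator commands.
    Returns (k, residual), where residual[i] is the relative squared error
    left after keeping the first i + 1 components.
    """
    X = np.asarray(commands, dtype=float)
    Xc = X - X.mean(axis=0)  # center each actuator channel

    # Squared singular values measure the energy along each principal axis.
    s = np.linalg.svd(Xc, compute_uv=False)
    cumulative = np.cumsum(s ** 2)
    total = cumulative[-1]
    if total == 0.0:
        return 0, np.zeros(0)  # constant commands need no components

    residual = 1.0 - cumulative / total
    # First index at which the residual error meets the threshold.
    k = int(np.argmax(residual <= max_rel_error)) + 1
    return k, residual


# Synthetic example: six actuators driven by only two underlying signals.
t = np.linspace(0.0, 1.0, 200)
latent = np.column_stack([np.sin(2 * np.pi * t), np.cos(6 * np.pi * t)])
mixing = np.array([[1.0, 0.5, 0.0, -1.0, 2.0, 0.3],
                   [0.0, 1.0, -0.5, 0.7, 0.0, 1.0]])
commands = latent @ mixing

k, residual = min_components_for_error(commands, max_rel_error=1e-9)
print(k)
```

In this synthetic case the six command channels are linear mixtures of two signals, so two components reconstruct them almost exactly. With real device data the threshold would have to be tied to a perceptually meaningful rendering error, which this sketch does not attempt to model.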

Finally, we explored the idea of personalizing fingertip tactile feedback for a particular user. We presented two generalizable software-based approaches that modify an existing data-driven haptic rendering algorithm to display tactile cues more accurately to fingertips of different sizes []. Results showed that both personalization approaches significantly reduced force error magnitudes and improved realism ratings.
