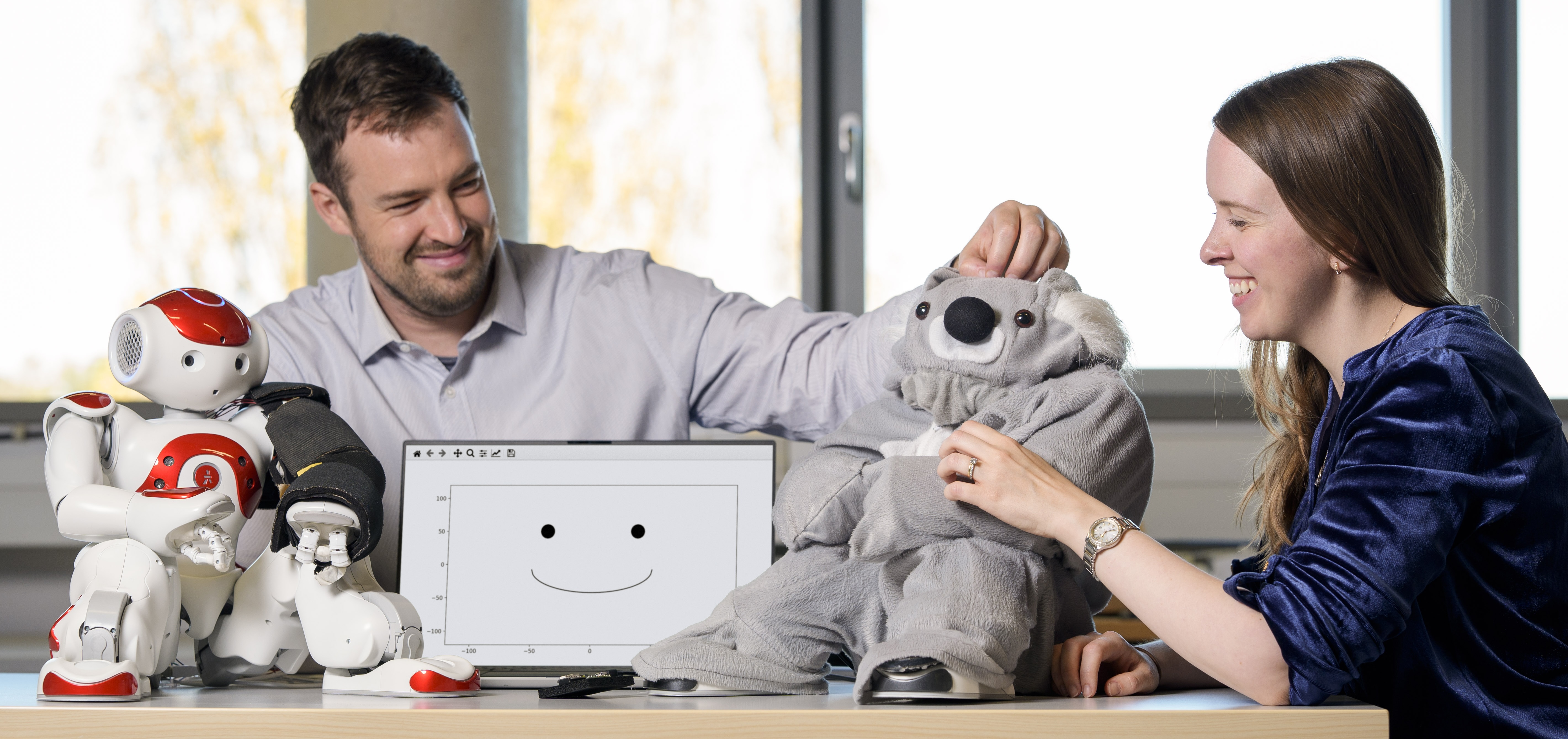

Haptic Empathetic Robot Animal (HERA)

Social touch is a key aspect of our daily interactions with other people. We use social touch to gain attention, communicate needs, and convey emotions. However, children with autism may have difficulty using touch in these ways. Socially assistive robots are generally viewed as a promising therapy tool for children with autism, but existing robots typically lack sufficient touch-perception capabilities. We propose that socially assistive robots could better understand user intentions and needs, and offer more opportunities for support, if they could perceive and intelligently react to touch.

This project entails the design, creation, and testing of a touch-perceptive and emotionally responsive robot, which we refer to as the Haptic Empathetic Robot Animal, or HERA []. HERA is intended to demonstrate new technical capabilities that therapists could use to teach children with autism about safe and appropriate touch. We divided HERA's development into four principal stages: 1) establishing touch-sensing guidelines, 2) building touch-perceiving sensors, 3) creating a long-horizon emotion model that processes and responds to touch interactions, and 4) integrating the subsystems for real-time performance.

Through in-depth interviews with eleven autism specialists [], we established seven key touch-sensing guidelines that a therapy robot should meet. For our initial robot prototype, we enclosed the commercially available humanoid robot NAO inside a koala suit. Based on our guidelines, we then created a tactile perception system composed of fabric-based resistive tactile sensors [] and a two-stage gesture classification algorithm []. This system can identify five social touch gestures at two intensity levels (ten gestures in total, plus no touch) at each of the sixteen tactile sensors covering the robot's whole body.
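The text does not describe what the two classification stages actually do. As a rough illustration only, the sketch below assumes that stage one decides whether a sensor is being touched at all (a peak-pressure threshold) and stage two assigns one of the ten gesture/intensity classes with a nearest-centroid rule over three hand-picked features. The gesture names (`G1` to `G5`), intensity names, features, threshold, and all function names are hypothetical placeholders, not HERA's published design.

```python
import math

# Hypothetical sketch of a two-stage per-sensor touch classifier.
# This is NOT the published HERA algorithm; names, features, and thresholds are placeholders.
NUM_SENSORS = 16                               # sixteen tactile sensors cover the robot
GESTURES = ["G1", "G2", "G3", "G4", "G5"]      # placeholder names for the five gestures
INTENSITIES = ["low", "high"]                  # placeholder names for the two intensity levels
TOUCH_CLASSES = [f"{g}-{i}" for g in GESTURES for i in INTENSITIES]  # 10 touch classes
NO_TOUCH = "no_touch"                          # the eleventh class


def features(window, contact_level=0.05):
    """Summarize one sensor's window of normalized pressure samples (assumed 0..1).

    Assumed features: mean pressure, peak pressure, and fraction of samples in contact.
    """
    mean = sum(window) / len(window)
    peak = max(window)
    contact = sum(1 for x in window if x > contact_level) / len(window)
    return (mean, peak, contact)


def train_centroids(examples):
    """Average the feature vectors of labeled (window, label) examples per class."""
    sums, counts = {}, {}
    for window, label in examples:
        acc = sums.setdefault(label, [0.0, 0.0, 0.0])
        for k, value in enumerate(features(window)):
            acc[k] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def classify(window, centroids, touch_threshold=0.05):
    """Label one sensor window with a touch class or NO_TOUCH."""
    f = features(window)
    # Stage 1: touch detection. A window whose peak stays at or below the threshold is "no touch".
    if f[1] <= touch_threshold:
        return NO_TOUCH
    # Stage 2: assign the nearest class centroid in feature space.
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


def classify_body(windows, centroids):
    """Classify every sensor independently, returning one label per sensor."""
    if len(windows) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} windows, got {len(windows)}")
    return [classify(w, centroids) for w in windows]


# Usage with toy training data for two of the ten classes
centroids = train_centroids([([0.2] * 10, "G1-low"), ([0.8] * 10, "G1-high")])
print(classify([0.75] * 10, centroids))  # -> G1-high
print(classify([0.0] * 10, centroids))   # -> no_touch
```

Calling `classify_body` once per time window yields one of eleven labels for each of the sixteen sensors. The nearest-centroid rule is chosen here only because it is short and transparent; a trained classifier could take its place in stage two without changing the surrounding structure.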

HERA reacts on both short- and long-term time scales using an emotion model that is designed to reinforce appropriate social touch behavior and is based on approach-avoidance theory from psychology []. To make HERA more broadly applicable as an educational tool, several parameters in the emotion model can be adjusted to customize HERA's personality and behavior. HERA's tactile perception and emotion subsystems run and communicate in real time, enabling live interaction with a robot that feels touch and emotion.
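The equations of HERA's emotion model are not given here. The following is a minimal sketch, assuming that each touch label carries a signed approach (+) or avoidance (-) value, that the short-term response is the summed drive of the current touches, and that long-term mood is a leaky integral of that drive. The `Personality` fields stand in for the customizable parameters the text mentions; their names, defaults, and the touch labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Personality:
    """Hypothetical tunable parameters; names and default values are illustrative only."""
    approach_gain: float = 1.0    # scales the effect of touches rated as appropriate
    avoidance_gain: float = 1.5   # scales the effect of touches rated as inappropriate
    mood_decay: float = 0.9       # 0..1; higher values give a longer emotional memory
    mood_weight: float = 0.5      # how much long-term mood colors the immediate reaction
    react_threshold: float = 0.3  # score needed before the robot visibly reacts


class EmotionModel:
    """Toy approach-avoidance model with a short-term drive and a long-term mood."""

    def __init__(self, touch_values, personality=None):
        # touch_values maps a touch label to a signed value:
        # positive = approach (appropriate touch), negative = avoidance (inappropriate touch).
        # Labels missing from the map (e.g. "no_touch") contribute nothing.
        self.values = touch_values
        self.p = personality or Personality()
        self.mood = 0.0  # long-term state, starts neutral

    def _weighted(self, value):
        gain = self.p.approach_gain if value > 0 else self.p.avoidance_gain
        return value * gain

    def step(self, labels):
        """Process one set of per-sensor labels; return (reaction, mood)."""
        # Short term: net approach/avoidance drive summed over all sensors.
        drive = sum(self._weighted(self.values.get(label, 0.0)) for label in labels)
        # Long term: leaky integration, so mood changes gradually and relaxes toward 0 without touch.
        self.mood = self.p.mood_decay * self.mood + (1.0 - self.p.mood_decay) * drive
        score = drive + self.p.mood_weight * self.mood
        if score >= self.p.react_threshold:
            return "approach", self.mood
        if score <= -self.p.react_threshold:
            return "avoid", self.mood
        return "neutral", self.mood


# Usage with placeholder labels such as a touch classifier might emit
values = {"G1-low": 1.0, "G1-high": -1.0}
model = EmotionModel(values)
print(model.step(["G1-low"])[0])   # -> approach
print(model.step(["G1-high"])[0])  # -> avoid
```

Raising `mood_weight` makes history matter more: in this toy model, with `mood_weight=1.0`, a robot that has just received twenty forceful touches responds to a gentle one with a neutral rather than an approach reaction. This illustrates how personality parameters could shape behavior, not which parameters HERA actually exposes.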