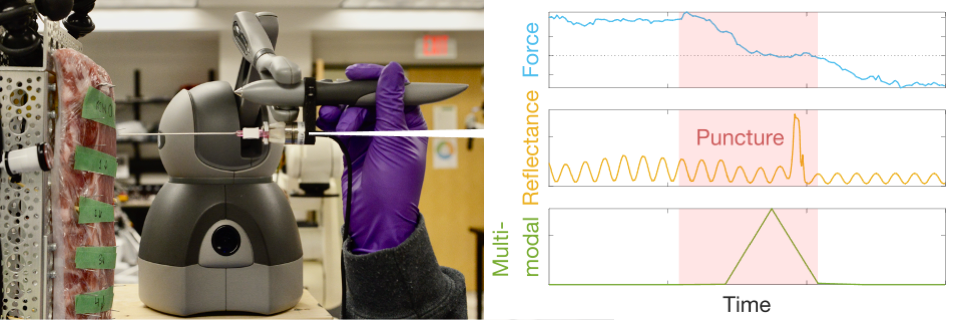

Multimodal Puncture Detection for Urgent Percutaneous Therapies

Illness or injury can cause fluids to accumulate between the chest wall and the lungs. In the absence of drainage, over-pressurization collapses the affected lung and compresses the heart until it can no longer pump blood. This condition is called a tension pneumothorax when the fluid is air, and it is fatal if left untreated. Urgent cases are vented using needle decompression (ND), where operators estimate the correct needle depth by feeling for a loss-of-resistance sensation on the needle. However, failure rates as high as 94.1% have been reported, and complications include the accidental perforation of critical organs and vasculature.

To help medical professionals perform ND, this project seeks to identify reliable needle-puncture features in multimodal data streams measured with an instrumented needle. We cemented optical fibers inside a needle, and we mounted the sensorized needle on a haptic interface via a force/torque sensor. This setup enabled the collection of light reflected from the needle's tip during simulated ND, as well as the simultaneous measurement of needle forces, torques, positions, and orientations. Analyzing these rich data streams provides insight into needle-tissue interactions and underpins an investigation of puncture-detection algorithms.

Prior to this project, there were no publicly available data sets for ND. Given this lack of data and the challenges in collecting large data sets from human operators, we focused on a class of extremely lightweight puncture-detection algorithms that identify events by thresholding sensor data streams. Other researchers have applied these algorithms to force data streams, but we explored applying them to each of our multimodal data streams, both alone and in combinations. We also developed novel puncture-detection algorithms belonging to this class.
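As a rough illustration of what a threshold-based detector of this kind might look like, the Python sketch below flags a puncture when a sudden drop in axial force coincides with a change in reflectance. It is not the project's algorithm: the function names, the trailing-window logic, and every parameter (`window`, `min_force_drop`, `min_reflectance_change`) are assumptions made purely for illustration.

```python
from typing import List, Optional, Sequence


def drop_events(signal: Sequence[float], window: int, min_drop: float) -> List[bool]:
    """Mark samples that lie at least `min_drop` below the recent maximum.

    The recent maximum is taken over the current sample and up to `window`
    preceding samples (a hypothetical stand-in for a loss-of-resistance cue).
    """
    events = []
    for i in range(len(signal)):
        recent = signal[max(0, i - window): i + 1]
        events.append(max(recent) - signal[i] >= min_drop)
    return events


def change_events(signal: Sequence[float], window: int, min_change: float) -> List[bool]:
    """Mark samples that differ by at least `min_change` from the sample
    `window` steps earlier (or from the first sample near the start)."""
    events = []
    for i in range(len(signal)):
        reference = signal[max(0, i - window)]
        events.append(abs(signal[i] - reference) >= min_change)
    return events


def detect_puncture(
    force: Sequence[float],
    reflectance: Sequence[float],
    window: int,
    min_force_drop: float,
    min_reflectance_change: float,
) -> Optional[int]:
    """Return the index of the first sample where both thresholded streams
    agree that an event occurred, or None if they never agree."""
    force_flags = drop_events(force, window, min_force_drop)
    reflectance_flags = change_events(reflectance, window, min_reflectance_change)
    for i, (f, r) in enumerate(zip(force_flags, reflectance_flags)):
        if f and r:
            return i
    return None


if __name__ == "__main__":
    # Made-up toy data, not measurements from the project.
    toy_force = [0.0, 0.5, 1.0, 1.5, 2.0, 0.4, 0.5, 0.6]
    toy_reflectance = [1.0, 1.0, 1.0, 1.0, 1.0, 0.3, 0.3, 0.3]
    print(detect_puncture(toy_force, toy_reflectance, 3, 1.0, 0.5))
```

Requiring agreement between two streams is one simple way to combine modalities: a force drop alone no longer triggers a detection unless the reflectance signal changes within the same window. Other combination rules are equally plausible.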

Our experiments show that combining axial force and in-bore needle reflectance produces a fivefold improvement in puncture-detection performance compared to the traditional force-only approach. Furthermore, preliminary user study results suggest that algorithm-generated puncture-detection notifications can help human operators reduce their needle-tip overshoot beyond the target space. These promising outcomes support the future development of instrumented needles that provide medical professionals with puncture-detection assistance for ND.

This research project involves collaborations with Anupam Bisht (University of Calgary), Kartikeya Murari (University of Calgary), Garnette Sutherland (University of Calgary), David Westwick (University of Calgary), and Linhui Yu (University of Calgary).
