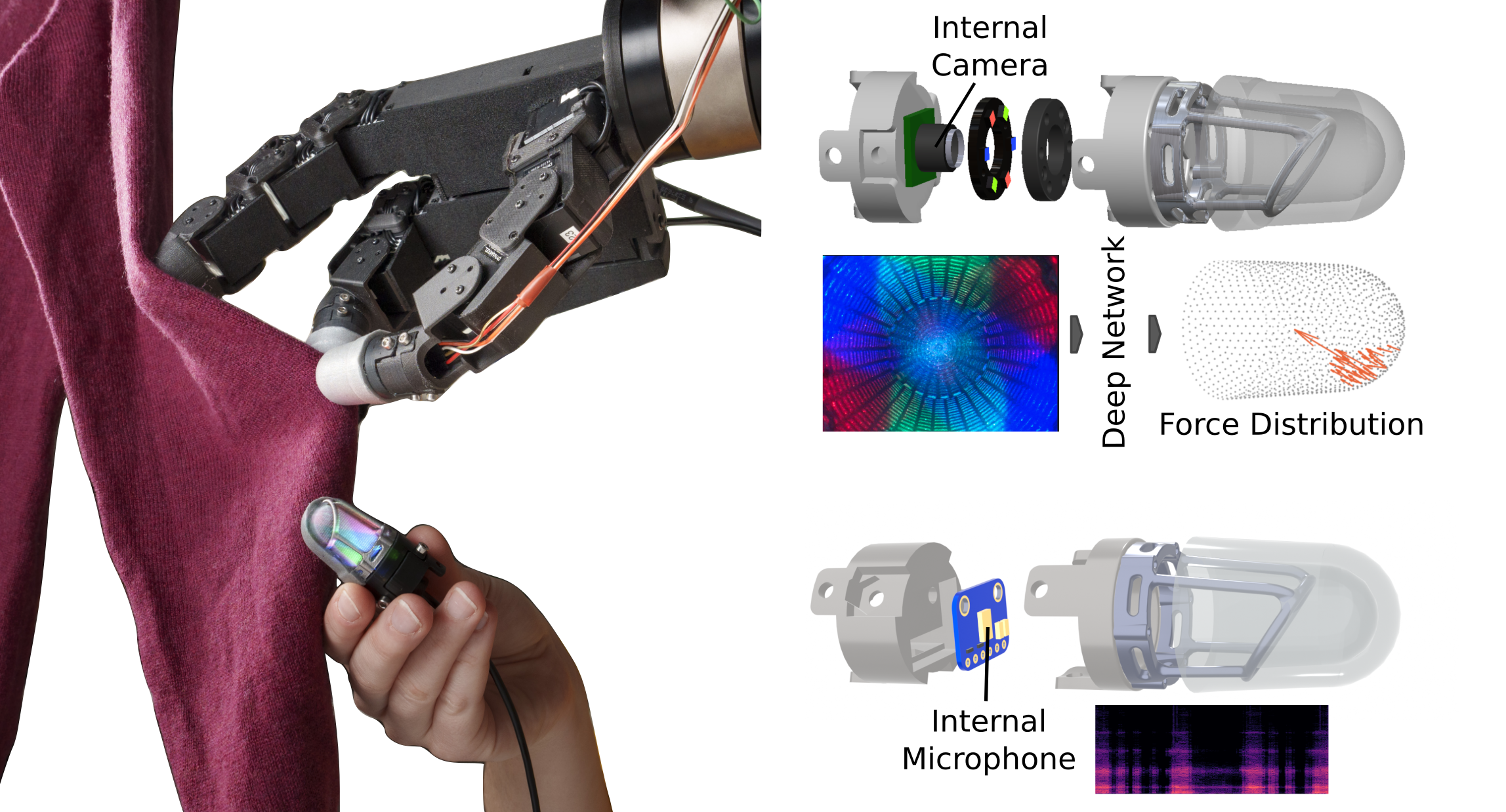

Giving Touch to Soft Robot Fingertips Using Vision, Audio and Machine Learning

Autonomous robots have the potential to become dexterous and work flexibly together with humans. To achieve this goal, their hardware needs to become more robust and provide richer sensory feedback, while their learning algorithms need to become more data-efficient and safety-aware. A clear shortcoming of current commodity robotic hardware is that the tactile sensations it can acquire are either of low quality or missing entirely. In contrast, humans have a rich sense of touch and use it constantly, mostly subconsciously. In fact, if human haptic perception is impaired, dexterous manipulation becomes very challenging or even impossible. High-resolution haptic sensing similar to that of the human fingertip can enable robots to execute delicate manipulation tasks like picking up small objects, inserting a key into a lock, or handing a full cup of coffee to a human.

This project aims to extend the capabilities of robotic manipulation by creating learning-based tactile sensors that capture the rich components of touch information. To this end, we explore vision-based and audio-based technologies processed with machine learning to create fast and robust touch sensing. As part of this project, we present Minsight, a fingertip-sized vision-based tactile sensor based on the Insight technology, capable of sensing forces on its omnidirectional sensing surface down to 0.05 N with an update rate of 60 Hz. This approach uses a camera to monitor changes in internal light intensity and/or color caused by deformations of the sensor's surrounding material due to external contact forces. Because the camera, the centrally positioned sensing component, does not bear the contact load, the sensor is highly durable.
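The sensing principle described above (comparing camera images against a no-contact reference and learning a mapping from the intensity change to force) can be illustrated with a deliberately simplified sketch. It is not Minsight's actual processing pipeline: the coarse grid features, the ridge regression, the synthetic images, and all function names (`contact_features`, `fit_ridge`, `predict_force`) are assumptions made for illustration only.

```python
import numpy as np

def contact_features(frame, reference, grid=4):
    """Mean intensity change relative to a no-contact reference, per grid cell."""
    diff = frame.astype(np.float64) - reference.astype(np.float64)
    h, w = diff.shape
    # Crop so the image divides evenly into grid x grid cells.
    diff = diff[: h - h % grid, : w - w % grid]
    cells = diff.reshape(grid, diff.shape[0] // grid, grid, diff.shape[1] // grid)
    return cells.mean(axis=(1, 3)).ravel()

def fit_ridge(features, forces, lam=1e-3):
    """Ridge regression (closed form) from features to a scalar force."""
    X = np.hstack([features, np.ones((features.shape[0], 1))])  # bias column
    A = X.T @ X + lam * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ forces)

def predict_force(weights, feature_vec):
    """Apply the learned linear map to one feature vector."""
    return float(np.append(feature_vec, 1.0) @ weights)

# Synthetic stand-in data (hypothetical): contact brightens one image patch
# in proportion to the applied force.
rng = np.random.default_rng(0)
reference = rng.uniform(50, 200, size=(32, 32))
pattern = np.zeros((32, 32))
pattern[8:16, 8:16] = 40.0  # assumed brightness change per newton

train_forces = rng.uniform(0.0, 2.0, size=50)
train_feats = np.array(
    [contact_features(reference + f * pattern, reference) for f in train_forces]
)
weights = fit_ridge(train_feats, train_forces)

test_frame = reference + 1.0 * pattern  # simulated 1 N contact
estimate = predict_force(weights, contact_features(test_frame, reference))
print(f"estimated force: {estimate:.3f} N")
```

A real sensor of this kind would likely use a learned nonlinear model on full-resolution images and predict spatially distributed forces; the linear model here only shows the reference-subtraction and regression idea.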

We investigate how Minsight's high-resolution tactile information can be used by learning-based processing methods to create robust manipulation strategies. We are also extending high-resolution vision-based sensing with an additional modality that captures the high-frequency temporal aspects of touch, which are useful for feeling surface textures or fabrics. To do so, we created a microphone-based twin sensor to Minsight, which captures audio with a bandwidth of 50 Hz to 15 kHz, and we use both sensors for dynamic exploration of fabrics with a robot hand.

This research project involves collaborations with Prof. Georg Martius (University of Tübingen) and Laura Schiller (University of Tübingen).
