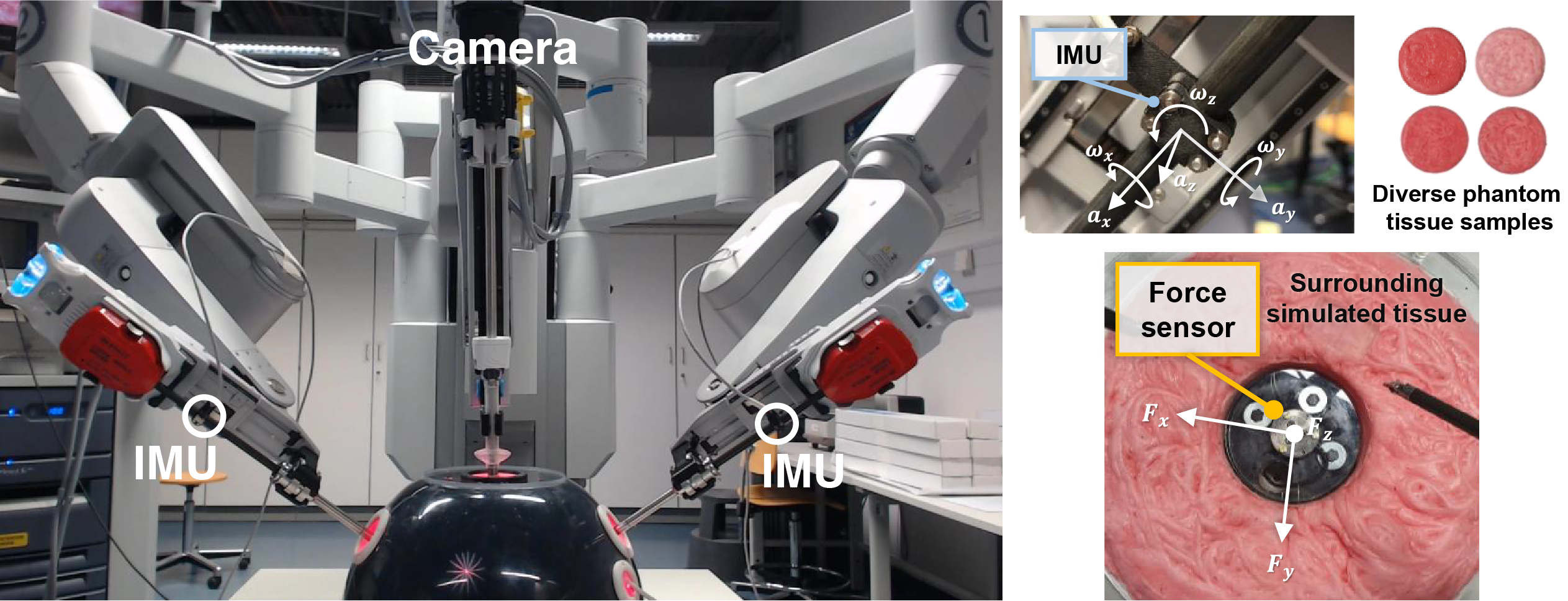

Visual-Inertial Force Estimation in Robotic Surgery

Force feedback is a crucial aspect of surgical procedures. Surgeons rely on their sense of touch to locate tumors, identify blood vessels, and safely manipulate tissue. However, in robot-assisted minimally invasive surgery, surgeons cannot directly feel the forces that the robotic instruments apply to tissue. Without this haptic feedback, they may apply excessive force, potentially damaging tissue, or insufficient force, making the procedure less efficient. Direct force sensing could help, but integrating sensors into surgical instruments raises significant challenges in biocompatibility, sterilization, miniaturization, and robustness.

To overcome these limitations, we propose an indirect force-sensing method that combines monocular endoscopic video with inertial measurement units (IMUs) mounted on the instrument shafts. Our research objective is to demonstrate that visual cues of tissue deformation, combined with inertial measurements of the instruments, can be used to effectively estimate interaction forces during robotic surgery []. We developed this approach using a da Vinci Si robot but designed it to be compatible with other robotic surgery platforms and with traditional laparoscopic surgery.
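Fusing the two modalities presupposes that each video frame is paired with the instrument motion measured at the same instant, and IMUs typically sample faster than endoscopic video. The following Python sketch shows one common way to do this pairing, linear interpolation of an IMU channel onto frame timestamps. The function name `align_imu_to_frames`, the single-channel signal, and the clamping at the ends of the recording are illustrative assumptions, not details of the system described here.

```python
from bisect import bisect_left


def align_imu_to_frames(imu_t, imu_v, frame_t):
    """Linearly interpolate one IMU channel onto video frame timestamps.

    imu_t:   IMU sample times in seconds, sorted ascending (hypothetical input)
    imu_v:   IMU values at those times (e.g. one accelerometer axis)
    frame_t: video frame times in seconds
    Frames outside the IMU time range take the nearest IMU value (clamping).
    """
    out = []
    for t in frame_t:
        i = bisect_left(imu_t, t)  # first index with imu_t[i] >= t
        if i == 0:
            out.append(imu_v[0])  # before (or at) the first IMU sample
        elif i == len(imu_t):
            out.append(imu_v[-1])  # after the last IMU sample
        else:
            # Here imu_t[i - 1] < t <= imu_t[i], so the denominator is positive.
            t0, t1 = imu_t[i - 1], imu_t[i]
            v0, v1 = imu_v[i - 1], imu_v[i]
            w = (t - t0) / (t1 - t0)
            out.append(v0 + w * (v1 - v0))
    return out
```

For example, with an IMU assumed to run at 100 Hz and video at 30 fps, passing `[i / 100 for i in range(101)]` as IMU timestamps and `[k / 30 for k in range(31)]` as frame timestamps yields one IMU value per frame over the first second. Each IMU axis would be aligned separately in the same way.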

We built an experimental setup with four phantom tissue samples of varying stiffness and a force sensor that provides ground-truth data. The sensing system combines images acquired through the endoscope's left channel with inertial measurements from IMUs mounted externally on the instrument shafts. We then collected 230 one-minute recordings of four operators palpating the tissue samples perpendicularly to their surface.
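Because the operators palpated perpendicular to the tissue, each ground-truth reading from the force sensor can be split into a normal component along the tissue's surface normal and a smaller shear component tangent to the surface. The sketch below computes F_n = F · n_hat and F_s = |F - F_n n_hat|. It assumes a 3-axis force reading in newtons and a known surface normal; `split_force` is a hypothetical helper, not part of the authors' pipeline.

```python
import math


def split_force(f, n):
    """Split a 3-D force f (N) into normal and shear parts w.r.t. surface normal n.

    Returns (f_n, f_s): f_n is the signed component along the unit normal
    (its sign depends on which way n points); f_s is the shear magnitude.
    """
    norm_n = math.sqrt(sum(c * c for c in n))
    if norm_n == 0:
        raise ValueError("surface normal must be non-zero")
    n_hat = [c / norm_n for c in n]  # normalize so any-length normals work
    f_n = sum(a * b for a, b in zip(f, n_hat))  # projection onto the normal
    tangential = [a - f_n * b for a, b in zip(f, n_hat)]  # remove normal part
    f_s = math.sqrt(sum(c * c for c in tangential))
    return f_n, f_s
```

With the sensor's z-axis taken as the surface normal, a reading of (0.3, 0.4, -2.0) N splits into a normal component of -2.0 N and a shear magnitude of about 0.5 N.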

After extensively testing different architectures, we developed a deep learning pipeline that uses DenseNet for visual processing and (Bi)LSTM networks for temporal modeling. The system performs strongly in estimating normal forces in the palpation tasks, particularly on stiffer tissues, and can also estimate the smaller shear forces. This indirect sensing approach thus has the potential to offer a practical solution for force estimation in robotic surgery while avoiding the complications of direct sensing.
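Temporal models such as (Bi)LSTMs are usually trained on fixed-length sequences cut from longer recordings. The sketch below slices a per-frame sequence (for instance, per-frame feature vectors) into overlapping windows. The function name `sliding_windows`, the window length, the stride, and the 30 fps frame rate in the example are assumptions for illustration, not the settings used in this work.

```python
def sliding_windows(seq, length, stride):
    """Return overlapping windows of `length` consecutive items, advancing by `stride`.

    Trailing items that do not fill a complete window are dropped.
    """
    if length <= 0 or stride <= 0:
        raise ValueError("length and stride must be positive")
    # Start indices 0, stride, 2*stride, ... while a full window still fits.
    return [seq[i:i + length] for i in range(0, len(seq) - length + 1, stride)]
```

For a one-minute recording at an assumed 30 fps (1800 frames), 30-frame windows with a stride of 10 give (1800 - 30) // 10 + 1 = 178 sequences. Overlapping windows yield more training sequences from the same recordings, and a bidirectional LSTM can then draw on both earlier and later frames within each window.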
