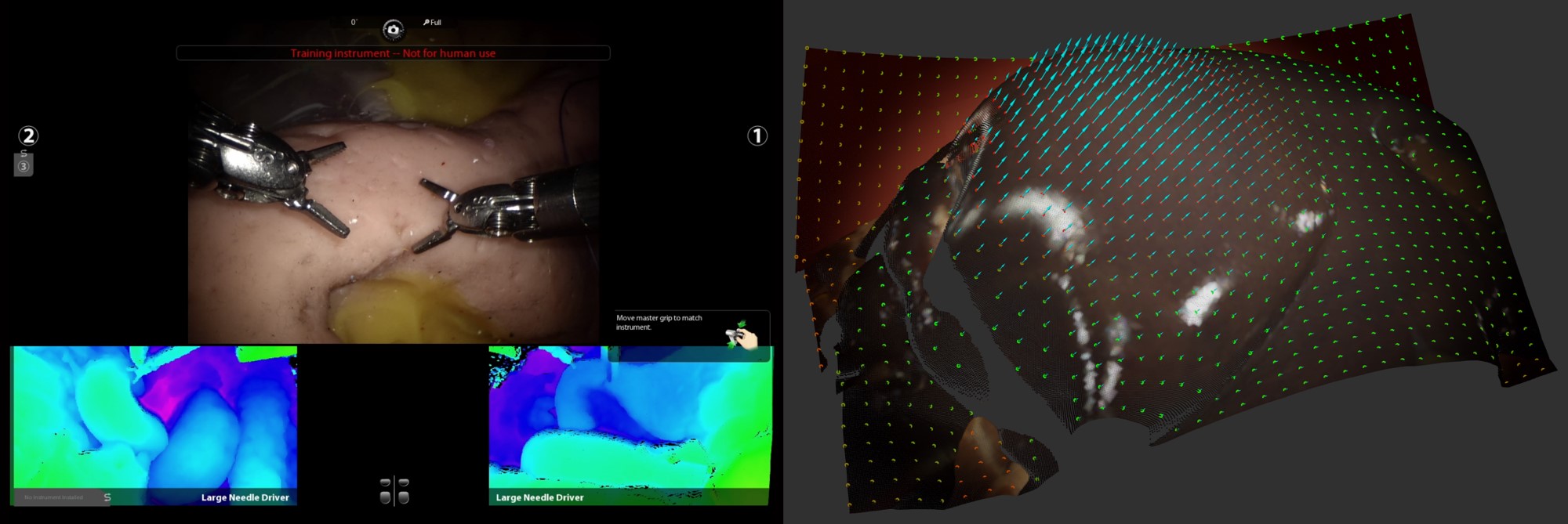

4D Intraoperative Surgical Perception: Anatomical Shape Reconstruction from Multiple Viewpoints

New sensing technologies and advanced processing algorithms are transforming computer-integrated surgery. Although researchers are actively investigating neural perception and learned representations for vision-based surgical assistance, most of these methods build geometric estimates from 2D white-light images. This type of imaging has been the clinical standard for decades, but it will evolve as hyperspectral and depth sensors are miniaturized. Furthermore, visual inspection in minimally invasive surgery is usually performed with a single camera, so multi-viewpoint imaging data are not available. To promote real-time, accurate, and robust spatiotemporal measurements of the intraoperative anatomy, this project pursues three main directions: (i) investigating and prototyping innovative 3D sensing technologies for surgery, (ii) developing multi-viewpoint computational methods for kinematic fusion and scene reconstruction, and (iii) acquiring highly realistic datasets with multiple visual and geometric measurement devices.

We advocate placing one or more lidars (3D laser-based direct time-of-flight sensors) in the abdominal cavity as additional imaging sources []. To characterize how accurately this technology could measure anatomical surfaces during surgery, we conducted ex vivo experiments comparing lidar with established learning-based stereo matching on endoscopic images. Our findings highlight the potential and limitations of lidar for surgical 3D perception and point toward new intraoperative methods that could complement time-of-flight with spectral imaging [https://arxiv.org/abs/2401.10709].
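The two depth sources compared above rest on simple geometric relations. A direct time-of-flight sensor converts the round-trip time of a laser pulse into range as r = c·Δt/2, while a rectified stereo pair converts pixel disparity d into depth as Z = f·B/d (focal length f in pixels, baseline B). The Python sketch below illustrates both relations plus a per-pixel RMSE between two depth maps. It is not the evaluation protocol of the cited study: the function names, the choice of RMSE, and the assumption that lidar returns have already been registered to the camera and converted to per-pixel depth are all illustrative.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def dtof_range(round_trip_time_s):
    """Direct time-of-flight: the pulse travels to the surface and back, so range = c * t / 2."""
    return SPEED_OF_LIGHT * np.asarray(round_trip_time_s, dtype=float) / 2.0


def stereo_depth(disparity_px, focal_px, baseline_m):
    """Rectified stereo: depth Z = f * B / d; non-positive disparities are marked invalid (NaN)."""
    d = np.asarray(disparity_px, dtype=float)
    depth = np.full_like(d, np.nan)
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth


def depth_rmse(estimate_m, reference_m):
    """Root-mean-square error over pixels where both depth maps are finite.

    Assumes (hypothetically) that both maps are already pixel-aligned in the same camera frame.
    """
    est = np.asarray(estimate_m, dtype=float)
    ref = np.asarray(reference_m, dtype=float)
    mask = np.isfinite(est) & np.isfinite(ref)
    return float(np.sqrt(np.mean((est[mask] - ref[mask]) ** 2)))
```

With hypothetical numbers, a 1 ns round trip corresponds to roughly 15 cm of range, and with f = 500 px and a 5 mm baseline, a 25 px disparity corresponds to 10 cm of depth. Because stereo depth scales with 1/d, a fixed disparity error produces a depth error that grows with distance, which is one reason a direct range measurement is an attractive complement to stereo.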

We believe future computer-integrated surgery will benefit from an abundance of intracorporeal measurement sources and from efficient processing of their outputs, with the dual goals of supporting surgeons and laying the foundation for surgical autonomy. To achieve both goals, special attention should be given to intuitive user interfaces and effective visualization strategies [], as well as to computational systems able to generate accurate quantitative estimates. We are currently developing algorithmic solutions to deploy multi-viewpoint systems in minimally invasive surgery [https://arxiv.org/abs/2408.04367].
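One way to picture the kinematic fusion of multiple viewpoints: if each sensor's pose in a shared frame is known (for example, from the robot's joint encoders via forward kinematics), each viewpoint's point cloud can be mapped into that frame with a 4x4 homogeneous transform and the clouds merged. The Python sketch below assumes the poses are given and exact; the function names are hypothetical, and it is not the algorithm of the cited preprint.

```python
import numpy as np


def make_pose(rotation, translation):
    """Build a 4x4 homogeneous transform T_world_sensor from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def to_world(points_sensor, T_world_sensor):
    """Map an (N, 3) array of points from a sensor frame into the shared world frame."""
    pts = np.asarray(points_sensor, dtype=float)
    homogeneous = np.hstack([pts, np.ones((pts.shape[0], 1))])  # (N, 4)
    return (T_world_sensor @ homogeneous.T).T[:, :3]


def fuse_viewpoints(clouds, poses):
    """Concatenate per-viewpoint clouds after expressing each one in the common frame.

    Hypothetical helper: assumes poses[i] is the exact pose of the sensor that produced clouds[i].
    """
    return np.vstack([to_world(c, T) for c, T in zip(clouds, poses)])
```

In practice, kinematically derived poses carry calibration and encoder errors, so such an initial alignment is typically refined afterwards, for instance with iterative closest point (ICP) registration.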

Conducting research in fields like robotics and computer science applied to surgery, a strictly regulated, high-volume, and high-risk practice, comes with the challenge of developing technologies that can (and should) directly impact human lives. In the era of learning systems, the clinical translation of biomedical research, especially algorithmic research, depends strongly on the quality and availability of data for development and lab testing. To contribute in this direction, we are actively building hardware and software infrastructure to collect high-fidelity multi-sensor imaging datasets that will accelerate surgical research and have a significant impact on society.
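The page does not describe how the multi-sensor recordings are synchronized. As one common building block of such data-collection pipelines, the Python sketch below pairs each frame of a reference stream (for instance, an endoscope camera) with the nearest-in-time sample of another stream (for instance, a lidar) and rejects pairs that are farther apart than a tolerance. The function name, the shared clock, and timestamps in seconds sorted in ascending order are assumptions made for illustration.

```python
import bisect


def associate(reference_stamps, other_stamps, max_dt):
    """Nearest-timestamp association between two sensor streams (hypothetical helper).

    For each reference timestamp, return the index of the closest timestamp in
    other_stamps (assumed sorted ascending, same clock), or None if the closest
    one is more than max_dt seconds away.
    """
    matches = []
    for t in reference_stamps:
        # Insertion point: the nearest neighbour is either just before or at this index.
        i = bisect.bisect_left(other_stamps, t)
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_stamps)]
        best = min(candidates, key=lambda j: abs(other_stamps[j] - t), default=None)
        if best is not None and abs(other_stamps[best] - t) <= max_dt:
            matches.append(best)
        else:
            matches.append(None)
    return matches
```

Binary search keeps each lookup logarithmic in the length of the second stream. Hardware triggering, where available, avoids the need for such software association altogether.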

This research project involves collaborations with Cesar Cadena (ETH Zürich), Marco Hutter (ETH Zürich), Sergey Prokudin (ETH Zürich), and Siyu Tang (ETH Zürich).
