Fabrication of HuggieBot 2.0: A More Huggable Robot

Our first HuggieBot research project showed that people are generally accepting of soft, warm robot hugs that squeeze the user and release promptly. In the future, we would like to find out whether robot hugs can provide the same known health benefits as human hugs. Testing this hypothesis requires a new version of HuggieBot that allows more control over hugs than our original robot test platform; we are therefore designing, building, and programming HuggieBot 2.0.

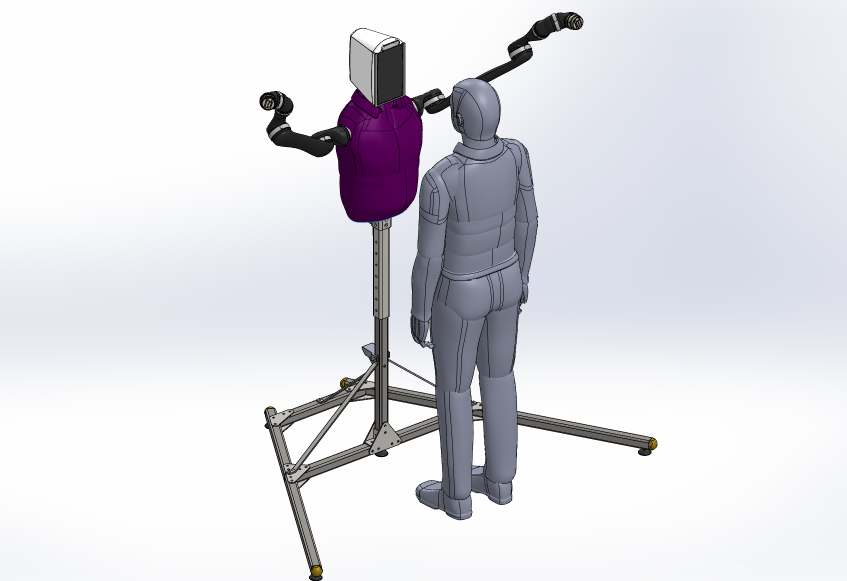

The custom design of HuggieBot 2.0 builds on both positive and negative critiques of the Willow Garage Personal Robot 2 (PR2), which was used in our previous experiment. As shown in the figure above, a V-shaped stand will allow participants to get closer to the robot without having to lean over a large base, which was a pain point with the PR2.

This new platform uses two Kinova JACO 6-DOF arms, which are slimmer, quieter, and smoother than the arms of the PR2. To accommodate various hugging arm placements and to streamline the hugging process, we are currently creating an inflatable chest that includes haptic sensors; the chest will also be soft and heated because users responded favorably to these two features during the previous study. A final element of HuggieBot 2.0 is a face screen that can show either animated faces or pre-recorded video messages to make the hug experience more personal, more enjoyable, and less mechanical for the user.

To test whether HuggieBot 2.0 can provide people with health benefits similar to those of human hugs, we plan to carefully induce mental stress in study participants and then offer a human hug, an active robot hug, a passive robot hug, or self-soothing. We will use objective measures such as heart rate, facial expression, and cortisol and oxytocin levels to evaluate users' physical responses to the different hugs.
