Modeling Finger-Touchscreen Contact during Electrovibration

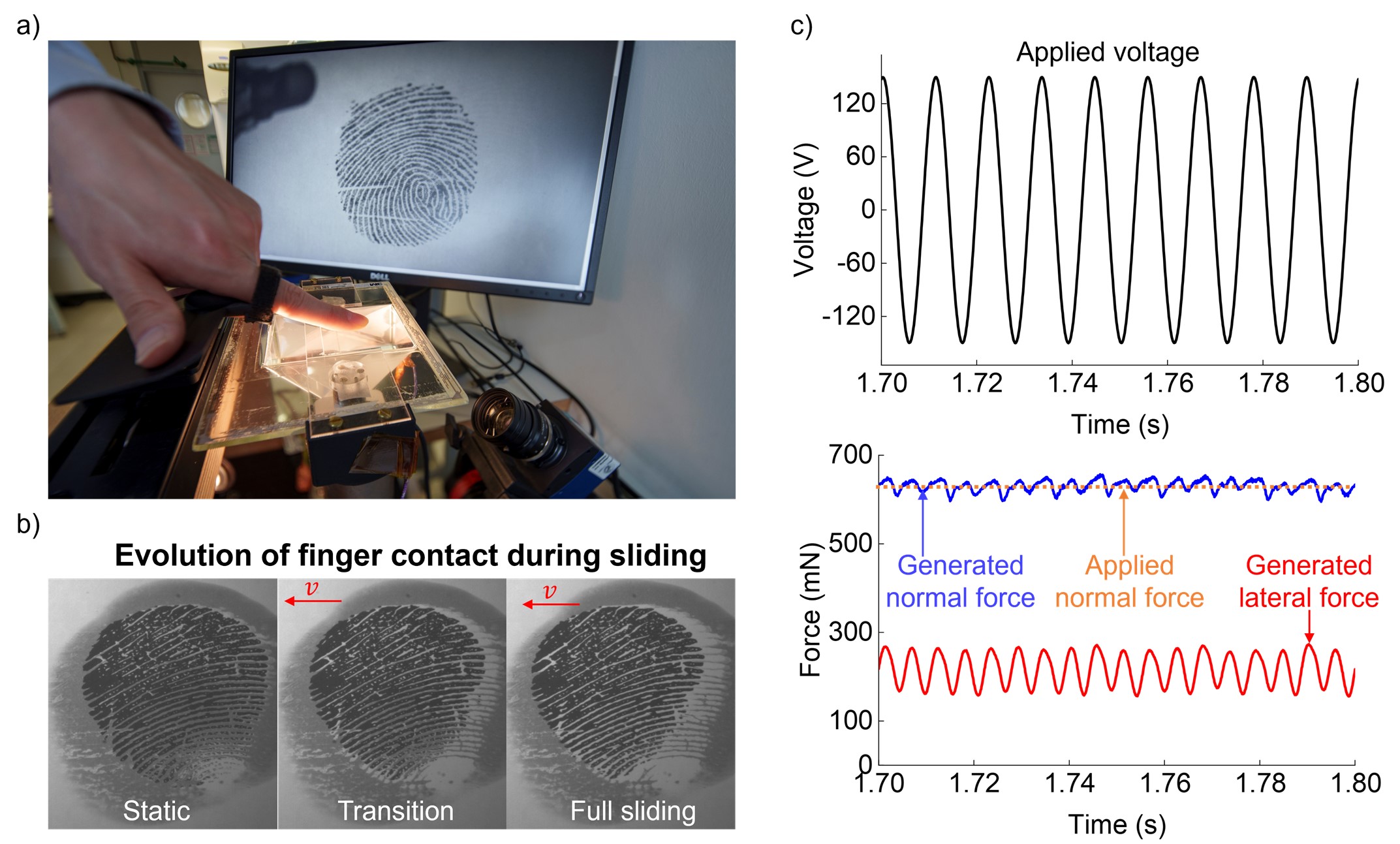

Touchscreens, commonly used in mobile phones and liquid-crystal displays, are a primary interface for digital interaction. When a transparent conductive layer is integrated beneath the surface and driven with an AC voltage, an electrostatic force acts on any human finger that touches the screen. This haptic rendering technique, known as electrovibration, has attracted considerable scientific interest because it can simulate textures and friction and can enhance haptic feedback for touchscreens and virtual reality. While extensive research has focused on human-subject studies and the macroscale interpretation of experimental results, the fundamental physical mechanisms underlying these phenomena remain largely unexplored.
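As a rough illustration of the electrostatic actuation described above, the following sketch uses the textbook parallel-plate approximation, F = ε0·εr·A·V² / (2·d²). This is a common first-order idealization and not necessarily the model used in this project; it ignores the layered structure of the skin, any air gap, and charge leakage. The function names and all default parameter values (contact area, gap, relative permittivity) are hypothetical and chosen only for illustration.

```python
import math

EPS0 = 8.854e-12  # vacuum permittivity in F/m


def electrostatic_force(voltage, area=1e-4, gap=1e-5, eps_r=3.0):
    """Idealized parallel-plate attraction force in newtons.

    area (m^2), gap (m), and eps_r are illustrative assumptions,
    not measured values from the project.
    """
    return EPS0 * eps_r * area * voltage ** 2 / (2.0 * gap ** 2)


def force_waveform(amplitude, freq_hz, duration_s, sample_rate_hz=10000):
    """Sample the force produced by a sinusoidal voltage of the given amplitude."""
    n = int(duration_s * sample_rate_hz)
    return [
        electrostatic_force(
            amplitude * math.sin(2 * math.pi * freq_hz * k / sample_rate_hz)
        )
        for k in range(n)
    ]


def count_peaks(samples):
    """Count strict local maxima in a list of samples."""
    return sum(
        1
        for k in range(1, len(samples) - 1)
        if samples[k] > samples[k - 1] and samples[k] > samples[k + 1]
    )


# One period of a 100 Hz voltage (0.01 s) yields two force peaks,
# because the force depends on the square of the voltage.
one_period = force_waveform(amplitude=100.0, freq_hz=100.0, duration_s=0.01)
peaks_per_voltage_period = count_peaks(one_period)
```

Because the force scales with the square of the voltage, it is always attractive regardless of polarity, and in this idealized model a sinusoidal voltage produces a force that oscillates at twice the input frequency.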

This project investigates how finger motion (stationary or moving at different speeds), finger pressing force, and the applied voltage waveform affect the perception of electrovibration, and it examines the physical mechanisms that govern this type of haptic feedback.

In our first study [], by conducting psychophysical experiments and simultaneously measuring contact forces, we demonstrated that finger motion significantly affects what the user feels. At a given voltage, a single moving finger experiences a much larger fluctuation in electrovibration forces than a single stationary finger, making electrovibration much easier to feel during interactions that involve finger movement. Indeed, only about 30% of participants could detect the stimulus without motion. Part of this difference comes from the fact that relative motion greatly increases the electrical impedance between a finger and the screen. In contrast to some theories, we found that threshold-level electrovibration did not significantly affect the coefficient of kinetic friction in any condition.

Currently, we are investigating the effects of finger contact, finger motion, and environmental factors on perceived sensations using optical, electrical, and mechanical approaches. Additionally, we are developing a multiphysics model to predict friction forces during finger-touchscreen interactions at the nanoscale.

This research project involves a collaboration with Yuan Ma (The Hong Kong Polytechnic University).
