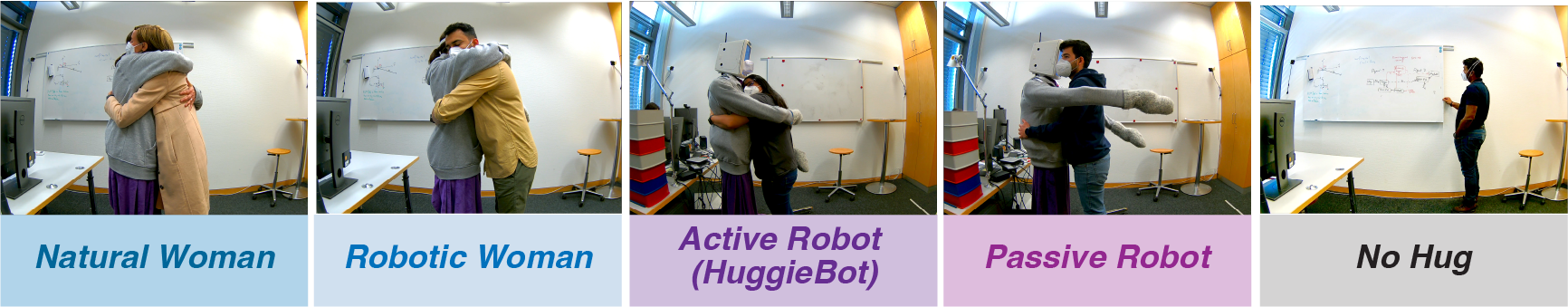

How does HuggieBot compare to a human hugging partner?

Social touch is fundamental to human connection, providing emotional support and reducing stress. When we receive a comforting hug from a trusted person, our bodies respond with physiological changes that promote relaxation, including the release of oxytocin, which counterbalances the stress hormone cortisol. However, in moments of acute stress, not everyone has access to a supportive human hug. This gap in supportive social touch inspired us to design and iteratively improve HuggieBot [], the first interactive hugging robot with visual and haptic perception, and to ask: could a robot provide comfort similar to that of a human hugging partner?

In our latest study [], we investigated how different forms of social touch affect stress recovery by analyzing physiological, neurohormonal, and behavioral responses to various post-stress interactions. Participants first underwent the Trier Social Stress Test (TSST), a well-established protocol for inducing acute psychosocial stress. They then spent ten minutes in one of five post-stress conditions: no hug, a passive robot hug, an active robot hug (HuggieBot), a robotic woman hug, or a natural woman hug. After a rest period, all participants spent ten minutes with the active robot hug.

To assess the effects of these interactions, we collected salivary samples at seven time points and later analyzed them for oxytocin and cortisol. Participants completed surveys at eight time points, and we measured their heart rate continuously throughout the experiment. We also coded six behavioral responses during the hug sessions to quantify how participants interacted with the hugging agent in each condition: the number of hugs participants chose to exchange with the agent, the number of times they rested their head on it, the percentage of the ten-minute session they spent hugging it, and the minimum, average, and maximum duration of their hugs. By combining physiological, hormonal, and behavioral data, we aim to determine the extent to which robotic hugs can provide meaningful emotional support after acute stress.
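As an illustration only, the six behavioral measures could be derived from coded hug intervals roughly as follows. The function name `hug_metrics`, the representation of each hug as a `(start, end)` pair in seconds, the separate head-rest count, and the 600 s session length are assumptions made for this sketch; it is not the study's actual coding pipeline.

```python
from statistics import mean

SESSION_SECONDS = 600.0  # assumed ten-minute hug session


def hug_metrics(hugs, head_rests, session_seconds=SESSION_SECONDS):
    """Compute six behavioral measures from coded hug intervals (hypothetical format).

    hugs: list of (start, end) tuples in seconds from the start of the session.
    head_rests: number of times the participant rested their head on the agent.
    """
    for start, end in hugs:
        if end <= start:
            raise ValueError(f"hug interval ({start}, {end}) has non-positive duration")

    durations = [end - start for start, end in hugs]
    if not durations:
        # No hugs exchanged: duration statistics are undefined, so report None.
        return {
            "num_hugs": 0,
            "num_head_rests": head_rests,
            "percent_time_hugging": 0.0,
            "min_duration": None,
            "mean_duration": None,
            "max_duration": None,
        }

    return {
        "num_hugs": len(durations),
        "num_head_rests": head_rests,
        # Share of the session spent in a hug, as a percentage.
        "percent_time_hugging": 100.0 * sum(durations) / session_seconds,
        "min_duration": min(durations),
        "mean_duration": mean(durations),
        "max_duration": max(durations),
    }
```

For example, three hugs of 30 s, 60 s, and 30 s in a ten-minute session give 20% of the time spent hugging and an average hug duration of 40 s.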