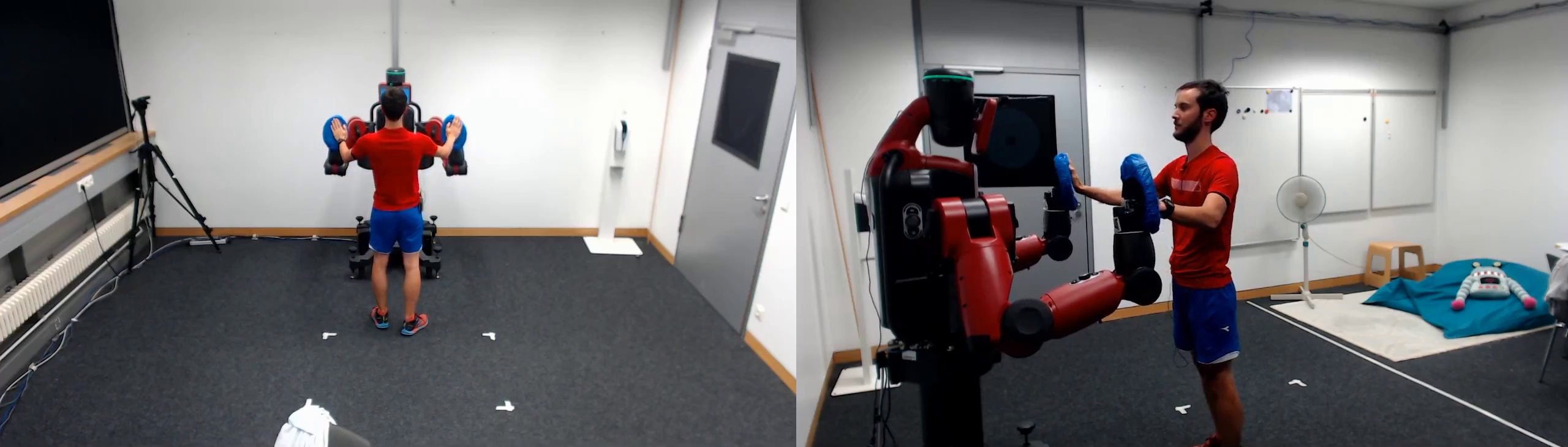

Enhancing HRI through Responsive Multimodal Feedback

We investigate how multimodal feedback (gestures, facial expressions, and sounds) can promote physical activity and enhance task comprehension, exploring how social-physical robots can create engaging, movement-based interactions.

To study such interactions, we developed eight exercise games for the Rethink Robotics Baxter Research Robot that examine how people respond to social-physical interactions with a humanoid robot. These games were developed with input and guidance from experts in game design, therapy, and rehabilitation [] and validated via extensive pilot testing []. Results from our game evaluation study with 20 younger and 20 older adults show that bimanual humanoid robots could motivate users to stay healthy via social-physical exercise []. However, we found that these robots must have customizable behaviors and end-user monitoring capabilities to be viable in real-world scenarios.

To address these needs, we developed the Robot Interaction Studio, a platform for enabling minimally supervised human-robot interaction []. This system combines Captury Live, a real-time markerless motion-capture system, with a ROS-compatible robot to estimate user actions in real time and provide corrective feedback []. We evaluated this platform via a user study in which Baxter sequentially presented the user with three gesture-based cues in randomized order []. The results showed that participants explored the interaction workspace, mimicked Baxter, and interacted with Baxter's hands even without instructions.

Given the importance of gestures in motor learning, we examined how nonverbal robot feedback influences human behavior []. Inspired by educational research, we tested formative feedback (real-time corrections) and summative feedback (post-task scores) across three tasks: positioning, mimicking arm poses, and contacting the robot's hands. We found that both formative and summative feedback improved user task performance. However, only formative feedback enhanced task comprehension.

These findings highlight how real-time, multimodal interactions enable robots to encourage physical activity and improve learning, making them valuable tools for exercise and rehabilitation.

This research project involves collaborations with Naomi T. Fitter (Oregon State University) and Michelle J. Johnson (University of Pennsylvania).
