Human Pose, Shape and Action

3D Pose from Images

2D Pose from Images

Beyond Motion Capture

Action and Behavior

Body Perception

Body Applications

Pose and Motion Priors

Clothing Models (2011-2015)

Reflectance Filtering

Learning on Manifolds

Markerless Animal Motion Capture

Multi-Camera Capture

2D Pose from Optical Flow

Body Perception

Neural Prosthetics and Decoding

Part-based Body Models

Intrinsic Depth

Lie Bodies

Layers, Time and Segmentation

Understanding Action Recognition (JHMDB)

Intrinsic Video

Intrinsic Images

Action Recognition with Tracking

Neural Control of Grasping

Flowing Puppets

Faces

Deformable Structures

Model-based Anthropometry

Modeling 3D Human Breathing

Optical flow in the LGN

FlowCap

Smooth Loops from Unconstrained Video

PCA Flow

Efficient and Scalable Inference

Motion Blur in Layers

Facade Segmentation

Smooth Metric Learning

Robust PCA

3D Recognition

Object Detection

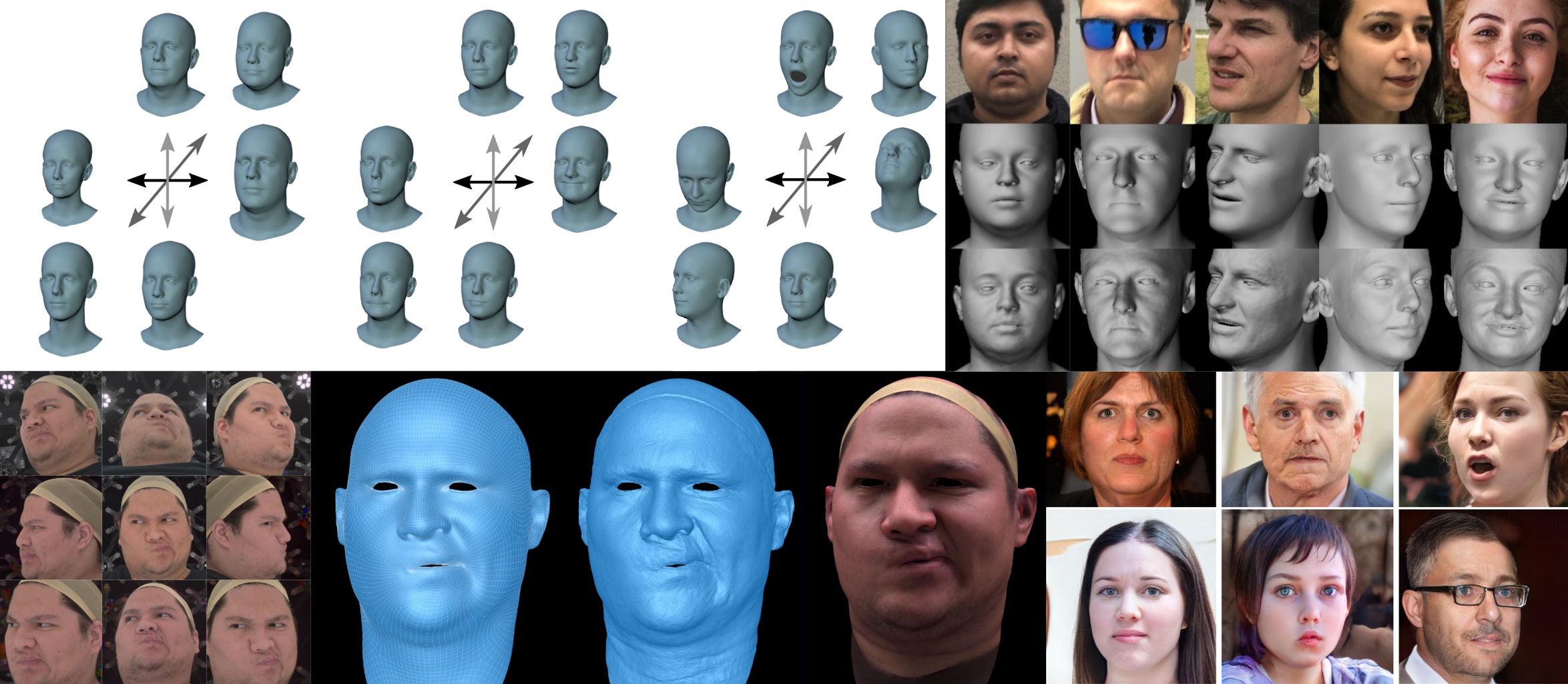

Faces and Expressions

Facial shape and motion are essential to communication. They are also fundamentally three dimensional. Consequently, we need a 3D model of the face that can capture the full range of face shapes and expressions. Such a model should be realistic, easy to animate, and easy to fit to data. See [] for a comprehensive overview of different facial representations.

To that end, we train an expressive 3D head model called FLAME from over 33,000 3D scans. Because it is learned from large-scale, expressive data, it is more realistic than previous models. To capture non-linear expression shape variations, we introduce CoMA [], a versatile autoencoder framework for meshes with hierarchical mesh up- and down-sampling operations. Models like FLAME and CoMA require large datasets of 3D faces in dense semantic correspondence across different identities and expressions. ToFu [

], a geometry inference framework that facilitates a hierarchical volumetric feature aggregation scheme, predicts facial meshes in a consistent mesh topology directly from calibrated multi-view images three orders of magnitude faster than traditional techniques.

To capture, model, and understand facial expressions, we need to estimate the parameters of our face models from images and videos. Training a neural network to regress model parameters from image pixels is difficult because we lack paired training data of images and the true 3D face shape. To address this, RingNet [] directly learns this mapping using only 2D image features. DECA [

] additionally learns an animatable detailed displacement model from in-the-wild images. This enables important applications such as creation of animatable avatars from a single image. Our NoW benchmark enables the field to quantitatively compare such methods for the first time.

Classical rendering methods can be used to generate images using FLAME but these look unrealistic due to the lack of hair, eyes, and the mouth cavity (i.e., teeth or tongue). To address this, we are developing new neural rendering methods. GIF [] combines a generative adversarial network (GAN) with FLAME’s parameter control to generate realistic looking face images.

Members

Publications