Human Pose, Shape and Action

3D Pose from Images

2D Pose from Images

Beyond Motion Capture

Action and Behavior

Body Perception

Body Applications

Pose and Motion Priors

Clothing Models (2011-2015)

Reflectance Filtering

Learning on Manifolds

Markerless Animal Motion Capture

Multi-Camera Capture

2D Pose from Optical Flow

Body Perception

Neural Prosthetics and Decoding

Part-based Body Models

Intrinsic Depth

Lie Bodies

Layers, Time and Segmentation

Understanding Action Recognition (JHMDB)

Intrinsic Video

Intrinsic Images

Action Recognition with Tracking

Neural Control of Grasping

Flowing Puppets

Faces

Deformable Structures

Model-based Anthropometry

Modeling 3D Human Breathing

Optical flow in the LGN

FlowCap

Smooth Loops from Unconstrained Video

PCA Flow

Efficient and Scalable Inference

Motion Blur in Layers

Facade Segmentation

Smooth Metric Learning

Robust PCA

3D Recognition

Object Detection

Smooth Metric Learning

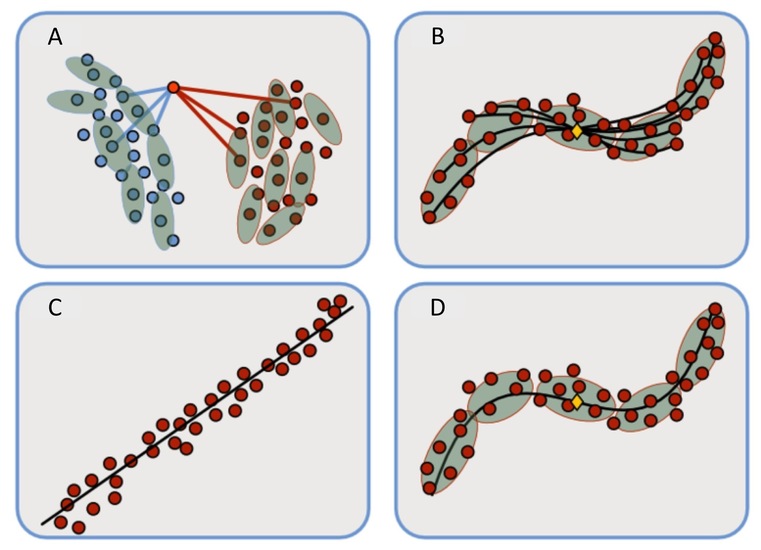

Statistics relies on measuring distances. This simple observation has lead to the idea that the distance measure should change locally to better adapt to the problem. With this strategy even simple classifiers can be competitive with the state-of-the-art because the distance measure locally adapts to the structure of the data. This idea, which is known as Multi-metric Learning, is illustrated in A.

One mathematical issue with this model is that the applied distance measure is not metric as it is not symmetric and the triangle inequality is violated. This has prevented the idea from being used for non-classification tasks, such as regression and dimensionality reduction, where metricity is essential.

In previous models, a local distance function (in the form of a metric tensor) is learned at a discrete set of points. We define a smooth version of this model by defining the metric tensor at any point as a smoothly changing weighted average of the learned metric tensors. From the smoothness we show that the resulting feature space becomes a Riemannian manifold. Since such spaces are metric spaces, we have avoided the above-mentioned issue with previous models.

On Riemannian manifolds, statistical operations such as regression, PCA, Kalman filters, etc., are well-defined and algorithms are readily available. We can, thus, extend multi-metric learning to be useful for these tasks. Note that to achieve this, we only have to ensure that the distance measure changes smoothly throughout the feature space, which is a very natural assumption.

The basic idea behind most statistical operations on manifolds is to express the data in a local tangent space of the manifold and perform Euclidean statistics in this space. As is common, we first compute the mean of the data (defined as the minimizer of the sum of squared distances to the data) and express the data in the tangent at this point. Here a Euclidean model, e.g. PCA, is learned and the resulting model is mapped back to the manifold (which in this case is the original feature space). This process is shown in B-D.

To perform these operations we need algorithms for computing shortest paths and distances according to the smoothly changing distance measure. Furthermore we need algorithms for mapping back and forth between local tangent spaces. We show how these problems can be formulated as the solution to a system of ordinary differential equations.

Video

Members

Publications