Image Segmentation and Semantics

Semantic segmentation is a fundamental problem in computer vision: it requires determining what is where in a given image, video or 3D point cloud. The best-performing recent techniques rely on human annotations as ground truth for training deep neural networks. Such annotations are costly and time-consuming to obtain. Consequently, in this project, we address the following two questions:

- How to acquire accurate training data with minimal human cost?

- How to build fast and efficient models for test time inference leveraging the collected data?

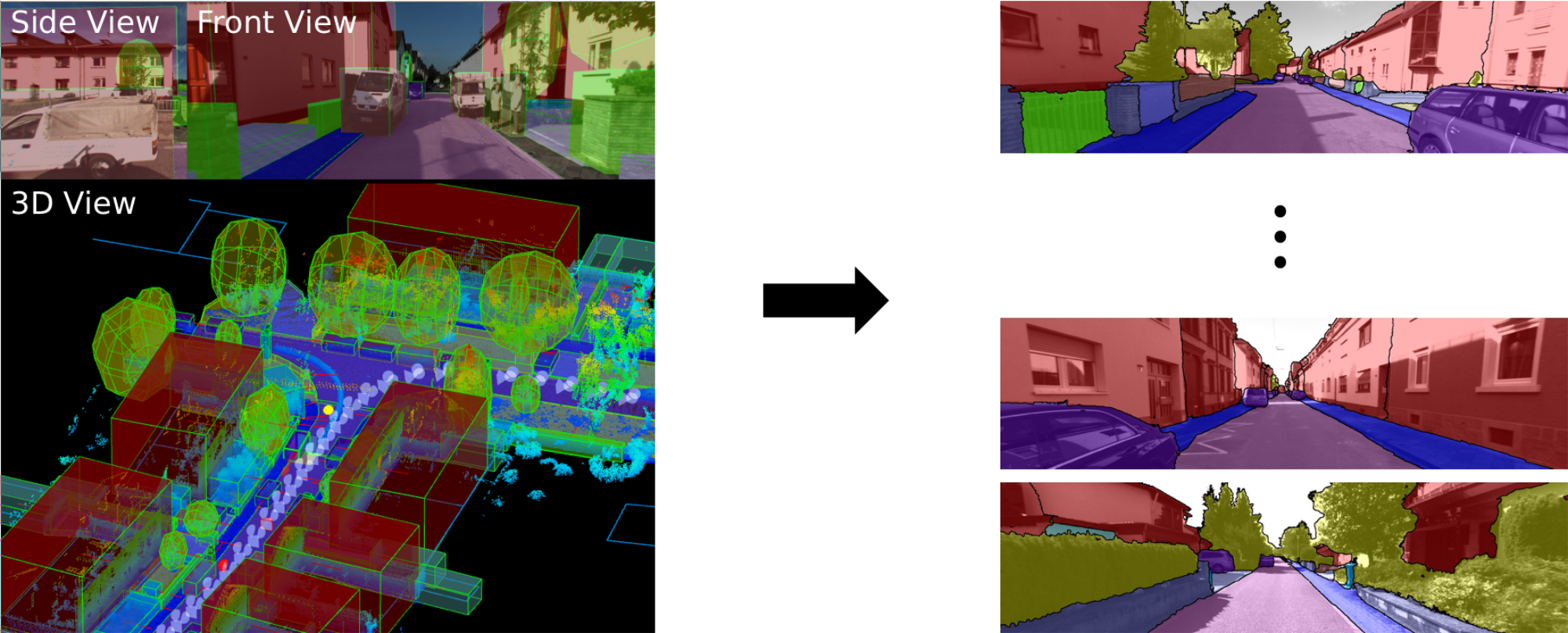

We developed a scalable technique to generate pixelwise annotations for images. For a given 3D reconstructed scene, we roughly annotate the static elements in 3D and transfer these annotations into the image domain using a novel label propagation technique that leverages geometric constraints. We use this method to obtain 2D labels for a novel suburban video dataset that we have collected, resulting in 400k semantic and instance image annotations.
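The basic geometric step behind transferring 3D annotations to images can be sketched as follows. This is only an illustration of projecting labeled 3D points through a pinhole camera with a depth test, not the label propagation technique described above; the function name `project_labels`, the intrinsics `K`, and the toy points are all assumptions made for the example, and the points are assumed to be given in camera coordinates.

```python
import numpy as np

def project_labels(points, labels, K, height, width):
    """Project labeled 3D points (camera coordinates) into a label image.

    Pixels that receive no point keep the label -1. When several points
    land on the same pixel, the closest one (smallest depth) wins.
    """
    points = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    label_img = np.full((height, width), -1, dtype=int)
    depth_img = np.full((height, width), np.inf)

    # Discard points behind the camera.
    in_front = points[:, 2] > 0
    pts, lbl = points[in_front], labels[in_front]

    # Pinhole projection: [u*z, v*z, z]^T = K @ [x, y, z]^T
    uvw = pts @ K.T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)

    for ui, vi, zi, li in zip(u, v, pts[:, 2], lbl):
        inside = 0 <= ui < width and 0 <= vi < height
        if inside and zi < depth_img[vi, ui]:
            depth_img[vi, ui] = zi
            label_img[vi, ui] = li
    return label_img

# Hypothetical toy setup: a 4x4 image and four labeled points.
K = np.array([[10.0, 0.0, 2.0],
              [0.0, 10.0, 2.0],
              [0.0, 0.0, 1.0]])
points = np.array([[0.0, 0.0, 5.0],    # far point, occluded by the next one
                   [0.0, 0.0, 2.0],    # near point on the same pixel
                   [0.1, 0.0, 1.0],    # lands one pixel to the right
                   [0.0, 0.0, -1.0]])  # behind the camera, ignored
labels = np.array([1, 2, 3, 4])
label_img = project_labels(points, labels, K, height=4, width=4)
```

A per-point projection like this leaves most pixels unlabeled, which is one reason a dedicated propagation step is needed to obtain dense pixelwise annotations.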

We also introduced fast and efficient techniques for semantic segmentation that propagate information using the well-established Auto-Context and bilateral filtering techniques.

Bilateral filters are in widespread use due to their edge-preserving properties. We generalize the bilateral filter and derive a gradient descent algorithm so that the filter parameters can be learned from data. This allows us to learn high-dimensional linear filters that operate in sparsely populated feature spaces. We build on the permutohedral lattice construction for efficient filtering.
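To make the edge-preserving property concrete, here is a minimal sketch of a classical Gaussian bilateral filter on a 1D signal. It is a brute-force dense version for illustration only: it uses neither the permutohedral lattice nor learned filter parameters, and the function name and the values of `sigma_space` and `sigma_range` are assumptions chosen for the toy example.

```python
import numpy as np

def bilateral_filter_1d(signal, sigma_space=2.0, sigma_range=0.1):
    """Brute-force Gaussian bilateral filter for a 1D signal.

    Each output sample is a weighted average of all input samples, where
    the weight decays with distance in position and in signal value.
    """
    signal = np.asarray(signal, dtype=float)
    pos = np.arange(signal.size, dtype=float)

    # Pairwise differences in position and in value.
    d_space = pos[:, None] - pos[None, :]
    d_range = signal[:, None] - signal[None, :]

    weights = np.exp(-0.5 * (d_space / sigma_space) ** 2
                     - 0.5 * (d_range / sigma_range) ** 2)
    weights /= weights.sum(axis=1, keepdims=True)  # rows sum to one
    return weights @ signal

# Noisy step edge: flat at 0, then flat at 1.
rng = np.random.default_rng(0)
step = np.concatenate([np.zeros(20), np.ones(20)])
noisy = step + 0.02 * rng.standard_normal(step.size)
smoothed = bilateral_filter_1d(noisy)
```

Because samples on opposite sides of the step differ strongly in value, they receive almost no weight from each other, so the noise is averaged out on each side while the edge stays sharp. In the learned setting described above, the fixed Gaussian kernel is replaced by filter parameters optimized with gradient descent.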

We further introduce a new "bilateral inception" module that can be inserted into existing CNN architectures and performs bilateral filtering, at multiple feature scales, between superpixels in an image. The feature spaces for bilateral filtering and the other parameters of the module are learned end-to-end using standard backpropagation. The bilateral inception module addresses two issues that arise with general CNN segmentation architectures. First, it propagates information between (super)pixels while respecting image edges, thus exploiting the structure of the problem for improved results. Second, it recovers a full-resolution segmentation result from the lower-resolution output of a CNN.
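The two roles of such a module, propagating information between superpixels and recovering a full-resolution result, can be sketched in NumPy as below. This is a simplified illustration rather than the module itself: the Gaussian kernels, the fixed `scales`, the uniform mixing weights `mix`, and all names are assumptions, whereas in the module described above the feature spaces and weights are learned end-to-end.

```python
import numpy as np

def superpixel_bilateral(features, scores, scales=(0.5, 1.0, 2.0), mix=None):
    """Filter per-superpixel class scores with Gaussian kernels at several scales.

    features: (S, D) array of superpixel features (e.g. mean color, position).
    scores:   (S, C) array of class scores per superpixel.
    """
    features = np.asarray(features, dtype=float)
    scores = np.asarray(scores, dtype=float)
    if mix is None:
        # Hypothetical uniform mixing of the scales (learned in practice).
        mix = np.full(len(scales), 1.0 / len(scales))

    # Squared feature distance between every pair of superpixels.
    sq_dist = ((features[:, None, :] - features[None, :, :]) ** 2).sum(-1)

    out = np.zeros_like(scores)
    for w, s in zip(mix, scales):
        kernel = np.exp(-s * sq_dist)
        kernel /= kernel.sum(axis=1, keepdims=True)  # normalize each row
        out += w * (kernel @ scores)
    return out

# Toy example: 4 superpixels covering a 4x4 image, 2 classes.
superpixel_map = np.array([[0, 0, 1, 1],
                           [0, 0, 1, 1],
                           [2, 2, 3, 3],
                           [2, 2, 3, 3]])
features = np.array([[0.0], [0.1], [5.0], [5.1]])  # 0,1 similar; 2,3 similar
scores = np.array([[0.9, 0.1],
                   [0.4, 0.6],
                   [0.2, 0.8],
                   [0.6, 0.4]])

filtered = superpixel_bilateral(features, scores)
# Indexing with the superpixel map yields a full-resolution label image.
pixel_labels = filtered.argmax(axis=1)[superpixel_map]
```

In this toy case, superpixels with similar features pull each other's scores together, so the two uncertain superpixels (1 and 3) adopt the label of their look-alike neighbors, and the final indexing step assigns one label to every pixel.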
